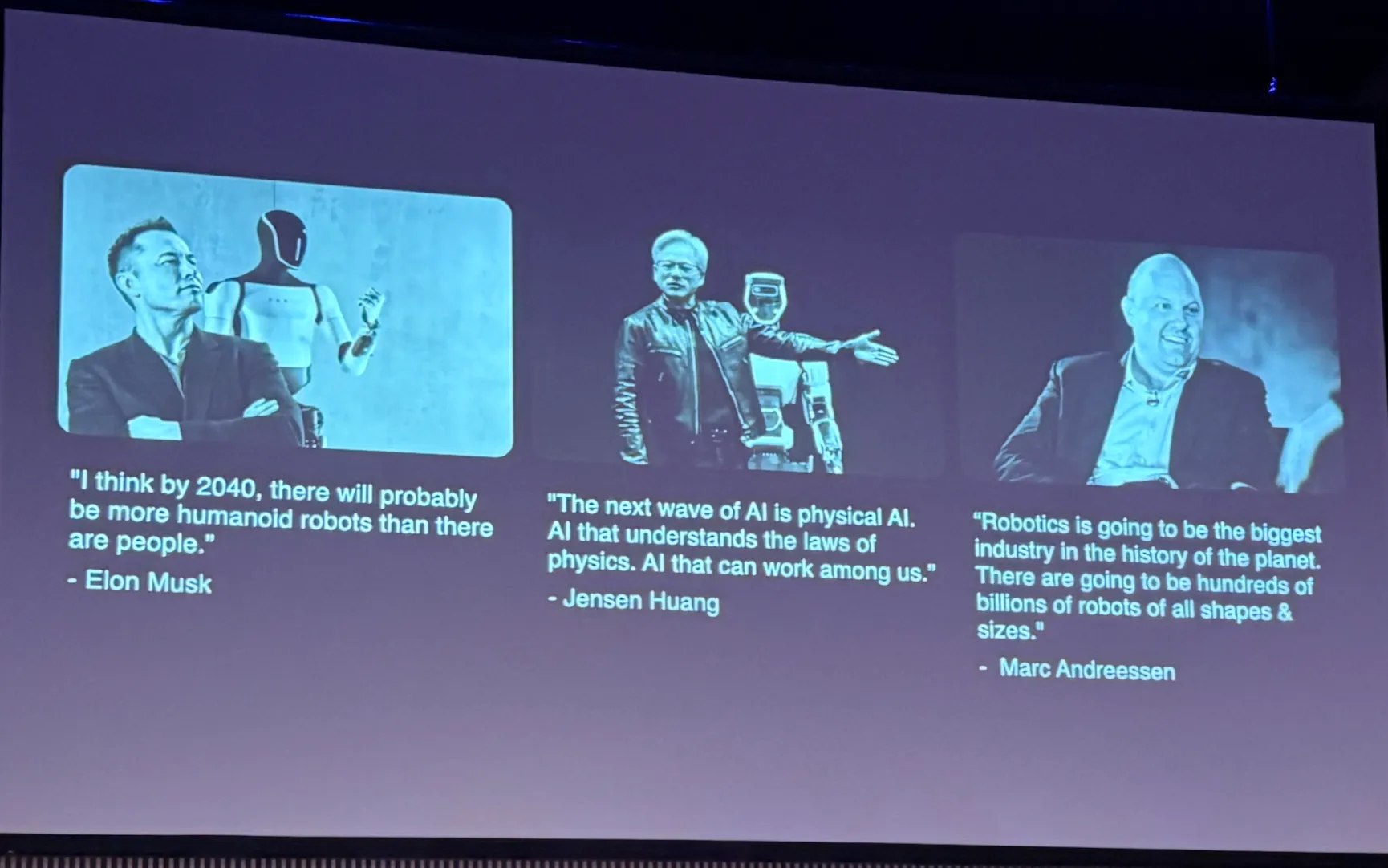

Actuate 2025: The Physical AI Revolution

A detailed narrative for those who couldn't attend.

Written by Bogdan Cristei, PLAUD and Manus AI | September 25, 2025

Executive Summary

Actuate 2025, hosted by Foxglove on September 23-24, 2025, marked a pivotal moment in the physical AI revolution. The conference brought together over 500 attendees from leading robotics companies, research institutions, and investors to witness something remarkable: the transition from robotics demonstrations to production-ready systems achieving unprecedented reliability rates.

Walking through the conference halls, one could sense the industry's transformation. The conversations had shifted from "will this work?" to "how do we scale this safely?" Companies were no longer showing proof-of-concept videos but sharing real deployment metrics, discussing regulatory frameworks, and debating the fundamental architectures that will define the next decade of robotics.

The clearest signal was a shift in the questions being asked. With several companies reporting 99%+ reliability in production, the debate has moved from whether models will work for robotics to how best to train, deploy, and scale them in real-world environments while maintaining the safety and reliability that commercial applications demand.

The Infrastructure Foundation: Foxglove's Strategic Position

Understanding Actuate 2025 requires first understanding its host. Foxglove has positioned itself as the critical infrastructure layer enabling the physical AI revolution, much as AWS enabled the cloud computing transformation. Founded in 2021, the company has become the leading observability platform for robotics developers, providing the data management and visualization tools that let robotics teams focus on their core innovations rather than on infrastructure complexity.

Its customer roster reads like a directory of the most important robotics companies: Shield AI, Chef Robotics, Wayve, Anduril, Dexterity, NVIDIA, Waabi, Aescape, Saronic, and Simbe Robotics. This position gives Foxglove visibility across the entire robotics ecosystem, making it a natural convener for a conference that brings together the complete value chain.

The company's evolution from an open-source tool (Foxglove Studio) to a commercial platform mirrors the broader maturation of the robotics industry. As one attendee put it in an informal conversation, "Foxglove is becoming the AWS of robotics data," the essential infrastructure that enables everything else to function.

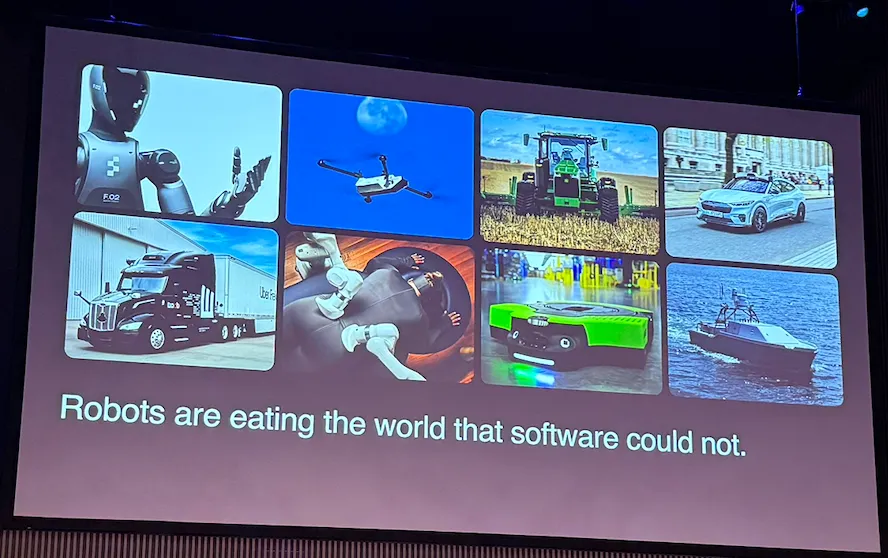

Jim Fan's Vision: The Physical Turing Test

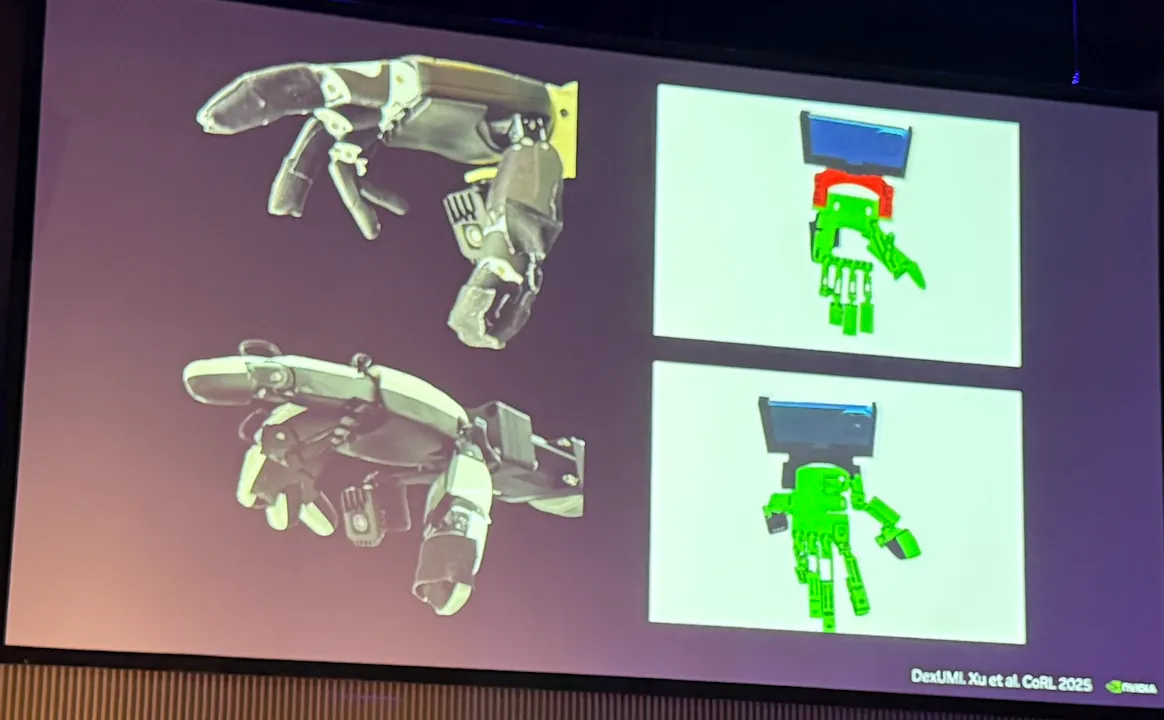

NVIDIA's Jim Fan opened the conference with a keynote that established the intellectual framework for everything that followed. His concept of the "Physical Turing Test" represents more than just another benchmark - it's a fundamental reimagining of how we evaluate robotic intelligence, moving beyond narrow task-specific metrics to assess a robot's ability to understand and interact with the physical world in truly generalizable ways.

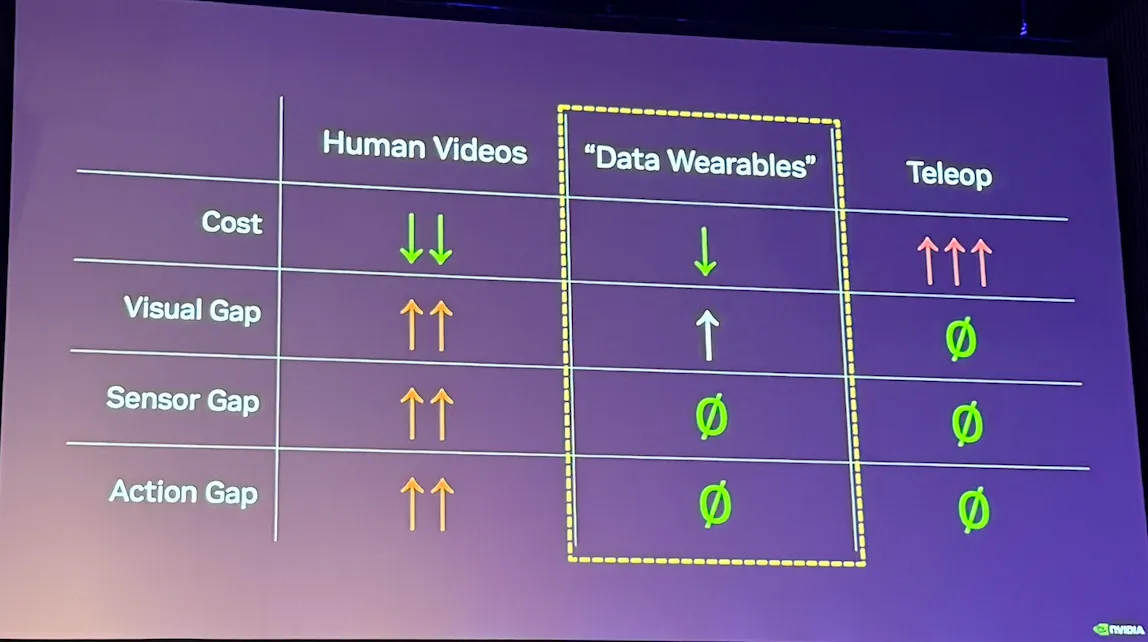

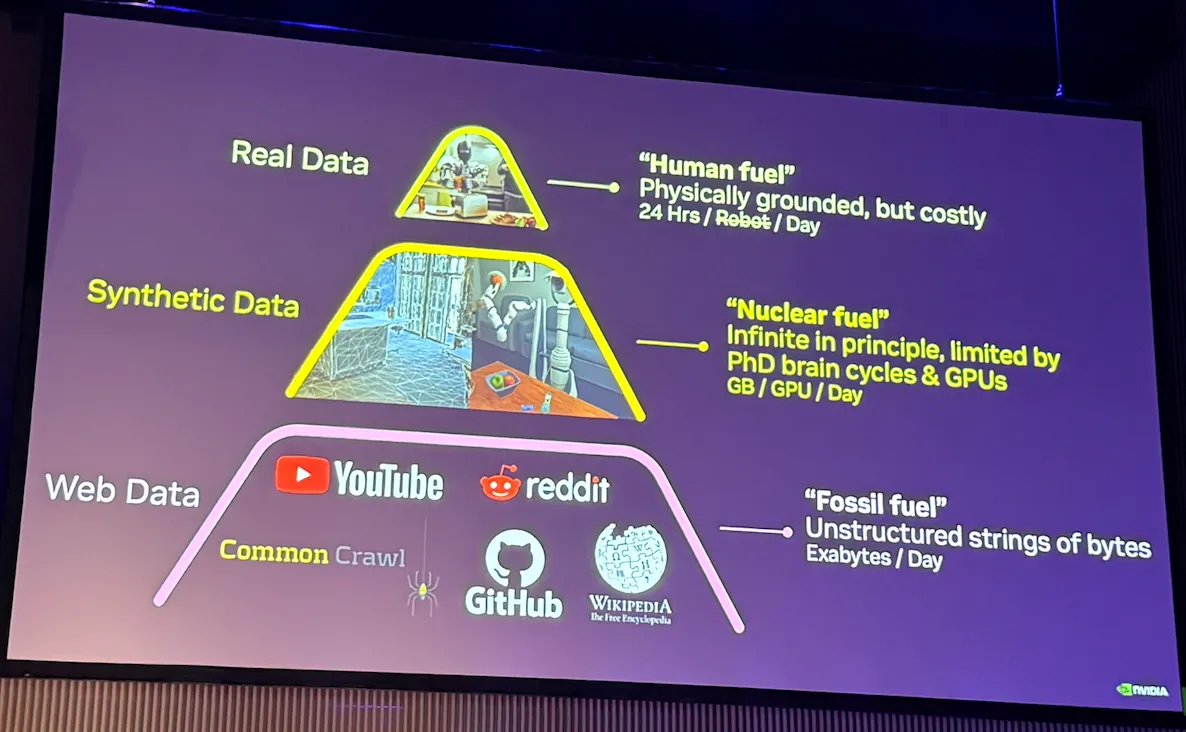

Fan's presentation revealed NVIDIA's strategy to become not just a hardware provider but the foundational platform for the entire physical AI ecosystem. Their approach centers on sophisticated simulation environments that can generate the massive datasets required for foundation model training. This addresses one of robotics' most fundamental challenges: while language models can leverage internet-scale text data, robotics has struggled with the scarcity of high-quality training data.

The technical implications are profound. NVIDIA's Omniverse platform can create photorealistic simulations that generate millions of hours of robotic interaction data across diverse environments and scenarios. More importantly, their synthetic data pipeline can produce edge cases and dangerous scenarios that would be impossible or unethical to collect in the real world.

Fan's emphasis on simulation-first development suggests that successful robotics companies will need to invest heavily in virtual environments before deploying in the real world. This represents a significant shift in how robotics development is approached, with implications for everything from team composition to capital allocation.

Diligent Robotics: The Reality of Hospital Deployment

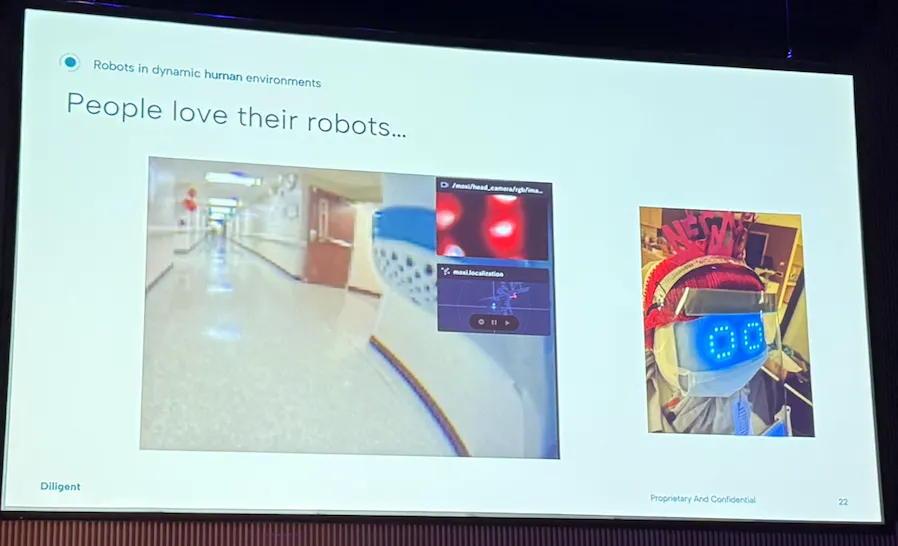

While Fan painted the vision, Vivian Chu from Diligent Robotics provided the reality check. Her presentation on Moxi robots operating in over 40 hospitals offered one of the most detailed accounts of real-world deployment challenges heard at the conference.

The technical challenges Chu described paint a picture of complexity that goes far beyond what most people imagine. Hospitals weren't designed for robots - they were designed for humans. This means Moxi robots must navigate elevator systems with varying button heights and interfaces, access-controlled zones requiring badge authentication, narrow corridors with unpredictable human traffic, and emergency protocols that require immediate path clearing.

The solution required sophisticated sensor fusion combining LIDAR for precise spatial mapping, RGB cameras for visual recognition of infrastructure elements, force sensors for gentle physical interaction with doors and elevators, and audio processing for understanding verbal instructions from staff. But the real breakthrough wasn't technical - it was social.

Chu revealed that Moxi robots are programmed with "politeness protocols" that prioritize human comfort and safety. They slow down in crowded areas, use audio cues to announce their presence, yield right-of-way in all human interactions, and maintain appropriate social distances. These aren't just nice features - they're essential for acceptance in human-centric environments.
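
Behaviors like slowing in crowds, yielding, and keeping social distance can be expressed as a simple speed policy. The sketch below is a hypothetical illustration, not Diligent's implementation: the function name, the stop and comfort distances, and the crowd rule are all assumptions chosen for clarity.

```python
# Hypothetical "politeness" speed policy (not Diligent's code).
# Thresholds are illustrative assumptions.

def polite_speed(max_speed_mps: float,
                 nearest_person_m: float,
                 people_nearby: int,
                 stop_distance_m: float = 0.5,
                 comfort_distance_m: float = 2.0,
                 crowd_threshold: int = 3) -> float:
    """Return a commanded speed (m/s) that slows near people and stops to yield."""
    if nearest_person_m <= stop_distance_m:
        return 0.0  # yield right-of-way: stop and let the person pass
    if nearest_person_m >= comfort_distance_m:
        scale = 1.0
    else:
        # Ramp speed linearly between the stop distance and the comfort distance.
        scale = (nearest_person_m - stop_distance_m) / (comfort_distance_m - stop_distance_m)
    if people_nearby >= crowd_threshold:
        scale *= 0.5  # halve speed in crowded areas
    return max_speed_mps * scale


if __name__ == "__main__":
    print(polite_speed(1.0, nearest_person_m=1.25, people_nearby=0))
```

Here `polite_speed(1.0, 1.25, 0)` returns 0.5, half the top speed, because the nearest person is midway between the assumed stop and comfort distances.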

The most telling indicator of success came in an unexpected form: hospital staff have begun decorating Moxi robots for holidays, putting Santa hats on them for Christmas and bunny ears for Easter. This cultural integration represents something far more valuable than technical metrics - it shows that robots are being accepted as colleagues rather than intrusive technology.

The operational results speak for themselves: over 1 million successful task completions across hospital deployments, a 99.7% successful navigation rate in complex hospital environments, task completion times competitive with human staff, and zero safety incidents involving patient interaction. But perhaps more importantly, Chu shared the lessons learned from failures, providing insights that only come from real-world deployment at scale.

Kyle Vogt's Transition: From Streets to Homes

The fireside chat with Kyle Vogt offered a unique perspective from someone who has seen both the promise and the challenges of autonomous systems at massive scale. His experience leading Cruise through 250,000 driverless rides provides invaluable context for understanding the challenges facing household robotics.

Vogt's reflection on the autonomous vehicle industry revealed hard-won insights about the relationship between scale and complexity. "Every additional nine of reliability becomes exponentially more expensive," he noted, highlighting one of the fundamental challenges facing any robotics deployment. Achieving 90% reliability might be straightforward, but moving from 99% to 99.9% requires fundamentally different approaches and significantly more resources.
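
The error budget behind each nine is easy to compute. The arithmetic below only shows how the allowed failure count shrinks with each added nine; the claim that each nine costs exponentially more to achieve is Vogt's, not something this calculation proves.

```python
# Expected failures per one million task attempts at each reliability level.
# Each added "nine" cuts the permitted failure rate by a factor of ten.
from fractions import Fraction


def failures_per_million(success_rate: str) -> int:
    """Return expected failures per 1,000,000 attempts for a rate like '0.999'."""
    failure_rate = 1 - Fraction(success_rate)  # exact decimal arithmetic, no float error
    return int(failure_rate * 1_000_000)


if __name__ == "__main__":
    for rate in ["0.90", "0.99", "0.999", "0.9999"]:
        print(f"{float(rate):.2%} reliable -> "
              f"{failures_per_million(rate):,} failures per million tasks")
```

Moving from 99% to 99.9% cuts the allowed failures from 10,000 to 1,000 per million tasks, so every rare failure mode that remains must be found and fixed.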

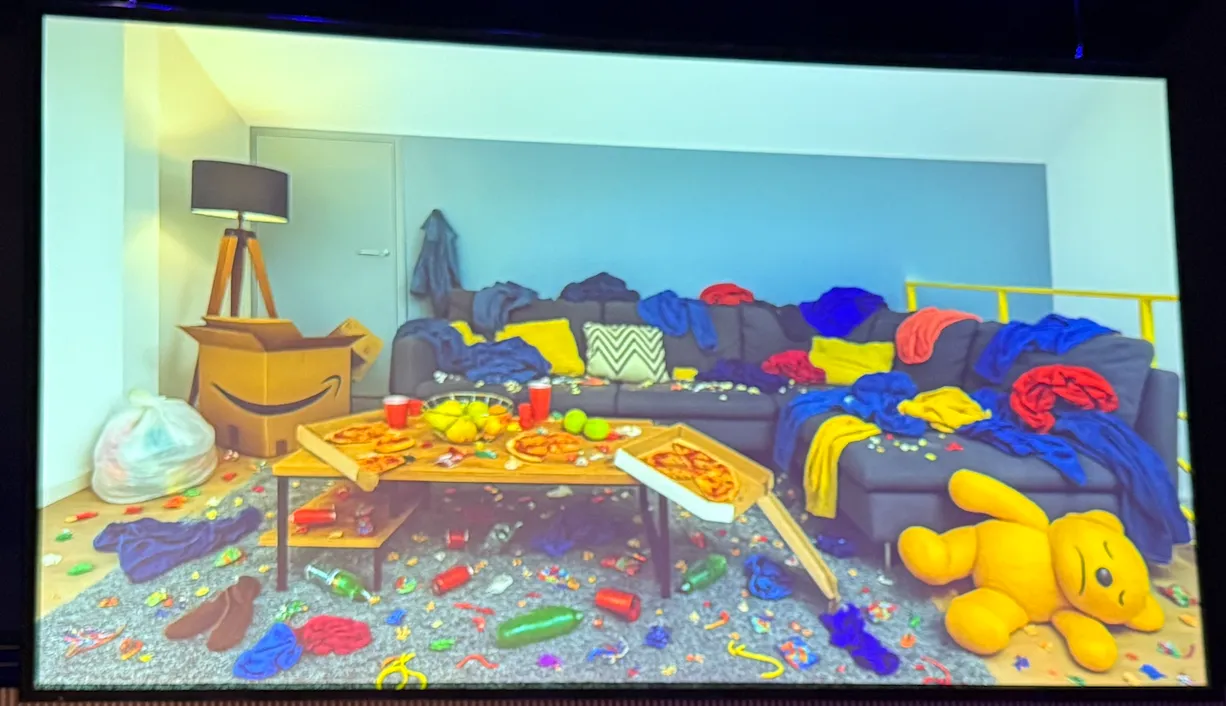

His transition to household robotics with The Bot Company represents a strategic shift based on market opportunity analysis. While people might use autonomous vehicles once or twice daily, household robots could interact with families dozens of times per day, creating a much larger addressable market despite lower per-interaction value.

Household environments bring their own complexities. Unlike the structured road environments that autonomous vehicles navigate, homes vary far more in layout and contents, require delicate manipulation rather than just navigation, raise serious privacy and security concerns, and impose much tighter cost constraints than commercial vehicles.

Vogt's approach focuses on "high-frequency, low-stakes" tasks initially - activities that happen often enough to justify the cost but have minimal consequences if the robot fails. This strategy of starting with simple, reliable tasks and gradually expanding capabilities through over-the-air updates mirrors Tesla's approach and provides a roadmap for other companies entering consumer robotics.

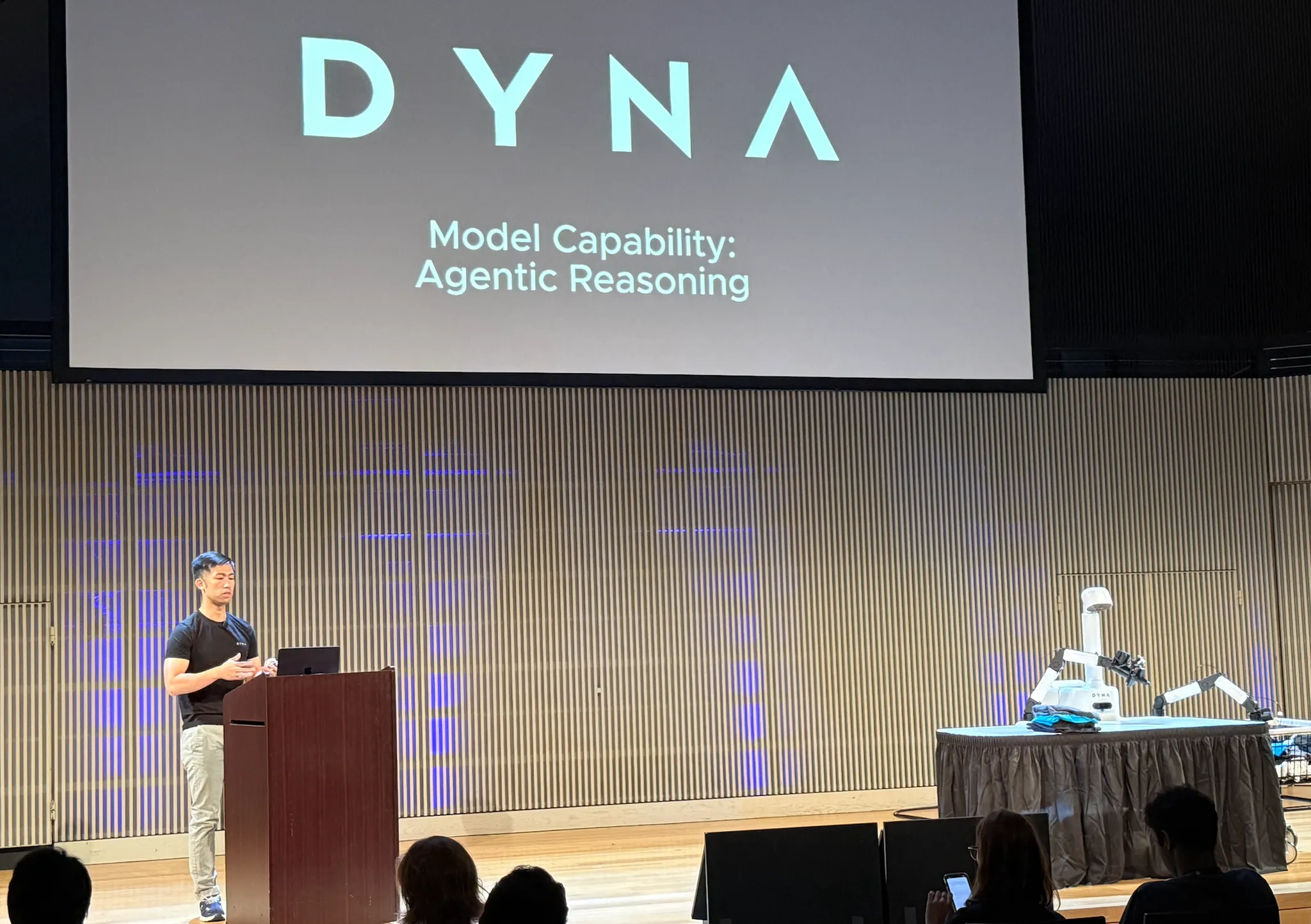

Dyna Robotics: The 99.4% Reliability Breakthrough

Jason Ma's presentation from Dyna Robotics represented a significant technical breakthrough. While Ma spoke, a Dyna robot continuously folded t-shirts with zero human intervention, completing seven perfect folds during his 30-minute presentation. This was more than a staged demo: it was live evidence for Dyna's "performance out of the box" philosophy.

The technical innovation centers on their reward model: a neural network that watches video of a robot performing a task and precisely scores the robot's progress toward completion. That score enables three capabilities. Robots can practice tasks independently and learn from their own successes and failures; the system can distinguish acceptable from unacceptable task completion; and it can recover from errors by adjusting its approach in real time when performance declines.
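
How a progress score might drive error recovery can be sketched in a few lines. This is a hypothetical illustration, not Dyna's architecture: the list of scores stands in for per-frame outputs of a learned reward model (0.0 means not started, 1.0 means complete), and the drop tolerance is an assumed parameter.

```python
# Hypothetical regression detector on reward-model progress scores.
# The reward model itself is assumed; only the decision logic is shown.
from typing import Optional, Sequence


def detect_regression(progress: Sequence[float],
                      drop_tolerance: float = 0.1) -> Optional[int]:
    """Return the first step where progress falls more than `drop_tolerance`
    below the best progress seen so far, or None if it never does."""
    best = float("-inf")
    for step, score in enumerate(progress):
        best = max(best, score)
        if best - score > drop_tolerance:
            return step  # progress has slipped: a cue to back off and retry
    return None


if __name__ == "__main__":
    # Progress climbs, then drops (e.g. the cloth slipped), then recovers.
    print(detect_regression([0.1, 0.3, 0.5, 0.35, 0.6]))
```

Comparing against the running best, rather than only the previous frame, catches slow slides as well as sudden drops; small dips inside the tolerance are treated as noise.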

The most impressive result was a 99.4% success rate over 24 hours of continuous napkin folding, covering more than 850 napkins. The precision requirements are demanding: the difference between an acceptable and an unacceptable fold is just one centimeter. The system also recovered from errors, including accidentally grasping multiple napkins and then singulating them, correcting misaligned stacks, and adapting to varying napkin materials and conditions.

But the real validation came from a real restaurant deployment. Ma showed footage of the robot operating in a Los Angeles restaurant's back office, folding napkins for actual commercial use. This marks the crucial transition from laboratory demonstrations to commercial value creation.

The $120 million Series A funding round that Dyna recently closed represents one of the largest in robotics history, enabling them to scale their foundation model training infrastructure, expand to new task domains beyond manipulation, build commercial deployment capabilities, and hire top talent from major tech companies.

Scaling Autonomy: Real-World Deployment Lessons

Chef Robotics: Multi-Robot Food Assembly at Scale

Vinny Senthil's presentation from Chef Robotics provided detailed insights into one of the most challenging multi-robot coordination problems: high-speed food assembly with multiple robots working on the same production line. Coordinating several robots that place ingredients into moving bowls while maintaining food safety standards is a problem few robotics companies have had to solve.

The core challenge involves multiple robots placing the same ingredient into alternating bowls moving at high speed, requiring precise timing coordination between robots, real-time communication about bowl states, conflict resolution when robots compete for the same bowl, and error recovery when one robot falls behind. Chef Robotics has developed sophisticated vision systems that can detect which bowls already contain specific ingredients with 99.999% accuracy, identify ingredient types and quantities in real-time, assess food quality and reject substandard portions, and track bowl positions on moving conveyor systems.
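
The alternating-bowl scheme and its conflict resolution can be sketched with a shared record of bowl claims. This is a hypothetical illustration, not Chef Robotics' system: `BowlBoard`, `assign_bowls`, and the first-claim-wins rule are assumptions, and a real line would also account for each robot's reach window along the conveyor.

```python
# Hypothetical shared bowl-state board with first-claim-wins conflict resolution.
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class BowlBoard:
    """Stand-in for bowl state shared between robots over the network."""
    filled: Set[int] = field(default_factory=set)
    claims: Dict[int, str] = field(default_factory=dict)

    def try_claim(self, bowl_id: int, robot: str) -> bool:
        """Claim an unfilled, unclaimed bowl; later competing claims are refused."""
        if bowl_id in self.filled or bowl_id in self.claims:
            return False
        self.claims[bowl_id] = robot
        return True

    def mark_filled(self, bowl_id: int) -> None:
        self.filled.add(bowl_id)
        self.claims.pop(bowl_id, None)


def assign_bowls(bowl_ids: List[int], robots: List[str], online: Set[str],
                 board: BowlBoard) -> Dict[int, Optional[str]]:
    """Give bowl i to robot i mod n (alternating bowls). If that robot is
    offline, fall back to the next online robot in the rotation. Bowls that
    are already filled or claimed get None."""
    assignment: Dict[int, Optional[str]] = {}
    n = len(robots)
    for i, bowl in enumerate(bowl_ids):
        owner = None
        for offset in range(n):
            robot = robots[(i + offset) % n]
            if robot in online and board.try_claim(bowl, robot):
                owner = robot
                break
        assignment[bowl] = owner
    return assignment


if __name__ == "__main__":
    board = BowlBoard()
    print(assign_bowls([0, 1, 2, 3], ["A", "B"], {"A", "B"}, board))
```

With both robots online the bowls alternate A, B, A, B; if robot B goes offline, robot A picks up every bowl, which is the simplest form of the fault tolerance described above.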

The robots communicate through a mesh network that enables real-time sharing of bowl states between robots, coordination of ingredient placement timing, load balancing when one robot experiences delays, and fault tolerance if individual robots go offline. Their machine learning innovations include Siamese neural networks to compare bowl contents across different camera angles and K-nearest neighbors classification for real-time ingredient assessment and quality control.
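
In a pipeline like the one described, a Siamese network would typically turn each bowl image into an embedding vector, and K-nearest neighbors would label a new embedding by majority vote among the closest labeled examples. The sketch below shows only the KNN step; the two-number feature vectors and labels are hypothetical stand-ins for real embeddings, since Chef Robotics' actual features were not described.

```python
# Minimal K-nearest-neighbors classifier over feature vectors.
# Feature values and labels below are hypothetical.
from collections import Counter
from math import dist
from typing import List, Sequence, Tuple


def knn_classify(query: Sequence[float],
                 examples: List[Tuple[Sequence[float], str]],
                 k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest examples (Euclidean)."""
    nearest = sorted(examples, key=lambda ex: dist(query, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical features: (normalized hue, fraction of bowl covered).
EXAMPLES = [
    ((0.90, 0.80), "rice_ok"),
    ((0.85, 0.75), "rice_ok"),
    ((0.90, 0.30), "rice_short"),
    ((0.88, 0.25), "rice_short"),
    ((0.20, 0.80), "beans_ok"),
]

if __name__ == "__main__":
    print(knn_classify((0.89, 0.28), EXAMPLES))
```

KNN needs no retraining when a new ingredient is added: appending a few labeled examples is enough, which suits a line whose menu changes often.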

Chef Robotics has served millions of bowls using their multi-robot systems, demonstrating 99.999% accuracy in ingredient detection and placement, throughput competitive with human food assembly lines, and successful operation in multiple restaurant chains. Their success demonstrates that multi-robot coordination can achieve the precision and reliability required for commercial food service.

Zipline: The Last 100 Meters Challenge

Karthik Lakshmanan's presentation from Zipline provided insights from one of the world's most successful autonomous drone operations, with over 100 million autonomous miles flown across eight countries and four continents. The "last 100 meters" problem - the final approach to customer homes - presents unique challenges that only emerge at massive deployment scale.

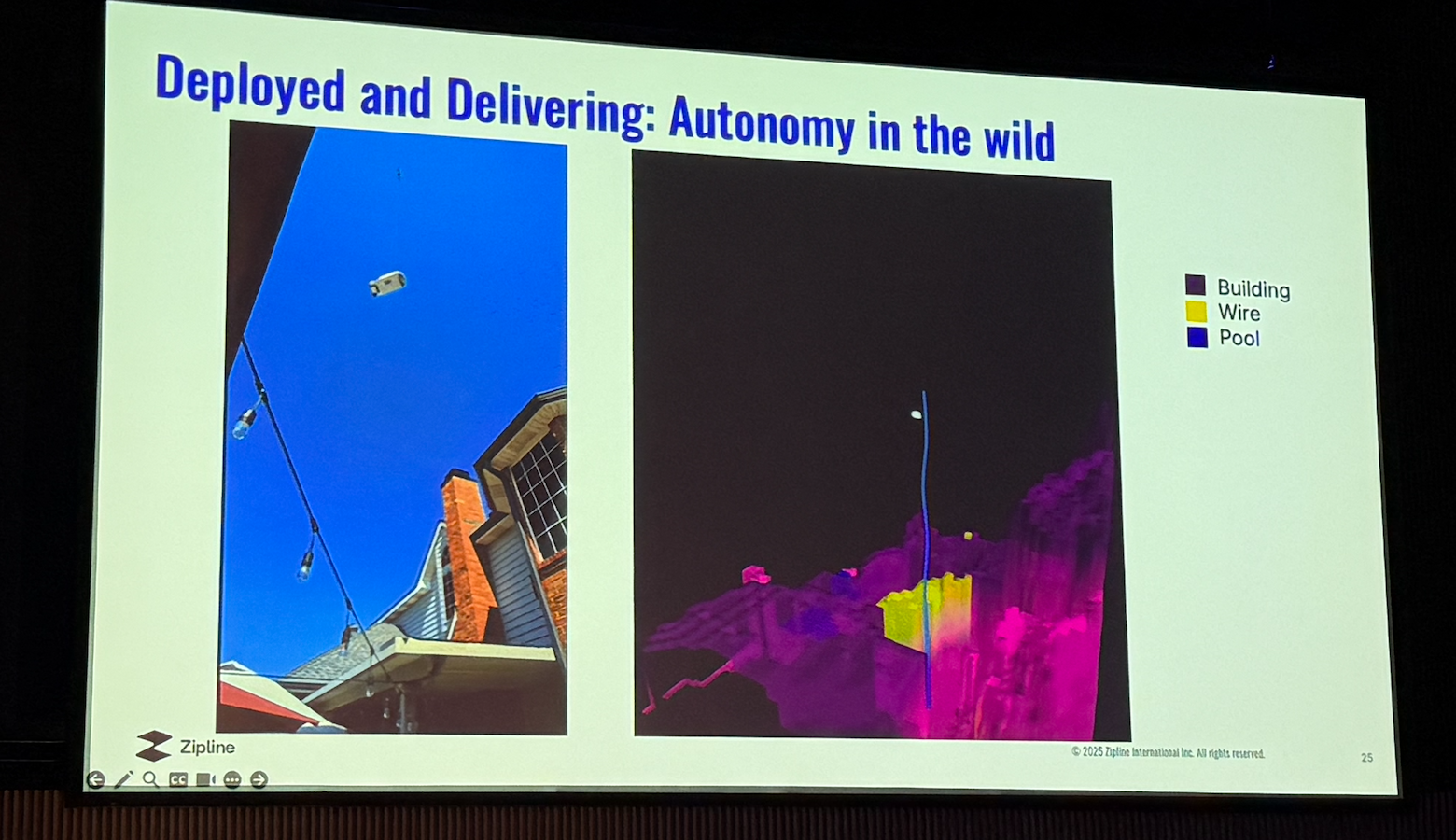

Delivery precision depends on avoiding obstacles such as trees, power lines, and buildings in three dimensions; adapting to wind, rain, and other changing conditions that affect flight dynamics; landing packages at the specific locations customers expect; and meeting the extremely high reliability required to operate over populated areas.

Zipline operates across dramatically different environments, from dense urban areas with tall buildings and complex airspace to rural areas with unpredictable obstacles and limited infrastructure, different regulatory environments across countries, and varying customer expectations and cultural norms. Their Platform 2 architecture incorporates advanced sensor fusion combining LIDAR, cameras, and radar, real-time weather adaptation algorithms, precision GPS with RTK correction for centimeter-level accuracy, and redundant safety systems for operation over populated areas.

Operating across eight countries has revealed that regulatory frameworks vary dramatically and require local adaptation, cultural acceptance of drone delivery depends heavily on initial deployment success, infrastructure requirements must be planned carefully, and local partnerships are essential for successful market entry. Zipline's safety record includes zero injuries to people on the ground across 100+ million miles, 99.9% successful delivery rate, and comprehensive failure mode analysis and mitigation.

Slip Robotics: Fleet Observability at Scale

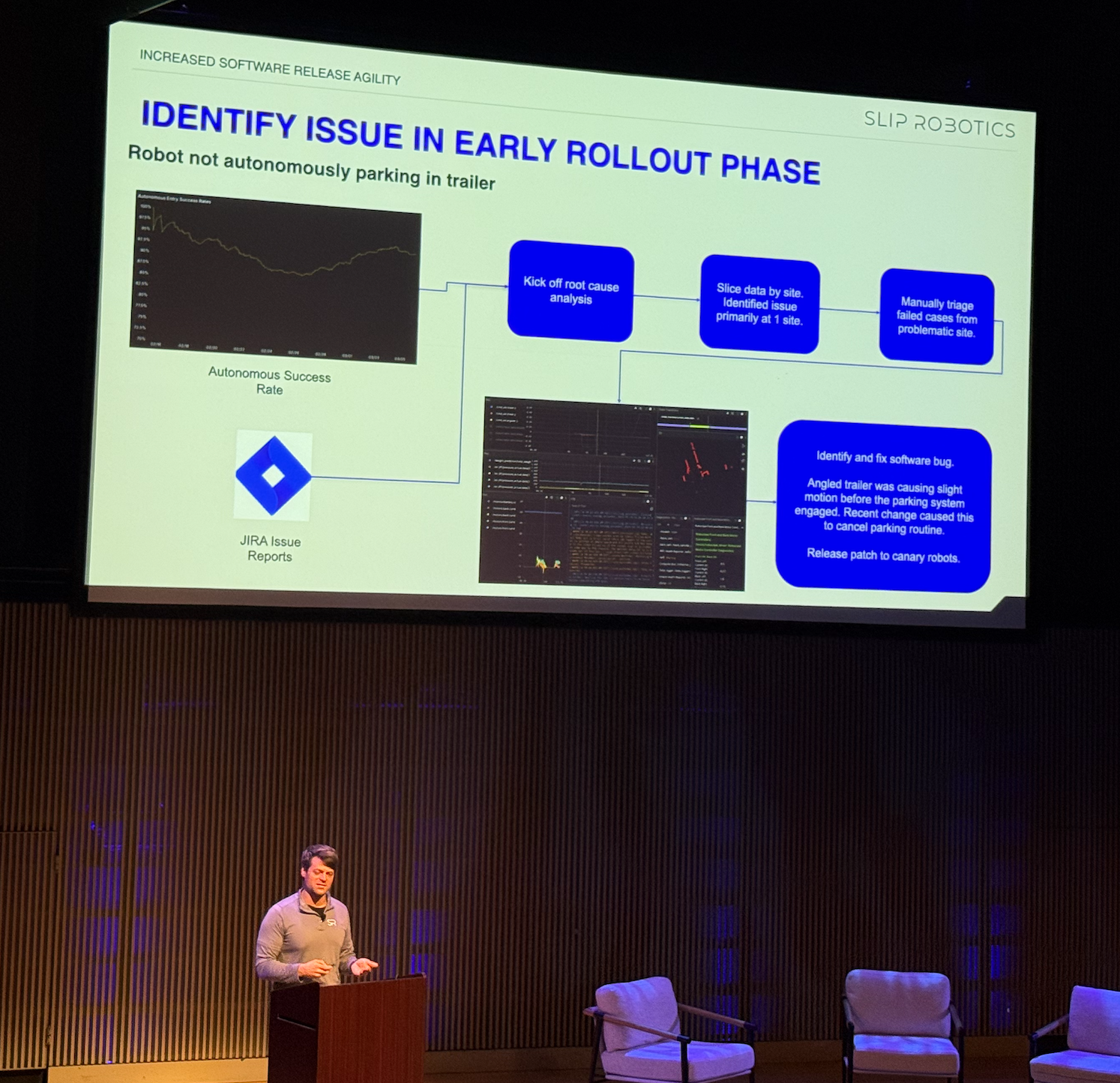

Dennis Siedlak's presentation from Slip Robotics focused on the critical role of observability in scaling autonomous robot fleets, using their SlipBot dock operations as a case study. The transition from prototype to fleet operations introduces challenges that only become apparent at scale: ensuring consistency across all robots in different environments, debugging issues across distributed systems, tracking fleet-wide performance metrics, and continuous improvement through fleet-wide data analysis.

SlipBot operations present unique challenges with dynamic dock conditions that change constantly due to weather, cargo, and human activity, safety requirements for operating around heavy machinery and human workers, uptime demands where customers require high availability for critical logistics operations, and regulatory compliance across different ports and jurisdictions.

Slip Robotics collects data at multiple levels: robot-level sensor data and performance metrics, fleet-level coordination and system-wide performance, environment-level dock conditions and external system integration, and business-level customer satisfaction and operational efficiency. Their integration with Foxglove provides real-time visualization of robot operations, historical analysis of performance trends, automated anomaly detection and alerting, and custom dashboards for different stakeholder needs.
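
The talk did not detail how the automated anomaly detection works. One common, simple approach is a trailing-window z-score on a per-robot metric such as cycle time, sketched below as a generic illustration; the function name, window size, and threshold are assumptions, and this is not a Foxglove API.

```python
# Trailing-window z-score anomaly detector for a fleet metric (generic sketch).
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, List


def rolling_anomalies(values: Iterable[float], window: int = 20,
                      z_threshold: float = 3.0) -> List[int]:
    """Return indices whose value deviates from the mean of the previous
    `window` samples by more than `z_threshold` standard deviations."""
    history: deque = deque(maxlen=window)
    flagged: List[int] = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(v - mu) / sigma > z_threshold:
                flagged.append(i)
        history.append(v)  # every sample, anomalous or not, enters the window
    return flagged


if __name__ == "__main__":
    cycle_times = [10.0, 10.2, 9.8] * 10
    cycle_times[25] = 20.0  # one robot suddenly takes twice as long
    print(rolling_anomalies(cycle_times))
```

A per-robot baseline like this flags regressions after a software rollout without anyone setting absolute limits for each site, though a real deployment would tune the window and threshold to each metric's noise.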

The observability infrastructure has enabled 50% faster identification of performance issues, automated detection of algorithm regressions, data-driven optimization of robot behavior, and rapid deployment of improvements across the fleet. For customers, enhanced observability provides real-time operational status, predictive maintenance to minimize downtime, performance optimization based on usage patterns, and transparent reporting of service level achievements.

Reframe Systems: CAD-to-Robot Construction Workflows

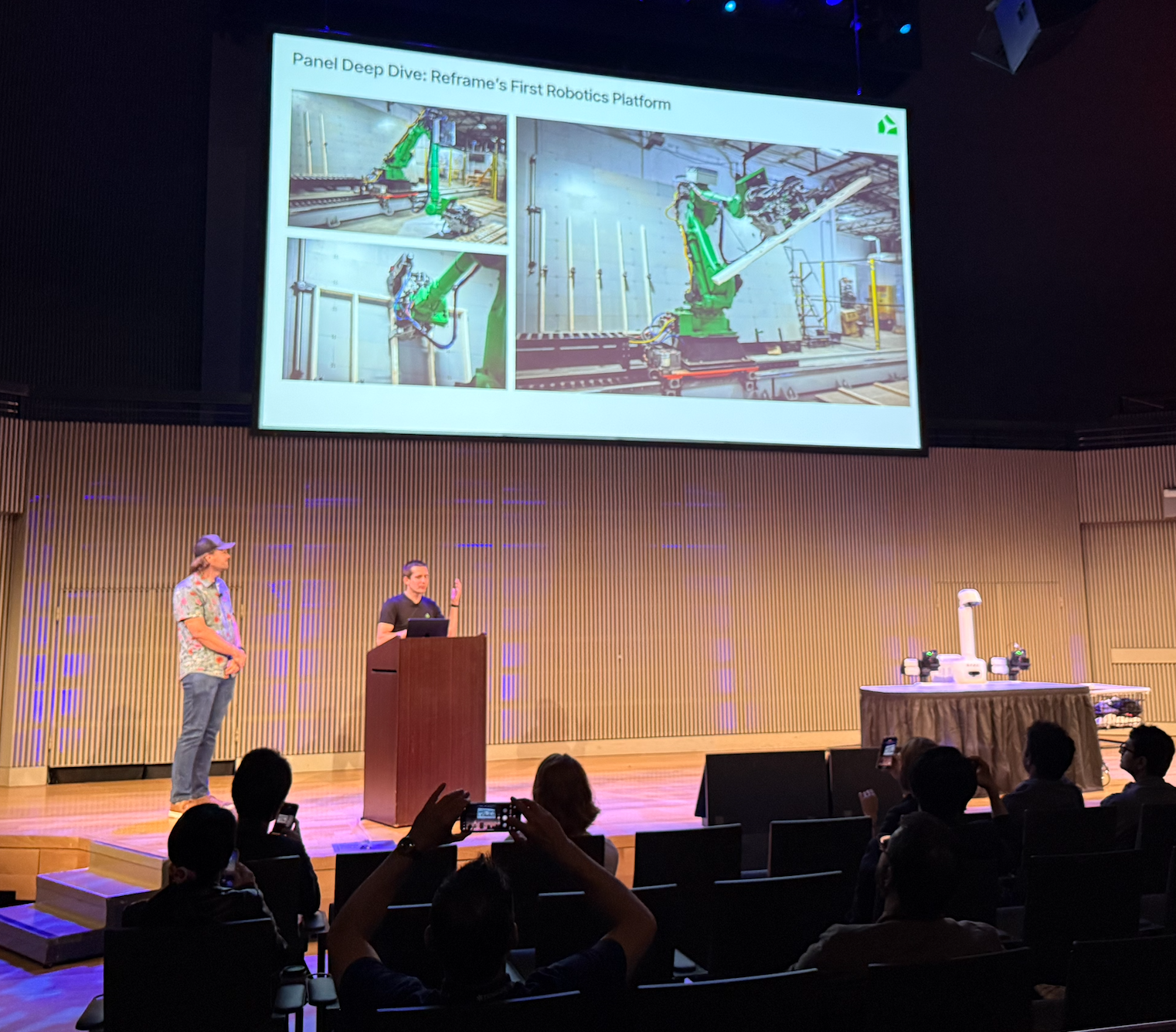

Felipe Polido and Ethan Keller's presentation from Reframe Systems showcased one of the most impressive examples of end-to-end automation in construction, demonstrating how robotics can transform traditional manufacturing processes through direct integration between design and manufacturing systems.

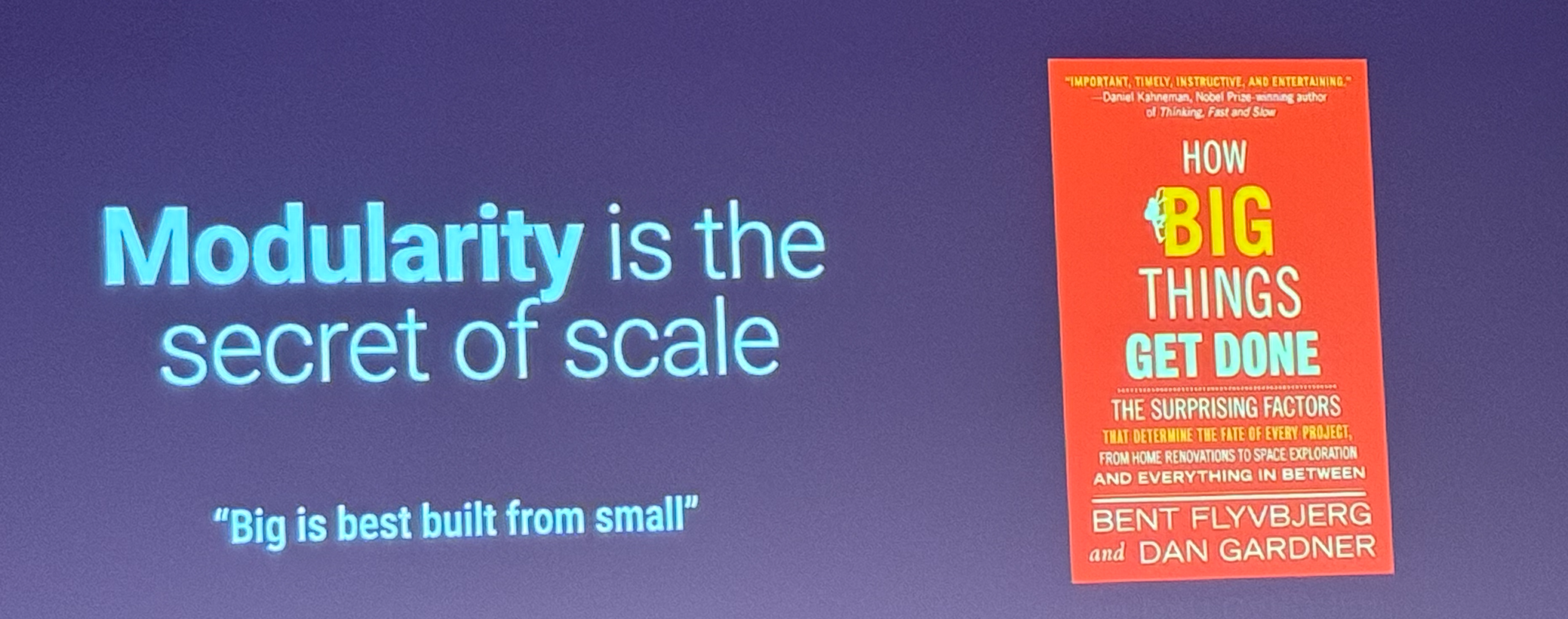

Construction is one of the least digitized major industries, and its productivity growth has lagged other sectors for decades. Traditional construction methods are labor-intensive and skill-dependent, prone to quality inconsistencies, limited by human physical capabilities, and difficult to scale rapidly. Reframe focuses on modular construction of climate-resilient homes, where standardized components can be manufactured in controlled environments and assembled on-site.

Their technical innovation centers on direct CAD-to-robot workflow integration that eliminates traditional translation layers. The seamless integration between Onshape CAD software and their robotic manufacturing systems enables automatic generation of robot instructions from CAD models, real-time validation of manufacturability during design, and immediate feedback to architects about design constraints.
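
The translation step from a design to robot instructions can be illustrated with a deliberately simplified wall panel. Everything here is hypothetical: `WallPanel`, `RobotOp`, and `panel_to_ops` are not Reframe's or Onshape's interfaces, positions are stud centerlines with stud width ignored, and 16-inch on-center spacing is assumed as a common framing convention.

```python
# Hypothetical translation of a wall-panel design into ordered robot operations.
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class WallPanel:
    """Minimal stand-in for a panel exported from a CAD model."""
    length_in: float
    stud_spacing_in: float = 16.0  # assumed on-center spacing


@dataclass(frozen=True)
class RobotOp:
    action: str
    x_in: float  # position along the panel, measured from the left end


def panel_to_ops(panel: WallPanel) -> List[RobotOp]:
    """Emit plates first, then a stud at each on-center mark and one at the far end."""
    if panel.length_in <= 0 or panel.stud_spacing_in <= 0:
        raise ValueError("panel length and stud spacing must be positive")
    ops = [RobotOp("place_bottom_plate", 0.0), RobotOp("place_top_plate", 0.0)]
    positions = []
    x = 0.0
    while x < panel.length_in:
        positions.append(x)
        x += panel.stud_spacing_in
    positions.append(panel.length_in)  # closing stud at the far end
    ops.extend(RobotOp("place_stud", p) for p in positions)
    return ops


if __name__ == "__main__":
    for op in panel_to_ops(WallPanel(length_in=96.0)):
        print(op)
```

Because the operation list is derived from the model, a design change regenerates the instructions directly, and a validation check (for example, rejecting a stud that lands on a window opening) can run at design time and feed back to the architect.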

The robotic system uses advanced computer vision to identify and locate lumber pieces with natural variations, adapt to material imperfections in real-time, ensure precise joint assembly despite material tolerances, and verify assembly quality throughout the process. Their universal magnetic fixturing system provides unprecedented flexibility with magnets positioned anywhere on the work surface, 400+ pounds of holding force per magnet, rapid reconfiguration for different panel designs, and elimination of custom jigs for each panel type.

Reframe's system is in active production: it has completed 1,000+ linear feet of residential walls for customer projects, built 40% of the panels for a recent multi-family project robotically, and currently produces 5 panels per day, with a target of 90% panel automation by the end of the year. The robotic manufacturing has achieved consistent joint accuracy within a 1 mm tolerance, eliminated material waste from human error, delivered standardized quality that meets or exceeds manual construction, and produced detailed quality documentation for each panel.

Their integration with Foxglove enables real-time visualization of robot state and progress, historical analysis of production efficiency, quality control through recorded sensor data, and debugging of assembly issues through replay of sensor data. This data-driven approach enables analysis of production bottlenecks, optimization of robot motion planning, predictive maintenance based on usage patterns, and continuous improvement of assembly processes.

The Great Middleware Debate

ROS vs DIY: The Infrastructure Decision

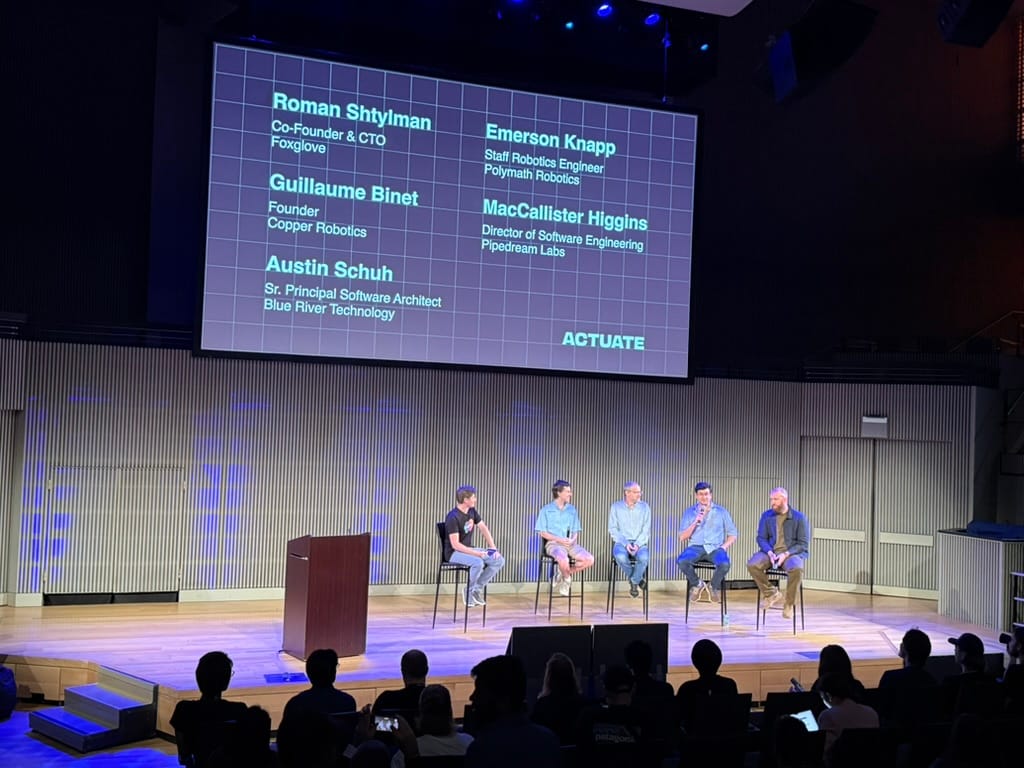

One of the most technically detailed and candid discussions of the conference came in the form of a panel debate about middleware choices in robotics. The conversation revealed fundamental philosophical differences about how to build reliable robotic systems, with each approach representing different trade-offs between development speed, reliability, and long-term scalability.

Austin Schuh from Blue River Technology made a compelling case for custom middleware, drawing from their experience with agricultural spraying systems that require microsecond-level timing precision. Missing deadlines by even 2-5 milliseconds can result in crop damage or chemical waste, making deterministic behavior not just a performance issue but an economic necessity. Their custom AOS (Autonomous Operating System) provides predictable, repeatable performance with detailed timing information that enables rapid problem resolution when issues occur in the field.
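
Detailed timing logs make checks like the following straightforward. This is a hypothetical sketch, not part of Blue River's AOS: it compares logged cycle start times against an ideal fixed-rate schedule and counts cycles that started later than an assumed 2 ms tolerance.

```python
# Hypothetical timing-log analysis for a fixed-rate control loop.
from typing import Sequence, Tuple


def timing_report(cycle_starts_s: Sequence[float], period_s: float,
                  tolerance_s: float) -> Tuple[float, int]:
    """Return (worst absolute deviation from schedule, number of late cycles).

    Lateness is measured against the ideal schedule t0 + i * period_s, so
    small delays cannot accumulate unnoticed as schedule drift.
    """
    if not cycle_starts_s:
        return 0.0, 0
    t0 = cycle_starts_s[0]
    worst = 0.0
    misses = 0
    for i, t in enumerate(cycle_starts_s):
        lateness = t - (t0 + i * period_s)
        worst = max(worst, abs(lateness))
        if lateness > tolerance_s:
            misses += 1
    return worst, misses


if __name__ == "__main__":
    # A 100 Hz loop where the fourth cycle started 3.5 ms late.
    starts = [0.000, 0.010, 0.020, 0.0335, 0.040]
    print(timing_report(starts, period_s=0.010, tolerance_s=0.002))
```

Measuring against the ideal schedule rather than the previous cycle is the key design choice: a loop that is always 0.1 ms late relative to its last cycle would otherwise look healthy while drifting far off schedule.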

Guillaume Binet from Copper Robotics echoed similar themes while adding performance considerations. ROS's event-driven architecture can introduce latency through message passing overhead, while custom middleware eliminates these bottlenecks. More importantly, ROS systems tend to accumulate features and dependencies over time, creating performance degradation that can be difficult to diagnose and resolve.

The counter-argument came from Emerson Knapp at Polymath Robotics, who provided a pragmatic defense of ROS adoption. The extensive ecosystem enables rapid development and testing of new concepts, with engineers trained on ROS able to transfer skills between companies, reducing hiring and training costs. The large ROS community provides solutions to common problems and extensive documentation that can accelerate development significantly.

MacCallister Higgins from Pipedream Labs offered perhaps the most nuanced perspective, having worked with both approaches across different companies and applications. The choice between ROS and custom middleware depends heavily on application requirements, team size, and development timeline. For companies frequently changing hardware configurations, ROS's hardware abstraction layers provide significant value, while small teams benefit from ROS's pre-built components even as larger teams can afford custom development.

The discussion revealed hidden costs that only emerge at scale. Real-time complexity becomes apparent when someone accidentally adds a mutex without priority-inversion protection (such as priority inheritance) or introduces memory access patterns that degrade processor cache performance. ROS systems can also hit scalability walls in production, when event-driven architectures create bottlenecks that weren't apparent during development.

Perhaps most importantly, the panel highlighted developer quality of life considerations that are often overlooked. In competitive talent markets, forcing engineers to use tools they perceive as inferior can impact retention, even when those tools are better for the business.

Wayve's Global Generalization

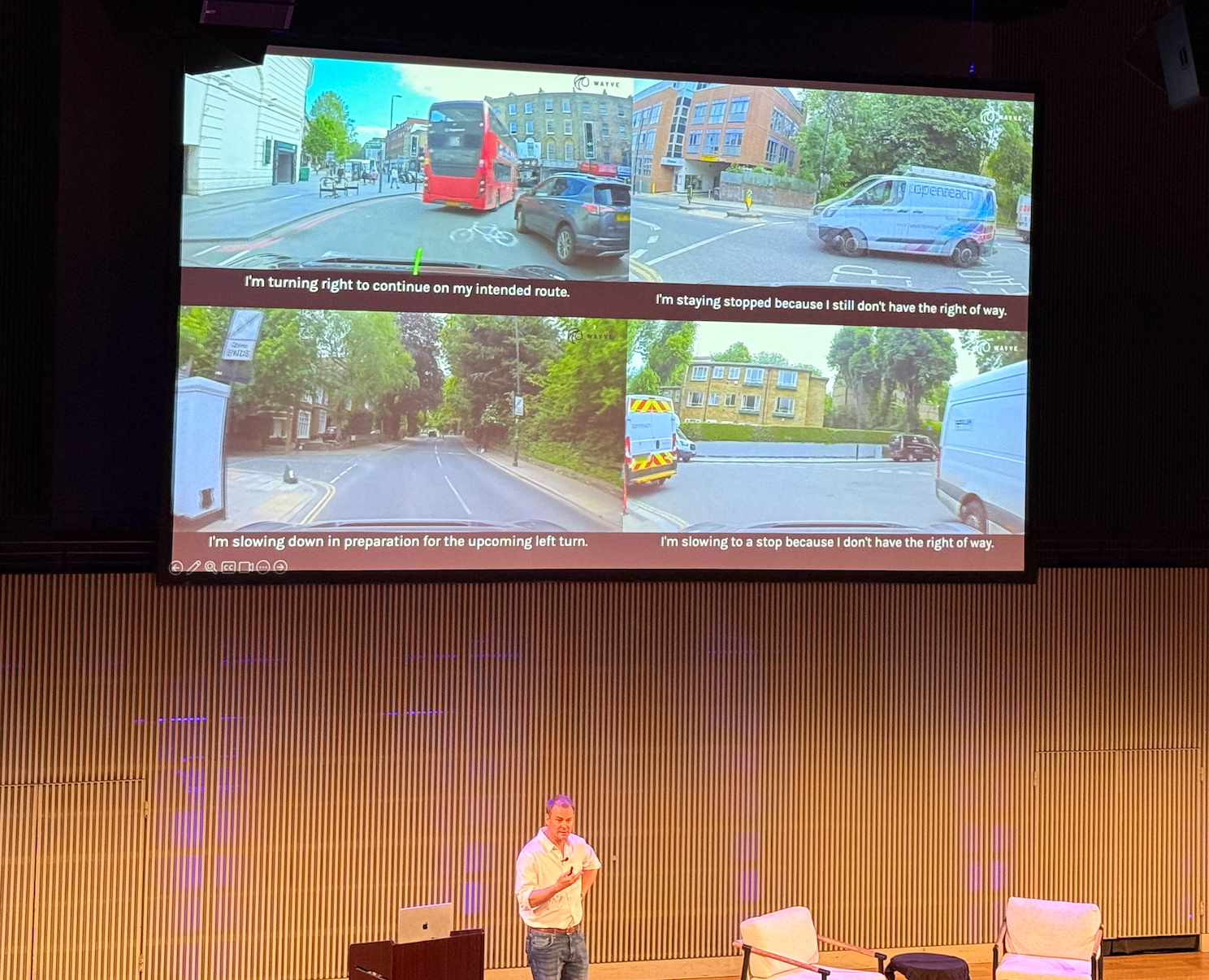

James Shotton's keynote from Wayve provided one of the most comprehensive technical presentations on end-to-end autonomous driving, showcasing remarkable progress in generalization across different countries and driving conditions. Their AV 2.0 paradigm represents a fundamental departure from traditional autonomous vehicle approaches that rely on expensive sensor suites, detailed HD mapping, and rigid planning algorithms.

Wayve's end-to-end learning approach uses standard cameras and basic sensors rather than expensive LIDAR arrays, processes sensor inputs through a single large neural network that outputs driving commands, operates without detailed prior mapping of environments, and adapts to different vehicles and sensor configurations. The results demonstrate remarkable generalization capabilities that would have seemed impossible just a few years ago.

The global generalization results are particularly striking. Initial zero-shot performance when moving from the UK to the US was poor, with the system attempting to drive on the wrong side of the road. With just 100-500 hours of US data, however, performance rapidly improved to match UK levels. More impressively, zero-shot performance in Germany was significantly better than the initial US performance, showing clear knowledge transfer between driving environments.

Specific examples included successfully navigating the UK's Magic Roundabout, a ring of mini-roundabouts around a central roundabout where traffic circulates in both directions; handling the chaotic traffic circle around the Arc de Triomphe in Paris, with no lane markings or clear traffic rules; navigating extremely narrow Tokyo streets with complex pedestrian traffic; and managing steep, winding Italian mountain roads never seen in training data.

The technical capabilities extend beyond just driving. Wayve has integrated language models to provide explanations of driving decisions, enabling real-time commentary where the system can describe what it's seeing and why it's making specific decisions. Users can ask the system about its decision-making process, and language explanations help validate that the system is making decisions for the right reasons.

The commercial validation came through Wayve's partnership with Nissan for next-generation ProPilot systems, with integration planned within 2-3 years. This represents a scalable approach that works with existing vehicle hardware and provides a path from L2 driver assistance to L4 autonomy through software updates.

Symbotic's Thousand-Robot Orchestration

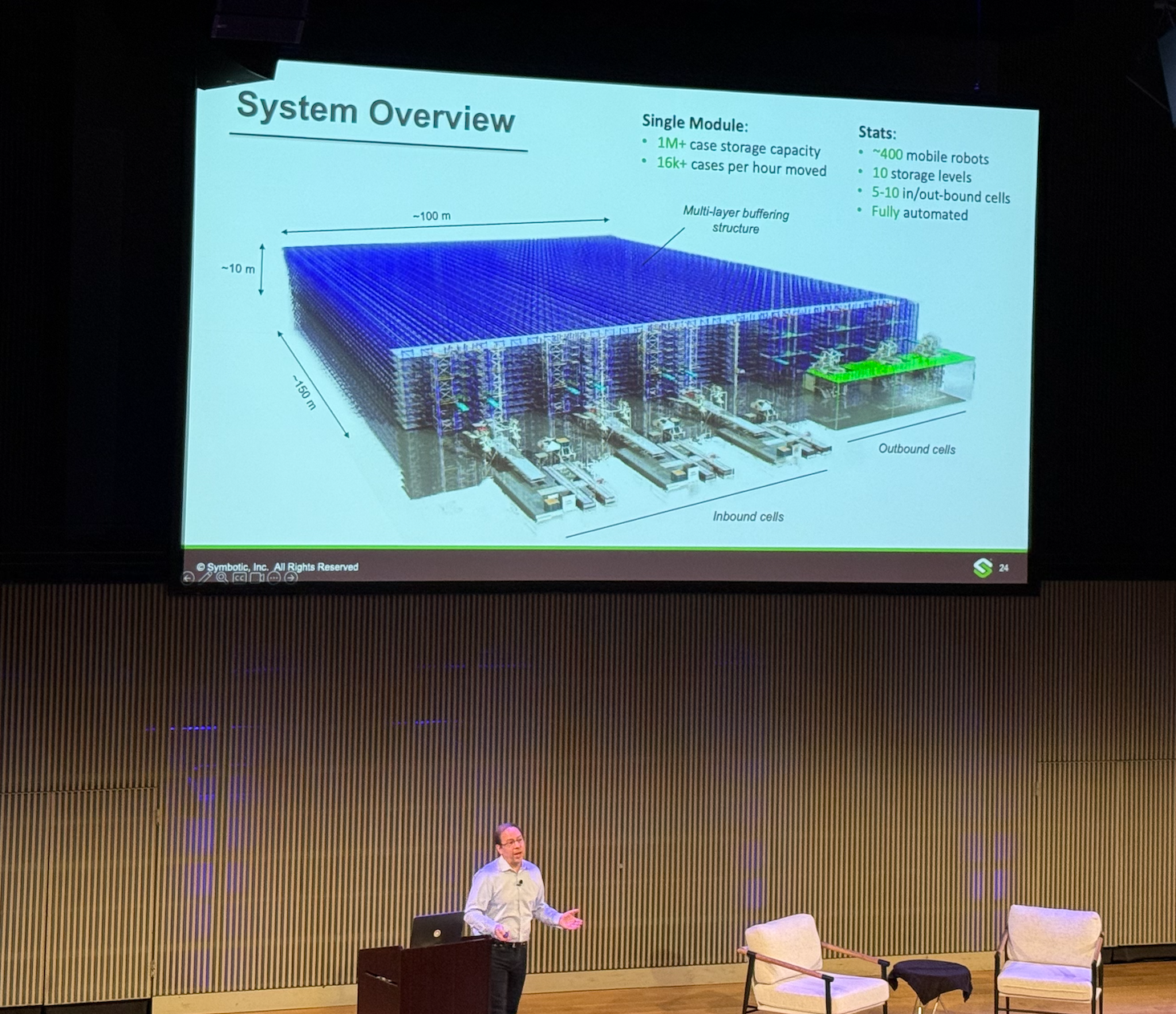

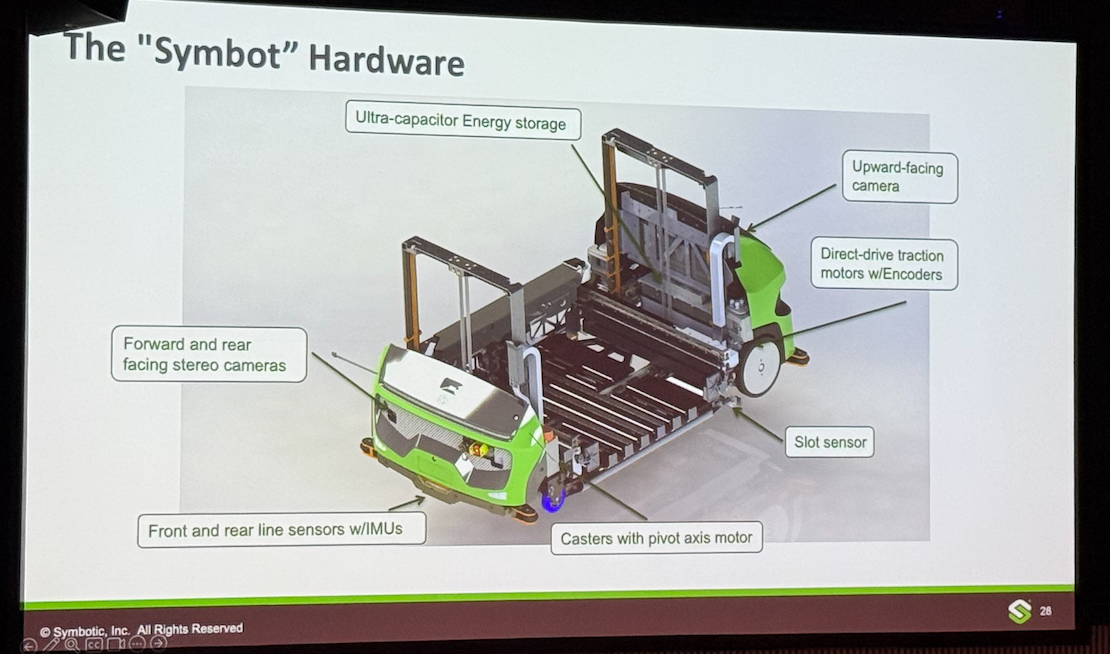

James Kuffner's presentation on Symbotic's warehouse automation provided insights into one of the most successful large-scale robotics deployments, with systems managing thousands of robots in real-time warehouse operations. The scale of coordination is striking: thousands of robots operating simultaneously in a single warehouse, millions of cartons processed per day, real-time coordination of robot movements and task allocation, and 24/7 operations with minimal human intervention.

The algorithmic innovations required for this scale include distributed path planning where each robot contributes to overall system optimization, predictive collision avoidance that anticipates and prevents conflicts before they occur, dynamic load balancing that redistributes work based on real-time system state, and fault-tolerant coordination that maintains system performance despite individual robot failures.
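
Symbotic's planning algorithms were not described at the implementation level. As a generic illustration of predictive collision avoidance, the sketch below uses a space-time reservation table, a standard technique in cooperative multi-robot path planning: each robot reserves the grid cells it will occupy at each future time step, and a later robot must wait or replan if its path would conflict. `ReservationTable` and `reserve_with_delay` are hypothetical names, and the grid model is a simplifying assumption.

```python
# Space-time reservation table for conflict-free multi-robot paths (generic sketch).
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


class ReservationTable:
    def __init__(self) -> None:
        self._reserved: Dict[Tuple[Cell, int], str] = {}

    def reserve_path(self, robot: str, path: List[Cell], start_t: int = 0) -> bool:
        """Reserve every (cell, time) on the path, or nothing if any step conflicts."""
        steps = [(cell, start_t + i) for i, cell in enumerate(path)]
        # Vertex conflict: another robot already holds this cell at this time.
        if any(key in self._reserved for key in steps):
            return False
        # Swap conflict: two robots exchanging cells between t and t + 1.
        for i in range(len(path) - 1):
            a, b = path[i], path[i + 1]
            t = start_t + i
            other = self._reserved.get((b, t))
            if other is not None and self._reserved.get((a, t + 1)) == other:
                return False
        for key in steps:
            self._reserved[key] = robot
        return True


def reserve_with_delay(table: ReservationTable, robot: str, path: List[Cell],
                       max_delay: int = 10) -> int:
    """Delay the start until the whole path is free; return the delay or -1."""
    for delay in range(max_delay + 1):
        if table.reserve_path(robot, path, start_t=delay):
            return delay
    return -1


if __name__ == "__main__":
    table = ReservationTable()
    table.reserve_path("A", [(0, 0), (1, 0), (2, 0)])
    # B would cross A's path at (1, 0) at t=1, so it waits one step.
    print(reserve_with_delay(table, "B", [(1, 1), (1, 0), (1, -1)]))
```

Because robots plan against reservations instead of reacting to one another, conflicts are resolved before anyone moves. This sketch does not hold a robot's goal cell after its path ends and uses a fixed planning order; production systems typically address both.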

Machine learning integration enables demand prediction for anticipating warehouse workload patterns, predictive maintenance for identifying robots likely to need maintenance before failures occur, performance optimization through continuous improvement of robot behavior based on operational data, and anomaly detection for identifying unusual patterns that might indicate problems.

The operational metrics demonstrate the commercial viability of large-scale robotics: 99.9%+ uptime across deployed systems, 50%+ improvement in warehouse throughput compared to manual operations, significant reduction in labor costs and workplace injuries, and rapid ROI for customers, typically within 2-3 years.

Rivian's Autonomous Manufacturing Vision

RJ Scaringe's keynote from Rivian provided insights into how autonomous systems are transforming automotive manufacturing, moving beyond traditional automation to intelligent systems that can adapt to changing production requirements. Rivian's approach to manufacturing automation represents a fundamental shift from rigid, pre-programmed systems to adaptive, AI-driven manufacturing that can handle the complexity of modern electric vehicle production.

The manufacturing challenges in electric vehicle production are particularly complex due to battery integration requiring precise handling of heavy, sensitive components, quality control demands that exceed traditional automotive standards, production flexibility needed to handle multiple vehicle configurations, and safety requirements for working with high-voltage systems. Rivian's autonomous manufacturing systems incorporate advanced computer vision for quality inspection and defect detection, collaborative robots that work safely alongside human workers, adaptive assembly systems that can handle different vehicle configurations, and predictive maintenance that minimizes production downtime.

The integration of AI throughout the manufacturing process enables real-time quality control with immediate feedback and correction, predictive analytics for supply chain optimization, adaptive scheduling based on demand and component availability, and continuous improvement through analysis of production data. Scaringe emphasized that the future of manufacturing lies not in replacing humans but in creating intelligent systems that augment human capabilities and enable more flexible, responsive production.

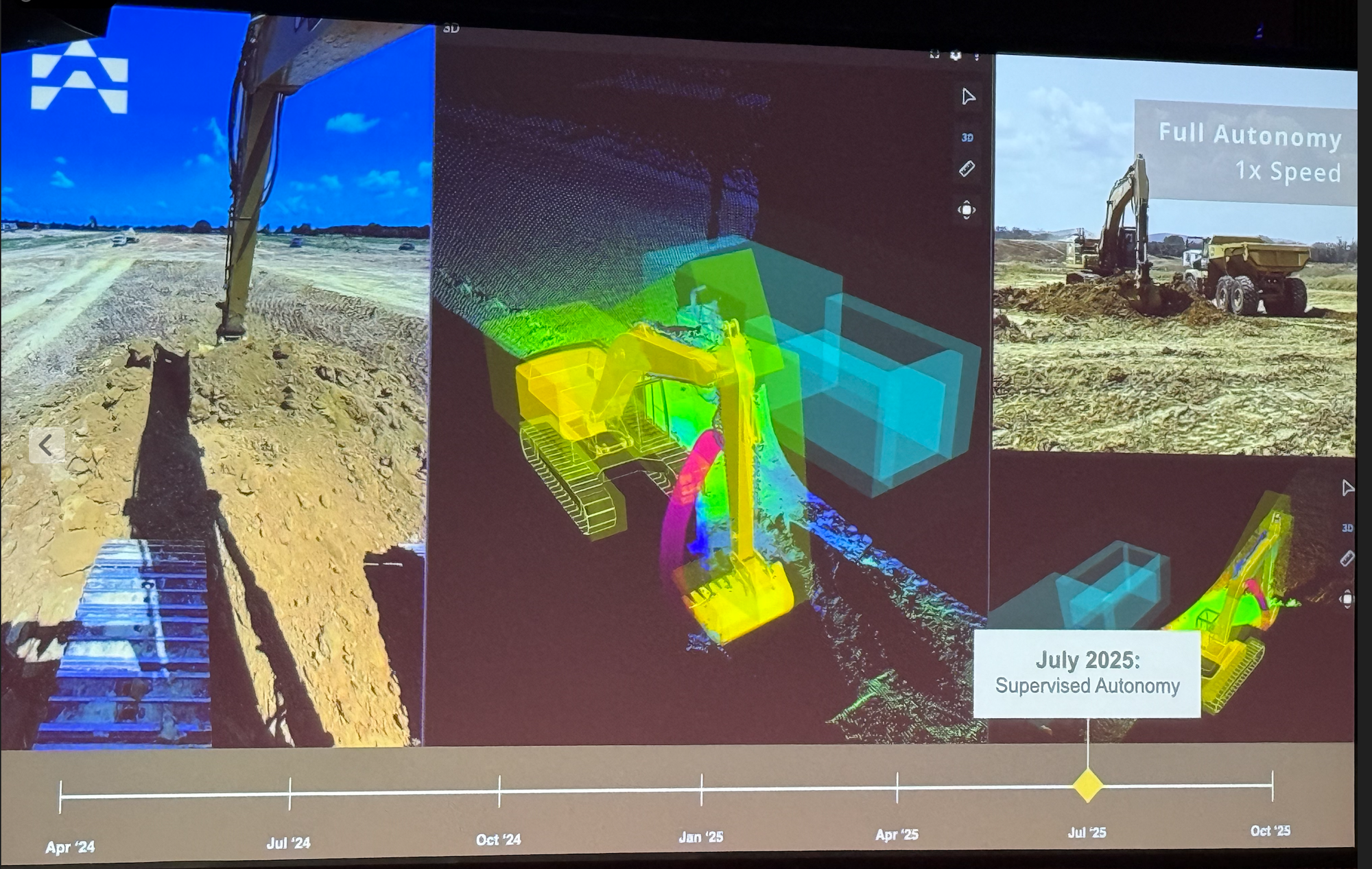

Bedrock Robotics: Underground Autonomy

Boris Sofman's presentation from Bedrock Robotics showcased one of the most challenging environments for autonomous systems: underground construction and tunneling operations. The technical challenges of operating in underground environments include GPS denial requiring alternative navigation systems, dynamic conditions where tunnel conditions change constantly, safety-critical operations where failures can have catastrophic consequences, and harsh environments with dust, vibration, and extreme temperatures.

Bedrock's autonomous systems combine LIDAR and inertial navigation for GPS-free positioning, real-time geological analysis to adapt to changing rock conditions, predictive maintenance to prevent equipment failures in remote locations, and safety systems that can operate independently of human oversight. Their approach focuses on augmenting human expertise rather than replacing it, with autonomous systems handling routine operations while human operators focus on complex decision-making and oversight.
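
Bedrock's navigation stack was not described in detail. A one-dimensional Kalman filter is a minimal illustration of how GPS-free positioning can work: inertial dead reckoning predicts position while its uncertainty grows, and a LIDAR position fix pulls the estimate back, weighted by how uncertain each source is. The sketch assumes LIDAR scan matching yields a position fix with a known variance; all names and values are hypothetical.

```python
# 1D Kalman filter fusing inertial dead reckoning with LIDAR position fixes.
from dataclasses import dataclass


@dataclass
class PositionEstimate:
    x: float    # position along the work area (m)
    var: float  # variance of the estimate (m^2)


def predict(est: PositionEstimate, velocity_mps: float, dt_s: float,
            process_var: float) -> PositionEstimate:
    """Dead-reckoning step: integrate inertial velocity; uncertainty grows."""
    return PositionEstimate(est.x + velocity_mps * dt_s, est.var + process_var)


def correct(est: PositionEstimate, lidar_x: float,
            lidar_var: float) -> PositionEstimate:
    """Blend in a LIDAR fix; the gain favors whichever source is less uncertain."""
    gain = est.var / (est.var + lidar_var)
    return PositionEstimate(est.x + gain * (lidar_x - est.x), (1 - gain) * est.var)


if __name__ == "__main__":
    est = PositionEstimate(x=0.0, var=1.0)
    est = predict(est, velocity_mps=1.0, dt_s=1.0, process_var=1.0)
    est = correct(est, lidar_x=2.0, lidar_var=2.0)
    print(est)
```

With equal variances the filter splits the difference, and each correction shrinks the variance, so inertial drift stays bounded as long as LIDAR fixes keep arriving.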

The commercial impact includes 40% improvement in tunneling speed compared to traditional methods, significant reduction in safety incidents through automated hazard detection, cost savings through optimized equipment utilization, and improved project predictability through better data collection and analysis. Bedrock's success demonstrates that autonomous systems can operate effectively in some of the most challenging physical environments.

Mytra: Warehouse Automation Innovation

Mike Brevoort's presentation from Mytra showcased innovative approaches to warehouse automation that go beyond traditional conveyor systems to create flexible, adaptive storage and retrieval systems. The warehouse automation challenges include handling diverse product types and sizes, adapting to changing inventory patterns, maximizing storage density while maintaining accessibility, and integrating with existing warehouse management systems.

Mytra's approach uses modular robotic systems that can reconfigure based on inventory needs, AI-driven optimization for storage location and retrieval paths, collaborative robots that work alongside human workers, and real-time inventory tracking with automated cycle counting. Their system architecture enables rapid deployment in existing warehouses without major infrastructure changes, scalable capacity that can grow with business needs, integration with existing WMS and ERP systems, and detailed analytics for operational optimization.
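
Mytra's optimization method was not described. A greedy slotting heuristic is a simple stand-in that shows the core idea behind storage-location optimization: give the most frequently picked items the slots with the shortest retrieval time. The function names and inputs are hypothetical.

```python
# Greedy slotting: frequent pickers get the fastest slots (generic sketch).
from typing import Dict


def assign_slots(pick_counts: Dict[str, int],
                 slot_travel_s: Dict[str, float]) -> Dict[str, str]:
    """Map each SKU to a slot, pairing most-picked SKUs with quickest slots."""
    skus = sorted(pick_counts, key=lambda s: pick_counts[s], reverse=True)
    slots = sorted(slot_travel_s, key=lambda s: slot_travel_s[s])
    if len(skus) > len(slots):
        raise ValueError("more SKUs than available slots")
    return dict(zip(skus, slots))


def expected_travel(pick_counts: Dict[str, int], slot_travel_s: Dict[str, float],
                    assignment: Dict[str, str]) -> float:
    """Total retrieval time: picks per SKU times travel time to its slot."""
    return sum(pick_counts[sku] * slot_travel_s[slot]
               for sku, slot in assignment.items())


if __name__ == "__main__":
    picks = {"A": 100, "B": 10, "C": 50}
    travel = {"s1": 30.0, "s2": 10.0, "s3": 20.0}
    plan = assign_slots(picks, travel)
    print(plan, expected_travel(picks, travel, plan))
```

When each item occupies exactly one slot, this pairing minimizes total pick-weighted travel time (by the rearrangement inequality); real systems add constraints such as item size, weight, and robot congestion, and re-run the assignment as demand shifts.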

The commercial results include 300% improvement in storage density compared to traditional shelving, 50% reduction in order fulfillment time, significant reduction in labor costs for inventory management, and rapid ROI typically within 18 months. Mytra's success demonstrates that warehouse automation can be both flexible and cost-effective, providing a compelling alternative to traditional automation approaches.

Dexterity: Structured Intelligence at Scale

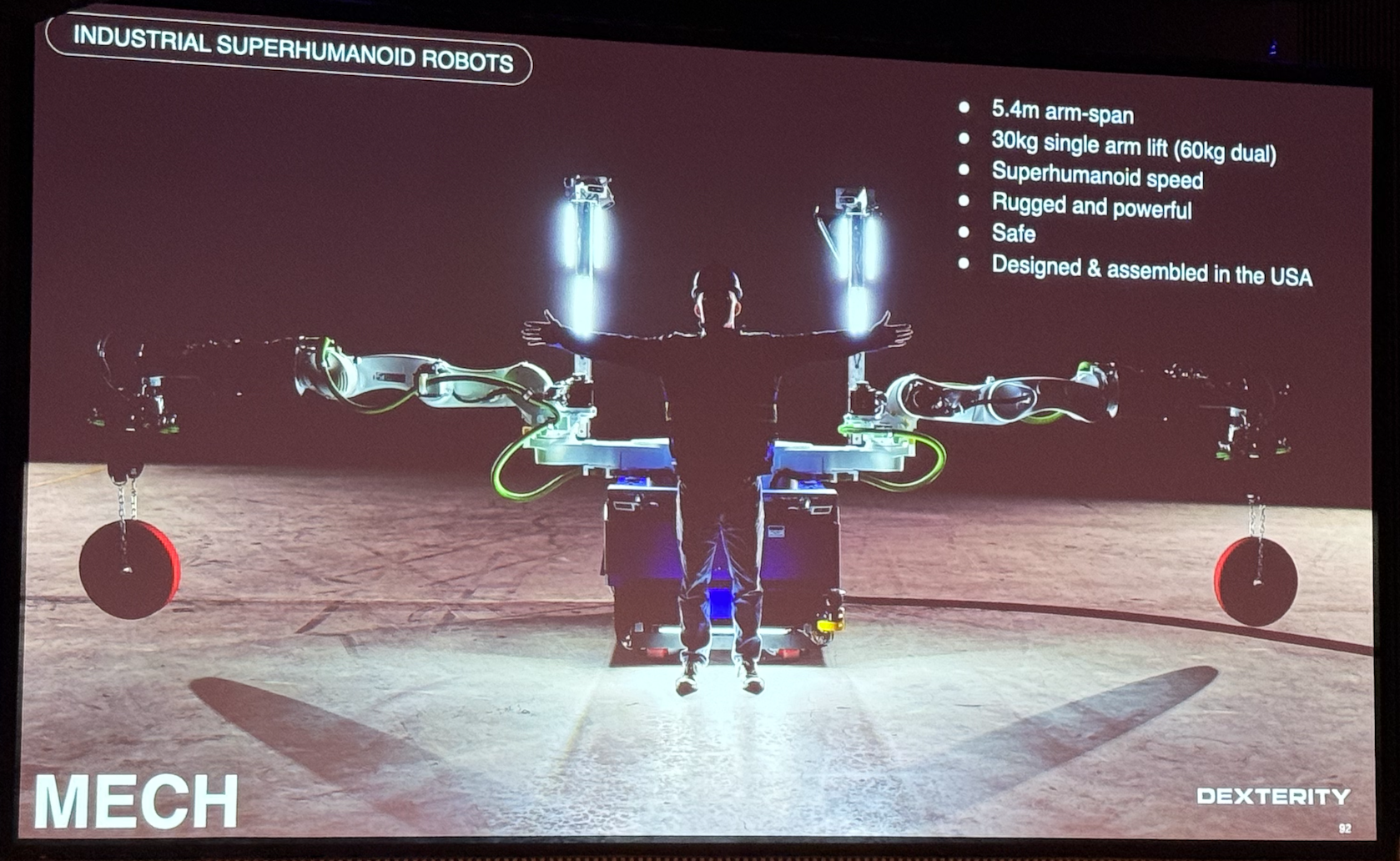

The presentation from Dexterity provided detailed insights into their structured approach to robotics intelligence, representing the counterpoint to end-to-end foundation models in the ongoing industry debate. Dexterity's philosophy centers on combining AI capabilities with engineered reliability to create systems that can operate in production environments with predictable performance.

Their technical approach combines computer vision for object recognition and manipulation planning, structured decision-making algorithms that provide explainable behavior, safety systems with multiple layers of redundancy, and continuous learning that improves performance while maintaining reliability guarantees. The structured approach enables detailed debugging and validation of robot behavior, predictable performance characteristics for production planning, integration with existing industrial systems and processes, and compliance with safety and regulatory requirements.

Dexterity's deployment results include millions of successful pick-and-place operations across multiple customer sites, 99.5%+ success rate in structured warehouse environments, integration with major logistics and e-commerce companies, and demonstrated ability to handle thousands of different product types. Their approach proves that structured intelligence can achieve the reliability and scalability required for large-scale commercial deployment.

The Structure vs Scale Debate

The panel discussion on "Structure vs Scale" represented one of the most important strategic debates in modern robotics, reflecting fundamental questions about the role of AI in robotics and the balance between engineered solutions and learned behaviors.

Evan Morikawa from Generalist made a passionate case for the foundation model approach, arguing that the same transformer architectures powering ChatGPT can understand physics and the physical world. His position centered on the belief that sufficient compute and data can solve robotics challenges, similar to language models, with architecture convergence pointing toward similar solutions across different domains.

The technical argument drew direct parallels to language model development: "The architectures are the same systems that drive ChatGPT. I believe that, within limits, a token is a token. These systems can understand the world and understand physics. It really is a function of getting enough data to train these models and putting enough compute into it."
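To make the "a token is a token" argument concrete: one common way to pass continuous motor commands through a transformer built for language is to discretize each action dimension into a fixed number of uniform bins, so that a joint command becomes one more token in the vocabulary. The sketch below is illustrative only and is not Generalist's method; the 256-bin count and the normalized [-1, 1] action range are assumptions.

```python
from typing import List


def tokenize(actions: List[float], low: float = -1.0, high: float = 1.0,
             bins: int = 256) -> List[int]:
    """Map each continuous action value to the index of its uniform bin (assumed 256 bins)."""
    width = (high - low) / bins
    tokens = []
    for a in actions:
        clipped = min(max(a, low), high)  # out-of-range commands are clipped first
        index = int((clipped - low) / width)
        tokens.append(min(index, bins - 1))  # a value equal to `high` belongs to the last bin
    return tokens


def detokenize(tokens: List[int], low: float = -1.0, high: float = 1.0,
               bins: int = 256) -> List[float]:
    """Map each token back to the center of its bin."""
    width = (high - low) / bins
    return [low + (t + 0.5) * width for t in tokens]


joint_command = [-1.0, -0.3, 0.0, 0.42, 1.0]
tokens = tokenize(joint_command)
recovered = detokenize(tokens)
print(tokens)
print(max(abs(a - r) for a, r in zip(joint_command, recovered)))
```

The round trip loses at most half a bin width per value, which is the precision cost of treating actions as tokens; finer bins shrink that error at the price of a larger vocabulary.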

Shitij Kumar from Dexterity provided the counterpoint, emphasizing the importance of engineered components for safety-critical applications that require predictable, verifiable behavior. Structured approaches allow for better debugging, validation, and component reuse while incorporating decades of robotics and control theory knowledge. Most importantly, structured systems can provide stronger reliability guarantees than black-box models.

Chris Paxton from Agility Robotics offered a nuanced perspective based on humanoid robotics experience, suggesting that different tasks may require different levels of structure versus learning. Humanoid robots operating around humans require a careful balance between capability and safety, and practical constraints such as development time and resources also shape architectural decisions.

The discussion revealed that both approaches have significant merits and limitations, with the optimal choice depending heavily on specific application requirements. Safety-critical applications may require structured approaches, at least initially, while the field is likely to evolve toward hybrid architectures combining benefits of both approaches.
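One way to picture the hybrid architectures the panel anticipated is a learned policy whose proposals pass through an engineered safety envelope before reaching the actuators: the learned part supplies behavior, and the structured part supplies verifiable limits plus an audit trail for debugging. This is a minimal one-dimensional sketch; the envelope limits, the stand-in policy, and every name in it are hypothetical and do not describe any panelist's system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class SafetyEnvelope:
    """Engineered limits (hypothetical values) that every learned proposal must satisfy."""
    min_pos: float
    max_pos: float
    max_speed: float
    # Audit trail: (step, proposed velocity, velocity actually sent to the actuator).
    interventions: List[Tuple[int, float, float]] = field(default_factory=list)

    def filter(self, step: int, position: float, proposed: float, dt: float) -> float:
        """Clamp speed, then shrink the command so the next position stays in bounds."""
        v = max(-self.max_speed, min(self.max_speed, proposed))
        next_pos = position + v * dt
        if next_pos > self.max_pos:
            v = (self.max_pos - position) / dt
        elif next_pos < self.min_pos:
            v = (self.min_pos - position) / dt
        if v != proposed:
            self.interventions.append((step, proposed, v))
        return v


def run_hybrid(policy: Callable[[float], float], envelope: SafetyEnvelope,
               start: float, steps: int = 40, dt: float = 0.1) -> List[float]:
    """Closed loop: the learned policy proposes a velocity, the envelope decides what is sent."""
    position = start
    trace = [position]
    for k in range(steps):
        v = envelope.filter(k, position, policy(position), dt)
        position += v * dt
        trace.append(position)
    return trace


def learned_policy(position: float) -> float:
    """Stand-in for a learned model that (wrongly) aims for a target outside the workspace."""
    return 2.0 * (1.5 - position)


envelope = SafetyEnvelope(min_pos=0.0, max_pos=1.0, max_speed=0.5)
trace = run_hybrid(learned_policy, envelope, start=0.0)
print(f"max position {max(trace):.3f}, interventions logged: {len(envelope.interventions)}")
```

The learned component can be retrained freely while the envelope stays small enough to verify by inspection, which is the kind of division of labor the structured camp argues for in safety-critical settings.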

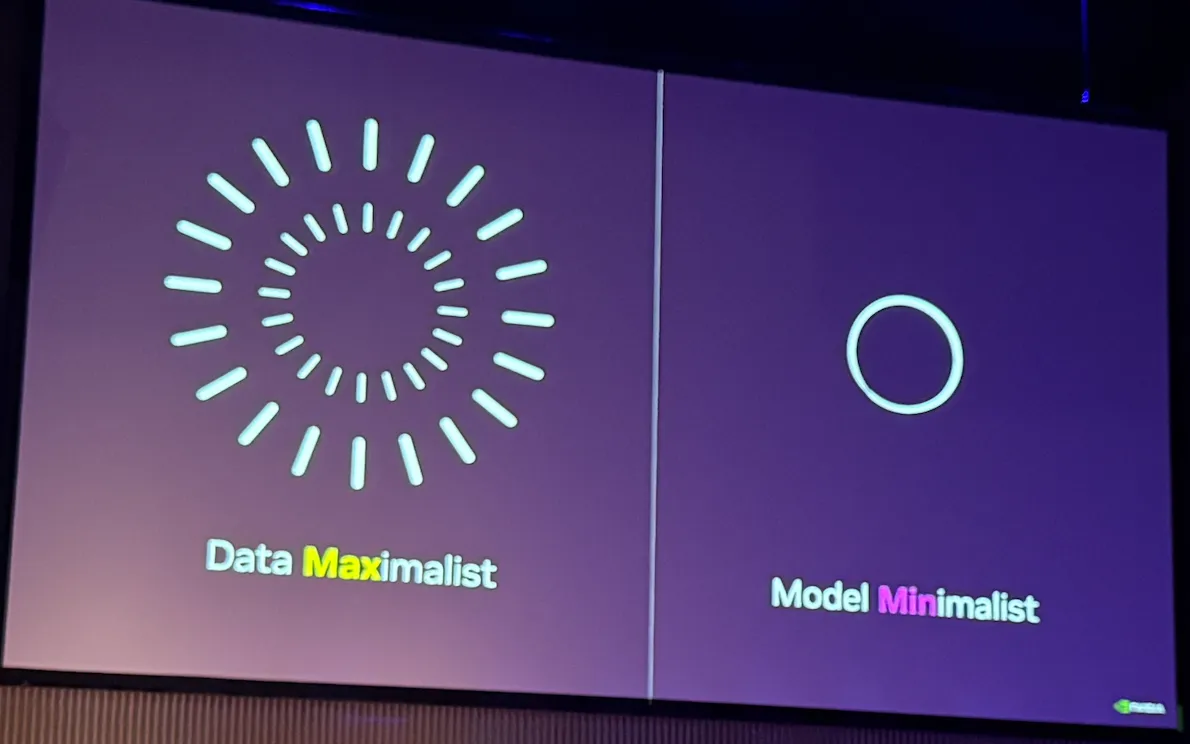

Physical Intelligence: The π0 Foundation Model Revolution

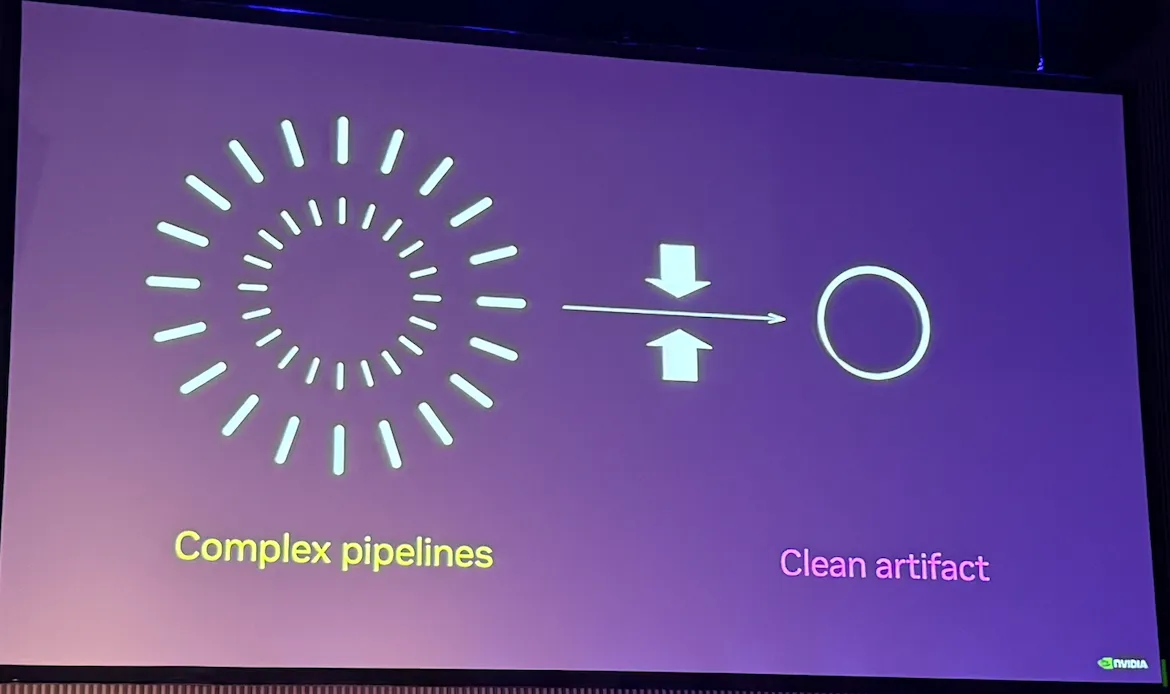

Kay Ke and Sergey Levine's presentation from Physical Intelligence represented one of the most significant technical breakthroughs showcased at Actuate 2025. Their π0 (pi-zero) foundation model demonstrates what many consider the first truly general-purpose robotic policy capable of controlling diverse robots across a wide range of dexterous manipulation tasks. Backed by over $400 million in funding from investors including Jeff Bezos, OpenAI, Sequoia Capital, and Lux Capital, Physical Intelligence is pursuing the ambitious goal of bringing general-purpose AI into the physical world.

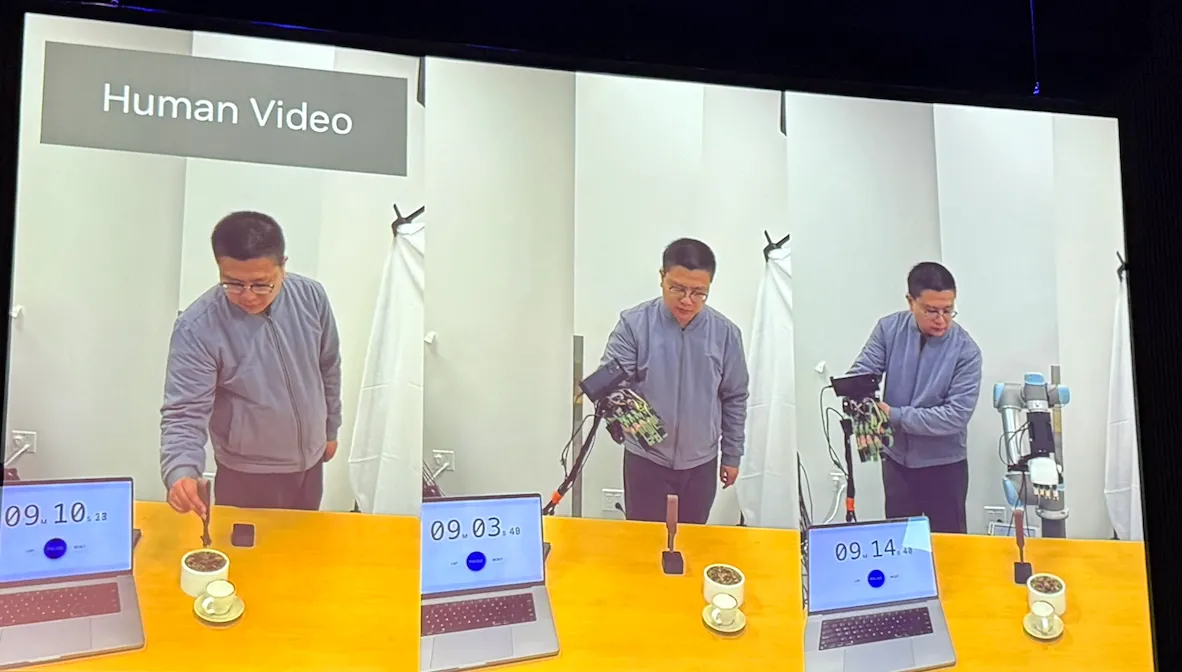

The company's approach addresses one of the fundamental challenges in robotics: while language models can leverage internet-scale text data, robotics has historically suffered from data scarcity. Traditional robotic systems require extensive manual engineering for each specific task and robot combination, making complex behaviors in unstructured environments like homes practically infeasible. Physical Intelligence's solution is to create a single generalist robot policy that can perform a wide range of skills across different robot platforms, requiring only modest amounts of task-specific data for specialization.

The π0 Architecture: Vision-Language-Action Integration

The technical innovation behind π0 centers on a novel architecture that combines internet-scale vision-language pretraining with continuous action outputs generated by flow matching, a variant of diffusion models. Starting from a pretrained 3-billion-parameter vision-language model (VLM), Physical Intelligence developed a method to augment the model's discrete language-token outputs with continuous, high-frequency motor commands produced at up to 50 Hz for real-time dexterous manipulation.

This vision-language-action flow matching model inherits semantic knowledge and visual understanding from internet-scale pretraining while learning to output precise motor commands through training on diverse robot interaction data. The approach represents a fundamental departure from traditional robotics approaches that separate perception, planning, and control into distinct modules.
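The flow-matching idea can be sketched in a few lines. During training, a clean action chunk a and Gaussian noise e are mixed along a straight path x_t = t*a + (1 - t)*e, and the network learns to predict the velocity a - e; at inference, the robot starts from noise and integrates the predicted velocity from t = 0 to t = 1 in a handful of Euler steps. The sketch assumes this generic linear-path (rectified-flow) convention, which may differ in sign and time direction from π0's own formulation, and it replaces the trained network with a hypothetical "oracle" velocity field that already knows the target chunk, so the sampler's mechanics can be checked without model weights. The 10-step count and the chunk shape are also assumptions.

```python
import random
from typing import Callable, List

Vector = List[float]


def interpolate(action: Vector, noise: Vector, t: float) -> Vector:
    """Point on the straight path from noise (t = 0) to the clean action chunk (t = 1)."""
    return [t * a + (1.0 - t) * e for a, e in zip(action, noise)]


def target_velocity(action: Vector, noise: Vector) -> Vector:
    """Regression target for the linear path: dx_t/dt = a - e, independent of t."""
    return [a - e for a, e in zip(action, noise)]


def flow_matching_loss(predicted: Vector, action: Vector, noise: Vector) -> float:
    """Mean squared error between a predicted velocity and the target velocity."""
    target = target_velocity(action, noise)
    return sum((p - u) ** 2 for p, u in zip(predicted, target)) / len(target)


def sample(velocity_fn: Callable[[Vector, float], Vector], dim: int,
           num_steps: int = 10, seed: int = 0) -> Vector:
    """Euler-integrate dx/dt = velocity_fn(x, t) from Gaussian noise at t = 0 to t = 1."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / num_steps
    for k in range(num_steps):
        v = velocity_fn(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def oracle_velocity(target_action: Vector) -> Callable[[Vector, float], Vector]:
    """Hypothetical stand-in for a trained network: the exact velocity toward one known chunk."""
    def velocity_fn(x: Vector, t: float) -> Vector:
        return [(a - xi) / (1.0 - t) for a, xi in zip(target_action, x)]
    return velocity_fn


# A toy "action chunk": 2 timesteps x 3 joints, flattened into one vector.
chunk = [0.10, -0.20, 0.30, 0.15, -0.25, 0.35]
result = sample(oracle_velocity(chunk), dim=len(chunk))
print([round(r, 6) for r in result])
```

With a real model, velocity_fn would be the network conditioned on camera images and the language instruction, and training would minimize flow_matching_loss over sampled (a, e, t) triples; the integration loop itself would be unchanged.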

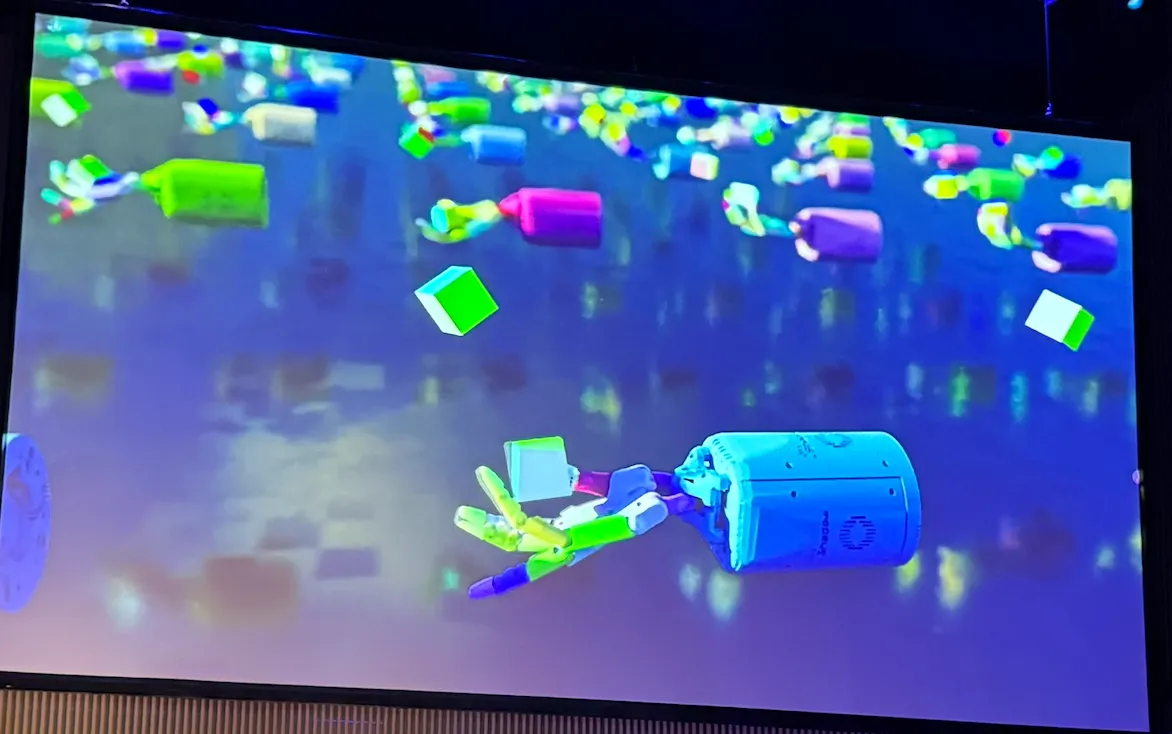

Cross-Embodiment Training at Unprecedented Scale

Physical Intelligence has assembled what they claim is the largest robot interaction dataset to date, combining open-source datasets like Open X-Embodiment with their own extensive collection of dexterous tasks performed across eight distinct robot platforms. Their training mixture includes UR5e arms, bimanual UR5e systems, Franka robots, bimanual Trossen manipulators, bimanual Arx systems, mobile Trossen platforms, and mobile Fibocom robots.

The diversity of tasks in their dataset is remarkable, covering laundry folding, coffee making, grocery bagging, table bussing, box assembly, cable routing, and trash disposal. Each task exhibits a wide variety of motion primitives, objects, and environmental conditions. The goal is not to solve any particular application but to provide the model with a general understanding of physical interactions - a foundation for physical intelligence.
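Mixing a large open dataset with smaller in-house collections raises a practical question: how often to sample each source so that the largest one does not drown out the rest. A common remedy, sketched here as an assumption rather than Physical Intelligence's documented recipe, is to sample each source with probability proportional to its size raised to a power alpha < 1. The episode counts and alpha = 0.5 below are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List


def mixture_weights(counts: Dict[str, int], alpha: float = 0.5) -> Dict[str, float]:
    """Sampling probability per source, proportional to count**alpha (alpha < 1 flattens the mix)."""
    scaled = {name: n ** alpha for name, n in counts.items()}
    total = sum(scaled.values())
    return {name: s / total for name, s in scaled.items()}


def sample_batch_sources(weights: Dict[str, float], batch_size: int, seed: int = 0) -> List[str]:
    """Draw the source of each training example in a batch according to the mixture weights."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=batch_size)


# Hypothetical episode counts, for illustration only.
counts = {"open_x_embodiment": 1_000_000, "ur5e": 40_000, "bimanual_trossen": 10_000}
weights = mixture_weights(counts)
for name, w in weights.items():
    raw = counts[name] / sum(counts.values())
    print(f"{name:18s} raw share {raw:.3f} -> sampled share {w:.3f}")
print(Counter(sample_batch_sources(weights, batch_size=1000)))
```

With alpha = 1 the mixture reduces to raw proportions; lowering alpha gives small, task-specific collections more influence at the cost of revisiting their examples more often.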

Skild AI: The Omni-Bodied Intelligence Vision

Deepak Pathak's presentation from Skild AI represented one of the most ambitious visions shared at Actuate 2025: building a truly general-purpose robotic brain that can control any robot for any task. Founded by Carnegie Mellon University faculty and backed by Amazon, NVIDIA, and other major investors with a $300 million Series A, Skild AI is pursuing what they call "omni-bodied intelligence" - a single foundation model that transcends hardware limitations.

The core philosophy behind Skild AI challenges the prevailing approach in robotics where AI systems are tightly coupled to specific robot designs and narrow tasks. Instead of building separate models for different robots, Skild's approach trains a unified model across diverse morphologies including quadrupeds, humanoids, tabletop arms, mobile manipulators, and even human demonstration data. This cross-embodiment training strategy dramatically expands the available training dataset while providing inherent robustness to hardware changes or failures.

The Skild Brain Architecture

The technical innovation centers on their hierarchical foundation model architecture called the "Skild Brain." The system operates at two levels: a low-frequency, high-level manipulation and navigation policy that provides strategic commands, and a high-frequency, low-level action policy that translates these commands into precise joint angles and motor torques. This separation allows the same high-level reasoning to work across dramatically different robot morphologies while the low-level policy handles the specifics of each hardware platform.
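The two-rate split described above can be illustrated with a toy loop. In this sketch, which is not Skild's code, a shared high-level policy runs at an assumed 5 Hz and emits a waypoint toward a goal, while an embodiment-specific low-level policy runs at an assumed 100 Hz and tracks that waypoint with its own gain and actuator limit. Everything is one-dimensional for brevity, whereas the system described in the talk outputs joint angles and motor torques; the Embodiment parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Embodiment:
    """Hypothetical per-robot parameters that only the low-level policy needs to know."""
    name: str
    gain: float      # proportional tracking gain (assumed)
    max_step: float  # largest position change per control tick (assumed actuator limit)


def high_level_policy(position: float, goal: float, reach: float = 0.5) -> float:
    """Low-frequency command shared by every embodiment: the next waypoint toward the goal."""
    delta = max(-reach, min(reach, goal - position))
    return position + delta


def low_level_policy(position: float, waypoint: float, body: Embodiment) -> float:
    """High-frequency, embodiment-specific step that tracks the current waypoint."""
    step = body.gain * (waypoint - position)
    step = max(-body.max_step, min(body.max_step, step))
    return position + step


def run(body: Embodiment, goal: float, high_hz: int = 5, low_hz: int = 100,
        seconds: float = 4.0):
    """Run both loops on one clock; the high-level policy fires every low_hz // high_hz ticks."""
    ticks_per_command = low_hz // high_hz
    position, waypoint, commands = 0.0, 0.0, 0
    for tick in range(int(seconds * low_hz)):
        if tick % ticks_per_command == 0:
            waypoint = high_level_policy(position, goal)
            commands += 1
        position = low_level_policy(position, waypoint, body)
    return position, commands


for body in (Embodiment("tabletop_arm", gain=0.3, max_step=0.05),
             Embodiment("quadruped", gain=0.8, max_step=0.1)):
    final, commands = run(body, goal=2.0)
    print(f"{body.name}: final position {final:.4f} after {commands} high-level commands")
```

Both toy robots reach the same goal from the same kind of high-level commands; only the low-level parameters differ, which is the property that lets one high-level policy serve many morphologies.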

What distinguishes Skild AI from other self-described "robotics foundation models", Pathak argued, is that it builds a robotics foundation model from the ground up rather than adapting an existing vision-language model. As Pathak explained, many competitors start with existing VLMs and add minimal real-world robot data, creating what he termed "Potemkin village" models that have semantic understanding but lack grounded actionable information. These models can handle semantic generalization in simple pick-and-place tasks but fail to develop true physical common sense.

Scaling Through Simulation and Internet Data

The fundamental challenge in robotics foundation models is the scarcity of action data at the scale required for true generalization. Pathak's team, drawing from over a decade of research in self-supervised learning, curiosity-driven exploration, and sim-to-real transfer, has developed a multi-pronged approach to achieve the trillions of examples needed for robust foundation models.

Their strategy combines large-scale simulation environments that can generate diverse robotic interaction scenarios, internet video data that provides rich examples of physical manipulation and navigation, and targeted real-world data collection for post-training refinement. This approach acknowledges that real-world data collection alone cannot achieve the necessary scale - even if the entire global population collected robotics data, it would take years to reach the hundred trillion trajectories needed for robust generalization.
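The arithmetic behind this claim is easy to check. Dividing the quoted hundred trillion trajectories by a world population of roughly 8 billion leaves 12,500 trajectories per person. The collection rate of 10 trajectories per person per day used below is an illustrative assumption, not a figure from the talk; under it, the effort would take about 3.4 years even with every person on Earth participating.

```python
def years_to_collect(target: float, collectors: float, per_person_per_day: float) -> float:
    """Years needed if every collector records a fixed number of trajectories each day."""
    days = target / (collectors * per_person_per_day)
    return days / 365.0


TARGET_TRAJECTORIES = 1e14   # "hundred trillion trajectories", as quoted in the talk
WORLD_POPULATION = 8e9       # rough global population
PER_PERSON_PER_DAY = 10      # illustrative assumption, not a figure from the talk

per_person = TARGET_TRAJECTORIES / WORLD_POPULATION
years = years_to_collect(TARGET_TRAJECTORIES, WORLD_POPULATION, PER_PERSON_PER_DAY)
print(f"{per_person:,.0f} trajectories per person, about {years:.1f} years")
# prints: 12,500 trajectories per person, about 3.4 years
```

Doubling the assumed daily rate halves the time, but no plausible rate brings real-world collection alone anywhere near the scale that simulation and internet video can supply.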

Industry Transformation and Future Outlook

The Maturation Indicators

Several indicators throughout the conference pointed to the robotics industry's rapid maturation. The $120 million Series A for Dyna Robotics ranks among the larger Series A rounds in robotics, indicating significant investor confidence in the market's potential. Major corporate partnerships like Nissan-Wayve show traditional industries embracing robotics innovation rather than viewing it as a distant future possibility.

Multiple companies discussed proactive engagement with regulators, showing industry maturation beyond pure technology development toward understanding the broader ecosystem requirements for successful deployment. The consistent mention of talent competition across presentations indicates a growing and competitive market where skilled robotics engineers are in high demand.

Perhaps most importantly, the conversations had fundamentally shifted from technical feasibility to commercial viability. Companies were discussing deployment metrics, customer satisfaction, regulatory compliance, and business model optimization rather than just demonstrating that their technology works.

Technical Breakthroughs and Reliability Achievements

The conference showcased multiple companies achieving production-level reliability that would have seemed impossible just a few years ago. Dyna Robotics' 99.4% success rate over 24 hours of continuous operation, Diligent Robotics' 1 million successful hospital tasks, and Zipline's 100 million autonomous miles with zero ground injuries represent concrete evidence that robotics has moved beyond demonstration to commercial-grade performance.

The generalization capabilities demonstrated by several companies are equally impressive. Wayve's use of a single model to drive in multiple countries, Physical Intelligence's foundation models working across different robot platforms, and Dyna Robotics' zero-shot performance in new environments show that the long-promised benefits of AI are finally being realized in physical systems.

Large-scale deployments are proving commercial viability: Symbotic runs thousands of robots in single warehouses, Chef Robotics has served millions of bowls, and Serve Robotics is scaling toward a planned 2,000-robot deployment. These are not pilot programs or demonstrations but commercial operations generating real revenue and providing real value to customers.

The Path Forward

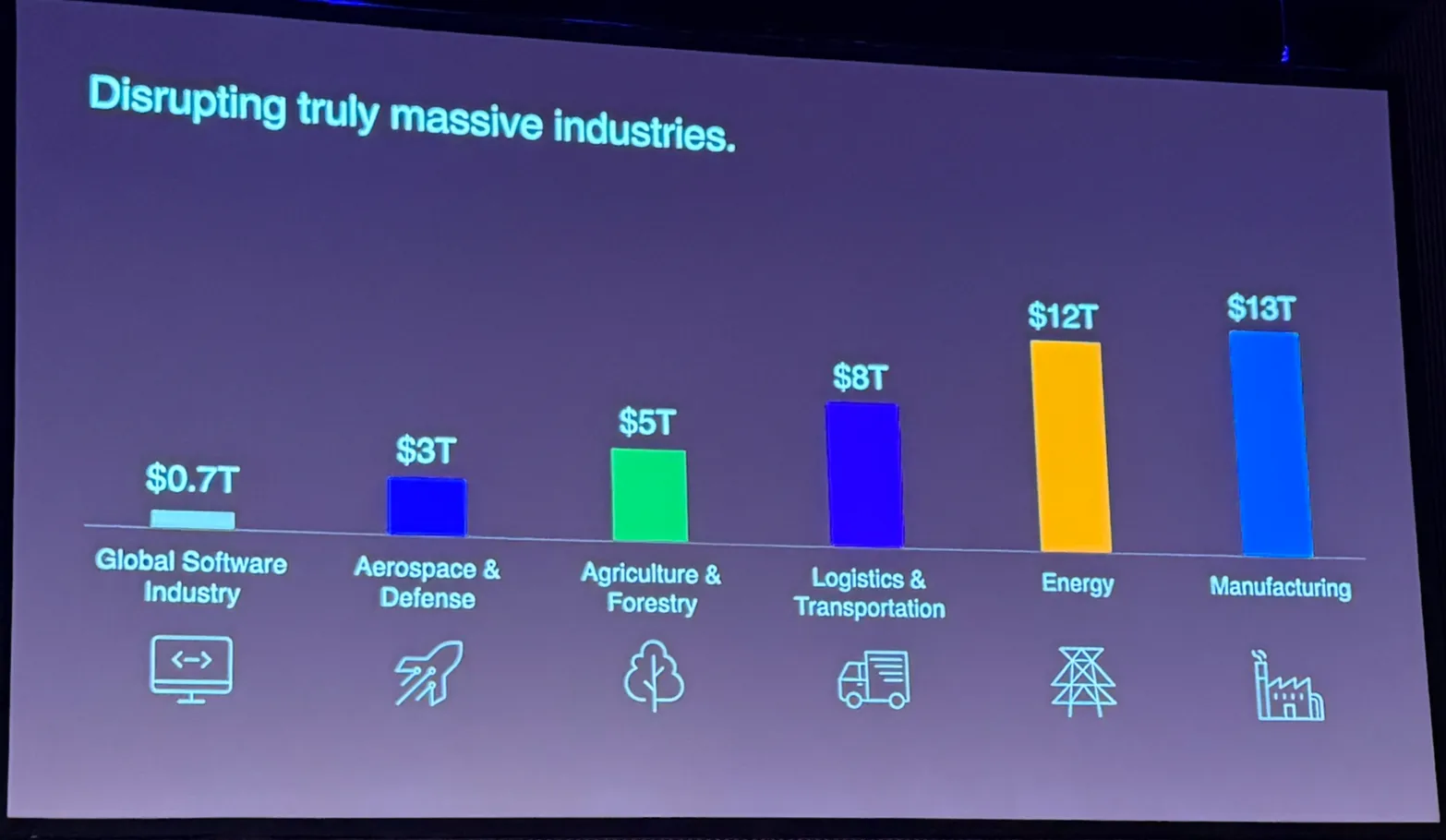

The conference made clear that we're at a critical inflection point in robotics development. The next 2-3 years will likely determine which architectural approaches, business models, and deployment strategies succeed at scale. Companies that master the combination of technical excellence, safety frameworks, and real-world deployment will shape the future of physical AI.

The debates and discussions revealed a maturing industry grappling with fundamental questions about architecture, safety, and deployment strategies. The success stories shared throughout the conference provide concrete evidence that the physical AI revolution is not just coming - it's here. The question is no longer whether robots will transform our world, but how quickly and in what ways.

The lessons learned and shared at Actuate 2025 will undoubtedly influence the next generation of robotic systems and the companies building them. The physical AI revolution is underway, and the companies and technologies showcased at this conference are leading the charge toward a future where robots are not just tools but collaborative partners in human endeavors.
