Embodied AI and the Path to a "ChatGPT Moment" in Robotics


Table of Contents

- Introduction and Background

- Current State of Embodied AI in Robotics

- Fundamental Differences: Language Models vs. Robotics

- The Bullish Case: Why a Robotics Breakthrough May Be Imminent

- The Bearish Case: Why a "ChatGPT Moment" in Robotics Is Challenging

- Sensor Technology and Real-Time Data Processing Challenges

- Conclusion and Future Outlook

Introduction and Background

Purpose of the Report

This report examines the current state of embodied AI in robotics and analyzes the prospects for a transformative "ChatGPT moment" in the field. It specifically addresses why applying the same approaches that led to breakthroughs in language models presents unique challenges in robotics, with particular attention to sensors, inputs/outputs, real-time data processing, and the physical constraints of operating in the real world. By presenting both optimistic and pessimistic perspectives, this report aims to provide a balanced assessment of when and how robotics might achieve its own revolutionary breakthrough.

Defining Embodied AI

Embodied AI refers to artificial intelligence systems that are integrated into physical forms, allowing them to sense, interact with, and learn from their environment through direct physical experience. Unlike purely digital AI systems that operate in virtual environments, embodied AI must navigate the complexities and unpredictability of the physical world. This integration of AI with physical embodiment creates both unique capabilities and significant challenges.

The concept of embodied AI has roots in the "embodiment hypothesis," which suggests that intelligence emerges from the interaction between an agent and its environment. This idea challenges traditional AI approaches that emphasize abstract symbolic reasoning divorced from physical context. Instead, embodied AI recognizes that cognition is deeply influenced by physical experience and sensorimotor capabilities.

The Concept of a "ChatGPT Moment"

The term "ChatGPT moment" refers to a transformative breakthrough that dramatically accelerates adoption and capabilities in a field, similar to how ChatGPT revolutionized natural language processing and brought conversational AI to mainstream awareness in late 2022. This moment represented the culmination of years of incremental progress in language models that suddenly crossed a threshold of usability and capability, creating widespread impact across industries and society.

For robotics, a comparable moment would involve robots suddenly demonstrating levels of adaptability, dexterity, and general-purpose intelligence that enable them to perform a wide range of tasks in unstructured environments with minimal human intervention. Such a breakthrough would likely transform industries from manufacturing and logistics to healthcare and domestic services, potentially reshaping human-machine relationships and economic structures.

Current Landscape in AI and Robotics

As of 2025, the AI landscape is dominated by large language models (LLMs) and generative AI systems that have demonstrated remarkable capabilities in understanding and generating text, code, images, and other digital content. These systems benefit from massive datasets, powerful computational resources, and architectures that scale effectively with more data and parameters.

The robotics landscape, meanwhile, shows a more uneven pattern of development. Industrial robots excel in structured environments performing repetitive tasks, while more advanced research prototypes demonstrate impressive but often specialized capabilities. Companies like Boston Dynamics, Figure AI, and Unitree have showcased robots with increasing agility and dexterity, while research labs continue to push the boundaries of what robots can perceive and manipulate.

Recent developments in embodied AI have begun to bridge the gap between these fields, with efforts to apply techniques from language models to robotics and to develop more general-purpose robotic platforms. However, fundamental differences in how these systems operate and learn present significant challenges to achieving a ChatGPT-like breakthrough in robotics.

This report examines these differences in detail, explores the arguments for and against an imminent robotics revolution, and analyzes the specific technical challenges that must be overcome to realize the full potential of embodied AI.

Current State of Embodied AI in Robotics

Historical Development of Embodied AI

The journey of embodied AI began in the 1960s with "Shakey," a pioneering robot developed by Stanford Research Institute (SRI) between 1966 and 1972. Named for its trembling movements, Shakey is widely regarded as the first mobile robot able to perceive its surroundings and reason about its own actions. This early experiment marked a crucial shift as artificial intelligence moved beyond abstract computation into physical embodiment.

The concept gained significant momentum in the 1990s following Rodney Brooks's influential 1991 paper, "Intelligence without representation." Brooks challenged traditional AI approaches by proposing that intelligence could emerge from direct environmental interaction rather than complex internal models. This perspective shift laid the groundwork for modern embodied AI by emphasizing the importance of physical engagement with the world.

Over subsequent decades, progress in machine learning—particularly deep learning and reinforcement learning—has enabled robots to enhance their capabilities through experiential learning. This evolution has transformed robotics from pre-programmed machines to systems capable of adaptation and improvement through interaction with their environments.

Key Players and Recent Advancements

As of 2025, several companies and research institutions stand at the forefront of embodied AI development:

- Figure AI has made headlines with Helix, a vision-language-action (VLA) model for controlling its humanoid robots. In early 2025 Figure ended its partnership with OpenAI, citing a breakthrough in its in-house AI and claiming its internal capabilities had surpassed what external models could provide. Figure's robots have demonstrated full upper-body control, multi-robot collaboration, and versatile object handling.

- Unitree Robotics has developed the G1 humanoid robot, which combines remarkable agility and fine motor control with a relatively affordable price point of $16,000. Its dexterity and movement capabilities were previously seen only in much more expensive systems.

- Boston Dynamics continues to advance with robots like Atlas and Spot, which demonstrate impressive mobility and task execution in varied environments. Its focus on agility and stability in unstructured environments has set industry benchmarks.

- NVIDIA has introduced Cosmos, a suite of world foundation models designed to enhance robot training. Unlike language models that generate text, Cosmos generates video of physical scenes that can serve as synthetic training data, helping machines better understand and navigate the physical world. Trained on 20 million hours of human activity footage, it lets developers simulate real-world scenarios for robots to learn from.

- Academic institutions like UC Berkeley, Stanford, and MIT continue to push boundaries in embodied AI research, developing new algorithms for robot learning and perception.

Current Capabilities and Limitations

Modern embodied AI systems demonstrate several impressive capabilities:

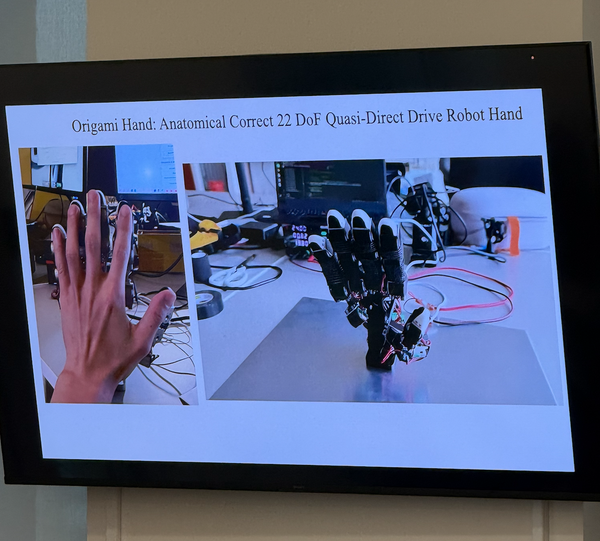

- Improved mobility and dexterity: Robots can now navigate complex terrains, climb stairs, and perform dynamic movements like jumps and flips.

- Enhanced perception: Advanced sensor integration allows robots to build detailed models of their environments.

- Task learning: Some systems can learn new tasks through demonstration or reinforcement learning.

- Limited adaptability: More advanced robots can adjust to minor environmental changes and unexpected obstacles.

However, significant limitations remain:

- Generalization challenges: Most robots excel at specific tasks but struggle to transfer skills to new contexts or tasks.

- Data inefficiency: Unlike humans who can learn from few examples, robots typically require extensive training data.

- Physical constraints: Power limitations, mechanical wear, and physical design constraints restrict operational capabilities.

- Real-time processing bottlenecks: Processing sensory data and making decisions in real-time remains computationally challenging.

- Cost and complexity: Advanced robotic systems remain expensive to build and maintain, limiting widespread adoption.

Notable Examples of Embodied AI Systems

Several systems exemplify the current state of embodied AI:

- Figure's humanoid robots demonstrate advanced manipulation capabilities, allowing them to handle unfamiliar objects and perform complex tasks through the company's Helix vision-language-action model.

- Phoenix, developed by Sanctuary AI, is a general-purpose humanoid robot designed to interact with the physical world and make autonomous decisions. It incorporates advanced sensors, actuators, and AI systems for natural interaction with its surroundings.

- Unitree's G1 humanoid robot showcases improved fine-motor control and agility at a relatively accessible price point, suggesting potential for wider adoption.

- Spot from Boston Dynamics exemplifies adaptable mobility in varied environments, with applications ranging from construction site monitoring to remote inspection of hazardous areas.

- Warehouse robots like those used by Amazon demonstrate practical applications of embodied AI in controlled environments, optimizing logistics operations through autonomous navigation and item handling.

These examples illustrate both the impressive progress made in embodied AI and the specialized nature of current solutions. While no system yet demonstrates the general-purpose intelligence and adaptability that would constitute a "ChatGPT moment" for robotics, the field continues to advance rapidly through both incremental improvements and targeted breakthroughs.

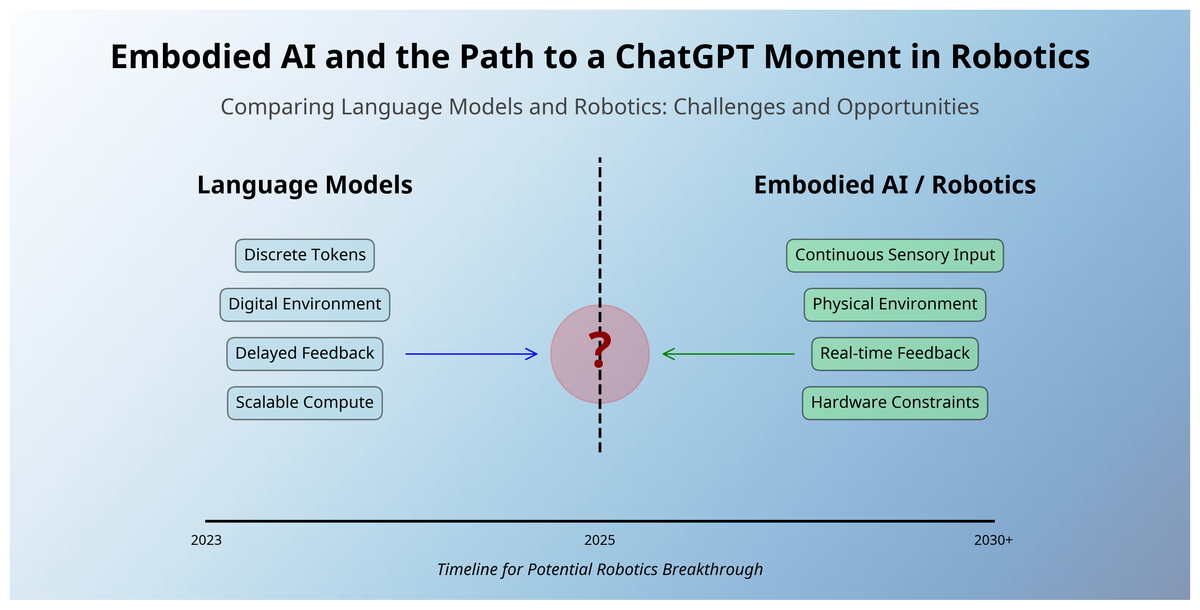

Fundamental Differences: Language Models vs. Robotics

The gap between large language models (LLMs) and embodied AI in robotics represents more than just a difference in application domains—it reflects fundamental disparities in how these systems process information, interact with their environments, and learn from experience. Understanding these differences is crucial to recognizing why the path to a "ChatGPT moment" in robotics faces unique challenges.

Processing Paradigms: Discrete Tokens vs. Continuous Sensory Inputs

Language Models: Discrete, Symbolic Processing

Language models operate in a purely digital realm, processing sequences of discrete tokens (words, subwords, or characters) and generating output one token at a time. This discrete nature creates several advantages:

- Clear boundaries between units of information

- Finite vocabulary with well-defined relationships

- Predictable input and output formats

- Predictable computational cost per generated token

The symbolic nature of language allows LLMs to operate in a relatively constrained space where patterns, though complex, follow linguistic rules and statistical regularities that can be learned from text corpora.

Robotics: Continuous, Multimodal Sensory Processing

In stark contrast, embodied AI systems must process continuous streams of sensory data from multiple modalities:

- Visual information (often from multiple cameras)

- Tactile feedback from various surfaces and materials

- Proprioceptive data about joint positions and movements

- Inertial measurements for balance and orientation

- Environmental sounds and other sensory inputs

This continuous, multimodal nature creates significant challenges:

- No clear boundaries between units of information

- Effectively unbounded sensor states and combinations

- Unpredictable variations in input quality and relevance

- Variable computational demands based on environmental complexity

As Tim Urista notes in his analysis of scaling laws in embodied AI, "While LLMs work with discrete tokens in a purely digital space, embodied AI systems must process continuous sensory inputs, handle real-time feedback, and generate precise motor controls."
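Some vision-language-action models partly bridge the two paradigms by discretizing each continuous action dimension into a fixed number of bins. A sequence model can then emit actions as tokens. The sketch below assumes uniform bins over a known joint range. The 256-bin default, the joint range, and the function names are illustrative assumptions. Decoding back to the bin center also shows the cost of the trick: every value picks up as much as half a bin width of quantization error.

```python
from typing import List


def tokenize_action(values: List[float], low: float, high: float, bins: int = 256) -> List[int]:
    """Map continuous values in [low, high] to integer tokens 0..bins-1 (uniform bins)."""
    width = (high - low) / bins
    tokens = []
    for v in values:
        clipped = min(max(v, low), high)      # out-of-range readings are clamped
        index = int((clipped - low) / width)
        tokens.append(min(index, bins - 1))   # v == high would otherwise map to `bins`
    return tokens


def detokenize_action(tokens: List[int], low: float, high: float, bins: int = 256) -> List[float]:
    """Map tokens back to the center of their bins."""
    width = (high - low) / bins
    return [low + (t + 0.5) * width for t in tokens]


# Hypothetical joint angles (radians) for a joint limited to [-1, 1].
angles = [-1.0, 0.0, 0.5, 1.0]
tokens = tokenize_action(angles, -1.0, 1.0)
recovered = detokenize_action(tokens, -1.0, 1.0)
print(tokens)  # [0, 128, 192, 255]
```

The round trip is lossy by design. A 2-radian range split into 256 bins cannot represent angles more finely than about 0.008 radians. A finer resolution means a larger vocabulary.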

Feedback Mechanisms: Delayed vs. Real-Time

Language Models: Delayed, Text-Based Feedback

Language models typically receive feedback in the form of:

- Human evaluations after generation

- Reinforcement learning from human feedback (RLHF)

- Comparison against reference outputs

- Loss functions calculated over entire sequences

This feedback is often delayed, which allows careful evaluation and optimization without strict time constraints. Inference is similarly forgiving: a model can take seconds, or even minutes, to generate a response without significantly reducing its usefulness.

Robotics: Immediate, Physical Feedback

Embodied AI systems must respond to immediate physical feedback:

- Contact forces when touching objects

- Balance adjustments when moving

- Collision avoidance in dynamic environments

- Real-time adaptation to changing conditions

As noted in Encord's analysis of embodied AI, "Processing this data quickly is crucial. Therefore real-time data processing solutions (e.g., GPUs, neuromorphic chips) are required to allow the AI to make immediate decisions, such as avoiding obstacles or adjusting its actions as the environment changes."

This requirement for real-time processing creates fundamental constraints on model complexity and decision-making approaches. A robot that takes too long to process sensory information might fall over, collide with objects, or fail to respond appropriately to dynamic situations.
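The consequences of a missed deadline are easiest to see in the structure of a fixed-rate control loop. The sketch below is a minimal illustration in Python that rests on several assumptions. The function name and the restart-on-overrun policy are hypothetical choices. Also, `time.sleep` offers no hard real-time guarantees. That is one reason production controllers often run on real-time operating systems or dedicated microcontrollers.

```python
import time
from typing import Callable


def run_fixed_rate_loop(step: Callable[[], None], period_s: float, cycles: int) -> int:
    """Call `step` once per period and return how many cycles missed their deadline.

    Each cycle's deadline is the end of its period. On an overrun, the schedule
    restarts from the current time instead of running a burst of catch-up cycles.
    """
    overruns = 0
    next_deadline = time.monotonic() + period_s
    for _ in range(cycles):
        step()                                # one sense -> decide -> act cycle
        now = time.monotonic()
        if now > next_deadline:
            overruns += 1                     # a real controller might trigger a safe stop here
            next_deadline = now + period_s
        else:
            time.sleep(next_deadline - now)   # idle until the next cycle is due
            next_deadline += period_s
    return overruns
```

Consider a 20 Hz loop (50 ms period) whose processing step takes about 80 ms. It misses every deadline. In a language model, the same slowdown would only lengthen the wait for a response.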

Environmental Interaction: Digital vs. Physical World

Language Models: Controlled Digital Environment

Language models operate in environments that are:

- Perfectly reproducible

- Free from physical constraints

- Consistent across instances

- Bounded by clear rules of syntax and semantics

These properties allow for efficient training through techniques like massive parallelization, exact reproduction of scenarios, and controlled variation of inputs.

Robotics: Unpredictable Physical Reality

Embodied AI systems must navigate environments that are:

- Highly variable and unpredictable

- Subject to physical laws and constraints

- Potentially dangerous to the system or surroundings

- Impossible to perfectly reproduce for training

As the Coatue analysis on robotics points out, "Unlike other modalities in the digital world, robotics is severely bottlenecked by a lack of quality training data, a major gating factor to achieving general-purpose intelligence."

The physical nature of robotics also introduces safety concerns and hardware limitations that don't exist for language models. A language model generating inappropriate text has very different consequences than a robot making a physical error that could damage property or harm people.

Computational Requirements and Constraints

Language Models: Scalable Compute with Clear Scaling Laws

Language models benefit from:

- Well-established scaling laws relating model size to performance

- Ability to distribute computation across multiple devices

- A single, well-defined training objective (next-token prediction)

- Clear diminishing returns that can guide resource allocation

These properties have enabled systematic scaling of language models from millions to trillions of parameters, with predictable improvements in training loss that have translated into broad gains in capability.
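The scaling-law idea can be made concrete. Suppose loss falls as a power law in parameter count N, so that L(N) ≈ a·N^(−α). Then log L is a straight line in log N, and constants fitted on small models can be used to forecast larger ones. The sketch below fits a and α by ordinary least squares in log-log space. The data are synthetic, generated from a = 10 and α = 0.08. They are not measurements of any real model family.

```python
import math
from typing import List, Tuple


def fit_power_law(sizes: List[float], losses: List[float]) -> Tuple[float, float]:
    """Fit loss ≈ a * size**(-alpha) by least squares on log-transformed data.

    Taking logs turns the power law into a straight line:
    log(loss) = log(a) - alpha * log(size).
    """
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)


# Synthetic, noise-free "measurements" generated with a = 10 and alpha = 0.08.
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [10.0 * n ** -0.08 for n in sizes]
a, alpha = fit_power_law(sizes, losses)
extrapolated = a * 1e12 ** -alpha  # predicted loss for a much larger model
```

No comparably validated curve yet links model or data size to physical task success in robotics. Robotics therefore has nothing equally reliable to extrapolate from.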

Robotics: Complex Compute with Physical Limitations

Embodied AI systems face:

- Unclear scaling relationships between model size and physical performance

- Energy and heat constraints for onboard computing

- Need to balance multiple competing objectives simultaneously

- Physical limitations on sensor quality and actuator precision

As Ben Bolte, founder of K-Scale Labs, notes in his 2025 predictions, robotics will not experience a sudden breakthrough akin to ChatGPT in language models because "achieving general-purpose embodied intelligence requires extensive, diverse data—on the order of millions of hours—which necessitates iterative improvements rather than a single, transformative release."

These fundamental differences help explain why progress in robotics follows a different trajectory than language models. While both fields benefit from advances in AI research, the physical embodiment of robotics introduces constraints and challenges that cannot be overcome through computational scaling alone. The path to a "ChatGPT moment" in robotics will likely require innovations that address these unique aspects of embodied intelligence.

The Bullish Case: Why a Robotics Breakthrough May Be Imminent

Despite the significant challenges that differentiate robotics from language models, there are compelling reasons to believe that a transformative "ChatGPT moment" in robotics may be on the horizon. Proponents of this bullish perspective point to several converging technological trends and recent breakthroughs that could catalyze rapid advancement in embodied AI.

Technological Enablers

Improved Fine-Motor Control and Agility

Recent advancements in robotics hardware have dramatically improved the physical capabilities of robots:

- Enhanced dexterity: Companies like Figure AI have demonstrated robots with unprecedented fine-motor control, allowing for manipulation of unfamiliar objects with human-like precision.

- Increased agility: Robots from Unitree and Boston Dynamics now showcase mobility that was unimaginable just a few years ago, with the ability to run, jump, and navigate complex terrain.

- Affordable humanoids: As noted in the Lucid Bots 2025 report, "By the end of 2025, humanoid robots are expected to become commoditized, with standardized components driving down costs... full-size humanoid robots will be priced below $8,000, and home robots around $4,000."

These hardware improvements provide the physical foundation necessary for more advanced embodied AI applications, removing barriers that previously limited what robots could physically accomplish.

Better World Models for Synthetic Data Training

One of the most significant developments supporting the bullish case is the emergence of sophisticated world models that enable effective training in simulated environments:

- NVIDIA's Cosmos: This suite of world foundation models, trained on 20 million hours of human activity footage, generates synthetic video of physical scenes to help robots understand and navigate the physical world.

- Digital twins: Virtual replicas kept in sync with physical systems let developers anticipate real-world outcomes before they occur. A 2025 review describes one such method in robotic manufacturing: "This method synchronizes multi-sensor features with real-time robot motion data to predict defects such as cracks and keyhole pores."

- Synthetic data generation: Advanced simulation environments can now generate diverse, realistic training scenarios that help robots learn to handle variations and edge cases they might encounter in the physical world.

These world models target one of the fundamental challenges of robotics, the data bottleneck, by letting systems learn from synthetic data. Proponents argue that such data increasingly transfers to real-world performance.
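Domain randomization is one widely used technique for making synthetic data cover variations and edge cases. Each simulated episode samples physical and sensor parameters from broad ranges, so a policy cannot overfit to one idealized simulator. The parameter names and ranges below are hypothetical placeholders. The sketch only generates episode configurations; feeding them into an actual simulator is out of scope.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class EpisodeConfig:
    friction: float            # surface friction coefficient
    object_mass_kg: float      # mass of the object to be manipulated
    light_intensity: float     # relative scene brightness for rendered cameras
    sensor_latency_ms: float   # simulated delay before observations arrive


def sample_config(rng: random.Random) -> EpisodeConfig:
    """Draw one randomized simulation configuration (illustrative ranges)."""
    return EpisodeConfig(
        friction=rng.uniform(0.3, 1.2),
        object_mass_kg=rng.uniform(0.05, 2.0),
        light_intensity=rng.uniform(0.6, 1.4),
        sensor_latency_ms=rng.uniform(0.0, 40.0),
    )


def generate_episode_configs(num_episodes: int, seed: int = 0) -> List[EpisodeConfig]:
    """Seeded generation: the same seed reproduces the same set of episodes."""
    rng = random.Random(seed)
    return [sample_config(rng) for _ in range(num_episodes)]


configs = generate_episode_configs(3, seed=42)
```

The seed illustrates a property of simulation that physical data collection lacks: the same set of scenarios can be replayed exactly. This does not remove the sim-to-real gap. It only widens the range of conditions the simulator covers.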

Increased Funding and Research Focus

The financial and institutional support for robotics has grown substantially:

- National defense applications: As noted in the AI Supremacy report, "China is so far ahead in drone manufacturing now there is a push to weaponize robots for national defense in both the U.S. and China. Anduril's Arsenal-1 hyperscale plant in Ohio and Palantir's $200 million funding of drone-startup Shield AI signals a new era."

- Manufacturing investment: With over 600,000 manufacturing vacancies reported in the U.S. in 2024, there is strong economic incentive to accelerate robotics development for industrial applications.

- Corporate coalitions: Government and corporate collaborations like the Beijing Humanoid Robot Innovation Center in China and similar initiatives in the U.S. are pooling resources to accelerate progress.

This influx of capital and strategic focus creates the conditions for rapid innovation and deployment of advanced robotics technologies.

Emergence of Fast-Learning Robots

Recent breakthroughs in how robots learn represent a potential inflection point:

- Figure AI's Helix: This vision-language-action AI model enables robots to learn new tasks more efficiently and adapt to unfamiliar objects and environments.

- Reinforcement learning advances: Improvements in reinforcement learning algorithms allow robots to learn complex behaviors through trial and error with increasing efficiency.

- Transfer learning: Techniques that allow robots to apply knowledge from one domain to another are improving, enabling faster adaptation to new tasks.

These learning advances could dramatically reduce the time and data required for robots to master new skills, potentially leading to exponential improvements in capabilities.

Potential Catalysts for a Robotics Revolution

Several factors could serve as catalysts that accelerate the path to a "ChatGPT moment" in robotics:

Convergence of AI and Robotics Expertise

The increasing overlap between AI and robotics research communities is creating fertile ground for breakthrough innovations:

- OpenAI's robotics program: OpenAI, the creator of ChatGPT, is actively hiring for roles to kickstart their robotics program, potentially bringing their expertise in large-scale AI models to embodied systems.

- Cross-disciplinary collaboration: Researchers with backgrounds in language models, computer vision, and robotics are increasingly working together, creating opportunities for novel approaches.

Economic and Demographic Pressures

External factors are creating urgent demand for advanced robotics solutions:

- Labor shortages: ABI Research predicts that "the global installed base of commercial and industrial robots could reach 16.3 million in 2030 as manufacturers attempt to offset the baby boomer exodus."

- Aging populations: In many developed countries, demographic trends are creating pressure to develop robots that can assist with healthcare and eldercare.

Standardization and Ecosystem Development

The maturation of the robotics ecosystem could accelerate adoption and innovation:

- Hardware standardization: The emergence of standardized components and interfaces reduces development costs and enables faster iteration.

- Software platforms: Common software frameworks for robotics are making it easier to develop, deploy, and improve robotic systems.

The bullish case for a robotics breakthrough rests on the convergence of these technological enablers and catalysts. While the challenges of embodied AI are substantial, proponents argue that we are approaching a tipping point where multiple advances will combine to produce a sudden leap in capabilities—much as we saw with language models in 2022. As Michael Spencer writes in AI Supremacy, "Compared to Generative AI's historical timeline, we are at about 2018 for robotics," suggesting that a ChatGPT-equivalent moment for robotics could arrive within the 2025-2030 period.

The Bearish Case: Why a "ChatGPT Moment" in Robotics Is Challenging

While the bullish perspective offers compelling reasons for optimism about an imminent breakthrough in robotics, there are equally strong arguments suggesting that a "ChatGPT moment" for embodied AI faces fundamental obstacles that cannot be easily overcome. The bearish case doesn't deny progress in robotics but contends that the path forward will be more gradual and incremental than the sudden leap seen with language models.

Physical Constraints to Adoption

Unlike software-based AI systems, robots face inherent physical limitations that software alone cannot overcome:

- Hardware dependencies: As Coatue's analysis points out, "Robotics won't have a ChatGPT Moment due to physical constraints to adoption, high upfront costs of ownership, and the nascency of the ecosystem."

- Physical wear and tear: Robots experience degradation over time, requiring maintenance and replacement of parts that digital systems don't face.

- Energy limitations: Physical movement requires substantial energy, creating constraints on operational duration and capability that don't apply to digital systems.

- Safety requirements: Physical systems that interact with humans and environments must meet rigorous safety standards, limiting how quickly new technologies can be deployed.

These physical constraints create fundamental barriers to the kind of rapid scaling and deployment that characterized the ChatGPT moment in language models.

High Upfront Costs and Infrastructure Requirements

The economics of robotics present significant barriers to widespread adoption:

- Capital investment: Even with recent cost reductions, advanced robots remain expensive to develop, manufacture, and deploy compared to software solutions.

- Infrastructure adaptation: Environments often need to be modified to accommodate robots, adding significant costs beyond the robots themselves.

- Maintenance and operations: Ongoing costs for maintenance, repairs, and operational support create financial barriers that don't exist for digital AI systems.

- Training and integration: Organizations must invest in training staff and integrating robotic systems with existing processes and infrastructure.

These economic factors mean that even if technological breakthroughs occur, the path to widespread adoption will likely be slower and more selective than what we saw with language models.

Data Scarcity and Quality Issues

One of the most significant challenges for embodied AI is the data bottleneck:

- Limited real-world data: As noted in a 2024 survey on embodied AI, "Data scarcity has been a persistent challenge in embodied AI research. Nonetheless, collecting real-world robot data poses numerous challenges."

- Labeling complexity: Data labeling for robotics is particularly challenging, requiring precise annotation of physical states, objects, and actions in three-dimensional space.

- Diversity requirements: Robots need exposure to incredibly diverse scenarios to develop generalized capabilities, far beyond what most training datasets currently provide.

- Sim-to-real gap: While simulation environments help address data scarcity, there remains a significant gap between simulated and real-world performance that limits the effectiveness of synthetic data.

As Ben Bolte of K-Scale Labs argues, general-purpose embodied intelligence requires extensive, diverse data "on the order of millions of hours," which favors iterative improvement over a single, transformative release.

Ecosystem Nascency and Fragmentation

The robotics ecosystem lacks the maturity and integration seen in other AI domains:

- Fragmented standards: Unlike the relatively standardized field of language models, robotics encompasses diverse hardware platforms, software frameworks, and application domains with limited interoperability.

- Specialized solutions: Most robotic systems are designed for specific use cases rather than general-purpose applications, limiting cross-domain learning and transfer.

- Limited developer tools: The tools and platforms for developing robotic applications remain less accessible and comprehensive than those for language models and other AI domains.

This fragmentation slows the pace of innovation and makes it difficult to achieve the kind of ecosystem-wide momentum that drove the rapid adoption of language models.

Historical Patterns of Incremental Progress

The history of robotics suggests a pattern of steady but incremental advancement rather than revolutionary leaps:

- Decades of gradual improvement: Despite periodic hype cycles, robotics has historically progressed through steady incremental improvements rather than sudden breakthroughs.

- Domain-specific advances: Progress in robotics typically occurs in specific domains or capabilities rather than across the entire field simultaneously.

- Persistent challenges: Some fundamental challenges in robotics, such as dexterous manipulation of arbitrary objects, have remained difficult despite decades of research.

As the Coatue analysis concludes, "We believe robotics cannot have a ChatGPT Moment... Rather, we see robotics crossing the chasm more gradually, as each of us experience our own unique Robot Moments when we interact with robots in a coffee shop or in people's homes as capabilities mature at a rapid clip."

Scaling Law Differences

The scaling laws that enabled the breakthrough in language models may not apply in the same way to embodied AI:

- Different scaling relationships: As Tim Urista notes in his analysis of scaling laws in embodied AI, "While scaling laws in language models are now well-documented — following roughly power-law relationships between model size, compute, and performance — the same comprehensive understanding has yet to be established for embodied AI systems designed for physical tasks."

- Diminishing returns: Some observers argue that simply scaling model size and training data may yield diminishing returns for embodied AI because of the complexity of physical interaction.

- Multi-objective optimization: Unlike language models that optimize for next-token prediction, robots must balance multiple competing objectives simultaneously (e.g., speed, safety, energy efficiency, task completion), making scaling more complex.

The bearish perspective doesn't deny the significant progress being made in robotics or the potential for embodied AI to transform industries over time. Rather, it suggests that the nature of physical embodiment creates fundamental differences that will lead to a different trajectory of advancement—one characterized by steady progress across multiple fronts rather than a single transformative moment. As Thane Ruthenis writes in their 2025 bear case for AI progress, we should expect "continued progress. But the dimensions along which the progress happens are going to decouple from the intuitive 'getting generally smarter' metric, and will face steep diminishing returns."

Sensor Technology and Real-Time Data Processing Challenges

The gap between language models and embodied AI in robotics is perhaps most evident when examining the specific challenges related to sensor technology and real-time data processing. These technical hurdles represent some of the most significant obstacles to achieving a "ChatGPT moment" in robotics.

Multimodal Sensory Integration Requirements

Unlike text-only language models, which process a single modality, embodied AI systems must integrate multiple sensory streams simultaneously:

Diverse Sensor Types

Modern robots rely on a complex array of sensors to perceive their environment:

- Visual sensors: Cameras (RGB, depth, stereo, thermal) provide visual information about the environment, objects, and people.

- Tactile sensors: Pressure, force, and texture sensors enable robots to "feel" objects and surfaces.

- Proprioceptive sensors: Joint encoders and inertial measurement units (IMUs), which combine accelerometers and gyroscopes, provide information about the robot's own body position and movement.

- Auditory sensors: Microphones capture sound information for navigation, communication, and environmental awareness.

- Specialized sensors: Depending on the application, robots may incorporate LIDAR, radar, ultrasonic sensors, or other specialized sensing technologies.

Integration Challenges

The challenge isn't just in having these sensors but in integrating their data streams coherently:

- Temporal alignment: Different sensors operate at different frequencies and with varying latencies, requiring sophisticated synchronization.

- Spatial registration: Data from different sensors must be mapped to a common coordinate system to create a unified representation of the environment.

- Conflicting information: When sensors provide contradictory information, the system must resolve these conflicts to form a coherent understanding.

- Contextual interpretation: The meaning of sensor data often depends on context, requiring sophisticated models to interpret raw signals appropriately.

As noted in Encord's analysis of embodied AI, "Embodied AI systems use sensors like cameras, LIDAR, and microphones to see, hear, and feel their surroundings. Processing this data quickly is crucial."
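The temporal alignment, spatial registration, and conflict-resolution steps listed above can be illustrated with a minimal sketch. It assumes that all sensors share one clock, that signals are scalar or planar, and that sensor errors are independent and roughly Gaussian. The function names (`interpolate_at`, `sensor_to_base`, `fuse`) and all numbers are hypothetical, not taken from Encord or any robotics framework. Production systems also handle clock offsets, full 3D poses, and outright sensor faults (which call for outlier rejection rather than averaging), none of which this sketch covers.

```python
import bisect
import math


def interpolate_at(times, values, t):
    """Temporal alignment: linearly interpolate a signal sampled at strictly
    increasing `times` to query time t (clamped at the ends of the record)."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect.bisect_right(times, t)  # times[i - 1] <= t < times[i]
    w = (t - times[i - 1]) / (times[i] - times[i - 1])
    return values[i - 1] + w * (values[i] - values[i - 1])


def sensor_to_base(point_xy, mount_xy, mount_yaw_rad):
    """Spatial registration: map a planar point from a sensor's frame into the
    robot base frame, given where the sensor is mounted and how it is rotated."""
    x, y = point_xy
    c, s = math.cos(mount_yaw_rad), math.sin(mount_yaw_rad)
    return (mount_xy[0] + c * x - s * y, mount_xy[1] + s * x + c * y)


def fuse(estimates):
    """Conflict resolution for noisy (not faulty) sensors: inverse-variance
    weighting of independent (value, variance) estimates of one quantity."""
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused estimate and its (smaller) variance


# Hypothetical: a 200 Hz IMU signal queried at a camera frame's timestamp.
imu_t = [0.000, 0.005, 0.010]
imu_yaw_rate = [0.0, 0.1, 0.2]
print(interpolate_at(imu_t, imu_yaw_rate, 0.0075))  # about 0.15

# A LIDAR return 1 m ahead of a sensor mounted 0.2 m forward, rotated 90 degrees.
print(sensor_to_base((1.0, 0.0), (0.2, 0.0), math.pi / 2))  # about (0.2, 1.0)

# A precise reading (variance 0.01) and a coarse one (variance 1.0) disagree.
print(fuse([(1.0, 0.01), (5.0, 1.0)]))  # the fused value stays close to 1.0
```

Inverse-variance weighting lets the more precise sensor dominate without discarding the other entirely; it is a deliberately simple stand-in for the probabilistic filters (such as Kalman filters) that real fusion pipelines typically use.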

Real-Time Processing Demands

Perhaps the most significant difference between language models and embodied AI lies in the strict real-time processing requirements that the latter must meet:

Time-Critical Decision Making

Robots operating in the physical world face strict timing constraints:

- Reaction time requirements: A robot must respond quickly to unexpected obstacles, human movements, or changing conditions.

- Control loop frequencies: Stable control of physical systems often requires high-frequency feedback loops (e.g., 1000 Hz for fine motor control).

- Deadline guarantees: Missing processing deadlines can lead to physical failures, unlike in language models where slower processing merely causes delay.
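The consequences of control-loop rate can be shown with a deliberately simple simulation. The sketch regulates a one-dimensional 1 kg point mass back to zero with a proportional-derivative (PD) controller. It integrates the physics at a fine 0.1 ms step but lets the controller update only `control_hz` times per second, holding its output in between. The function name `simulate_pd` and the gains (`kp=400`, `kd=40`, chosen to be critically damped in continuous time) are hypothetical, and sensor noise, actuator limits, and computation latency are all ignored.

```python
def simulate_pd(control_hz, duration_s=1.0, kp=400.0, kd=40.0, mass_kg=1.0,
                physics_dt=1e-4, x0=1.0):
    """Return the final position of a point mass driven toward x = 0 by a PD
    controller that samples the state only control_hz times per second."""
    x, v = x0, 0.0
    steps_per_update = round(1.0 / (control_hz * physics_dt))
    force = 0.0
    for step in range(round(duration_s / physics_dt)):
        if step % steps_per_update == 0:
            # Controller tick: read the current state and compute a new force.
            force = -kp * x - kd * v
        # Between ticks the last force is held constant (zero-order hold).
        v += (force / mass_kg) * physics_dt  # semi-implicit Euler: velocity first
        x += v * physics_dt                  # then position
    return x


for hz in (1000, 100, 10):
    print(f"{hz:>5} Hz -> final position {simulate_pd(hz):.3g} m")
```

Under these assumptions, the 1000 Hz run settles within a tiny fraction of a millimetre of the target. At 10 Hz, each force acts on 100 ms-old state, overshoots, and the oscillation grows by roughly a factor of four per controller tick. Latency and noise in a real robot would shrink the stable range further.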

Computational Constraints

These real-time requirements create significant computational challenges:

- Edge computing limitations: Onboard processors must balance computational power against energy consumption, heat generation, and physical space constraints.

- Latency vs. throughput: While language models can optimize for throughput, robotics systems must prioritize low-latency responses even at the cost of overall throughput.

- Parallel processing complexity: Efficiently distributing real-time processing across multiple cores or specialized hardware remains challenging.

As the Medium article on scaling laws in embodied AI notes, "While LLMs work with discrete tokens in a purely digital space, embodied AI systems must process continuous sensory inputs, handle real-time feedback, and generate precise motor controls."
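The latency-versus-throughput trade-off can be made concrete with simple arithmetic. Batching is the usual way to raise accelerator utilization when serving models in a data center, but it makes the oldest frame in each batch wait for the rest to arrive. The sketch assumes a hypothetical 30 Hz camera and hypothetical inference times (20 ms for one frame, 40 ms for a batch of eight). The function names are illustrative, and real timings depend entirely on the model and hardware.

```python
def first_frame_latency_s(frame_rate_hz, batch_size, inference_s):
    """Worst-case delay before the oldest frame in a batch has a result:
    it waits for the remaining frames to arrive, then for inference to finish."""
    wait_for_batch = (batch_size - 1) / frame_rate_hz
    return wait_for_batch + inference_s


def compute_capacity_fps(batch_size, inference_s):
    """Frames per second the accelerator could process if fed continuously
    (relevant, for example, when one accelerator serves several cameras)."""
    return batch_size / inference_s


# Hypothetical timings for one model on one accelerator.
for batch, infer_s in [(1, 0.020), (8, 0.040)]:
    print(f"batch={batch}: latency {1000 * first_frame_latency_s(30, batch, infer_s):.0f} ms, "
          f"capacity {compute_capacity_fps(batch, infer_s):.0f} fps")
```

With these assumed numbers, batching quadruples capacity (from 50 to 200 frames per second) but raises worst-case latency from 20 ms to about 273 ms. That trade-off is acceptable for a chatbot but too slow for a reactive control loop.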

Data Labeling and Quality Control Issues

The data challenges for embodied AI extend beyond collection to labeling and quality control:

Labeling Complexity

Labeling data for robotics presents unique challenges:

- 3D annotation requirements: Many robotics tasks require precise 3D annotations of objects, surfaces, and spaces.

- Temporal labeling: Actions and movements must be labeled across time sequences, not just in static snapshots.

- Physical property annotation: Labels often need to include physical properties like weight, material, or fragility that aren't visually apparent.

- Intention and affordance labeling: Understanding how objects can be used often requires labeling their affordances and typical usage patterns.

As Encord's guide explains, "Data labeling is a process to give meaning to raw data (e.g., 'this is a door,' 'this is an obstacle'). It is used to guide supervised learning models to recognize patterns correctly. Poor labeling leads to errors, like a robot misidentifying a pet as trash."
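Writing down a single label record shows why robotics annotations are richer than image tags. The schema below is a hypothetical sketch, not Encord's format or any dataset standard. It combines a 3D box, physical properties that cameras cannot see, affordances, and time-stamped action segments. It also includes a check that flags malformed or overlapping segments, assuming a convention of one action at a time per episode.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Box3D:
    center_m: Tuple[float, float, float]  # x, y, z in the robot base frame
    size_m: Tuple[float, float, float]    # length, width, height
    yaw_rad: float = 0.0                  # rotation about the vertical axis


@dataclass
class ObjectLabel:
    category: str                         # e.g. "door", "obstacle"
    box: Box3D                            # 3D annotation
    mass_kg: Optional[float] = None       # physical properties that are not
    material: Optional[str] = None        # visible in camera images
    fragile: Optional[bool] = None
    affordances: List[str] = field(default_factory=list)  # e.g. ["graspable"]


@dataclass
class ActionSegment:
    action: str                           # e.g. "open_door"
    start_s: float                        # temporal label: start and end times
    end_s: float


def check_segments(segments):
    """Return human-readable problems: non-positive durations and overlaps."""
    problems = []
    latest_end, latest_action = None, None
    for seg in sorted(segments, key=lambda s: s.start_s):
        if seg.end_s <= seg.start_s:
            problems.append(f"{seg.action}: ends at {seg.end_s} s, not after its start")
        if latest_end is not None and seg.start_s < latest_end:
            problems.append(f"{seg.action} starts before {latest_action} ends")
        if latest_end is None or seg.end_s > latest_end:
            latest_end, latest_action = seg.end_s, seg.action
    return problems


mug = ObjectLabel("mug", Box3D((0.6, 0.1, 0.8), (0.08, 0.08, 0.1)),
                  mass_kg=0.3, material="ceramic", fragile=True,
                  affordances=["graspable", "pourable"])
print(check_segments([ActionSegment("reach", 0.0, 1.5),
                      ActionSegment("grasp", 1.2, 2.0)]))
# ['grasp starts before reach ends']
```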

Quality Control Challenges

Ensuring data quality for robotics is particularly difficult:

- Physical accuracy requirements: Small errors in physical measurements can lead to significant failures in robot behavior.

- Consistency across modalities: Quality control must ensure consistency across different sensor modalities.

- Environmental variation: Data must capture the full range of environmental conditions the robot might encounter.

- Edge case representation: Rare but critical scenarios must be adequately represented in training data.
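The edge-case requirement lends itself to an automated check. This hypothetical sketch assumes that each recorded episode carries scenario tags (for example, lighting or floor conditions). It reports every required scenario whose share of episodes falls below a chosen threshold. The function name, tag names, and the 30% threshold are illustrative only.

```python
from collections import Counter


def coverage_gaps(episode_tags, required_tags, min_fraction):
    """Map each required scenario tag to its share of episodes, keeping only
    tags whose share is below min_fraction (tags never seen report 0.0)."""
    n = len(episode_tags)
    # Count each tag at most once per episode.
    counts = Counter(tag for tags in episode_tags for tag in set(tags))
    return {tag: counts[tag] / n
            for tag in required_tags
            if counts[tag] / n < min_fraction}


episodes = [["daylight"], ["daylight", "wet_floor"], ["daylight"], ["low_light"]]
required = ["daylight", "low_light", "wet_floor", "glass_door"]
print(coverage_gaps(episodes, required, min_fraction=0.3))
# {'low_light': 0.25, 'wet_floor': 0.25, 'glass_door': 0.0}
```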

Scaling Laws in Embodied AI vs. Language Models

The scaling behavior of embodied AI systems differs fundamentally from that of language models:

Different Scaling Dimensions

While language models scale primarily along three dimensions (model size, dataset size, and compute), embodied AI systems must consider additional factors:

- Sensor quality and diversity: Higher resolution sensors and more diverse sensor types can improve performance but add complexity.

- Actuator precision and responsiveness: Better motors and actuators enable more precise control but require more sophisticated control algorithms.

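- Environmental complexity: Training in more complex and diverse environments improves generalization but multiplies data requirements, because each new axis of variation (lighting, surfaces, object sets) multiplies the number of conditions the data must cover.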

- Task diversity: Learning to perform a wider range of tasks requires not just more data but fundamentally different types of data.
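A back-of-the-envelope calculation shows how environmental variation compounds data needs. Suppose, hypothetically, that every combination of conditions must be covered by a fixed number of demonstrations. Each new axis of variation then multiplies the total rather than adding to it. The axes, their values, and the figure of 20 demonstrations per condition are illustrative assumptions.

```python
from math import prod


def condition_count(axes):
    """Number of distinct conditions when every value on every axis of
    variation is combined with every value on every other axis."""
    return prod(len(values) for values in axes.values())


axes = {
    "lighting": ["bright", "dim", "backlit"],
    "floor": ["tile", "carpet", "wood", "wet"],
    "clutter": ["none", "light", "heavy"],
}
demos_per_condition = 20
print(condition_count(axes) * demos_per_condition)   # 36 conditions -> 720

axes["object_set"] = [f"set_{i}" for i in range(10)]  # one more axis, 10 values
print(condition_count(axes) * demos_per_condition)   # 360 conditions -> 7200
```

Learned models can generalize to combinations they have not seen, so full coverage is a worst case rather than a requirement. The point is only that this worst case grows multiplicatively with each axis.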

Unclear Performance Metrics

Language models have relatively well-established evaluation metrics, but robotics performance is harder to measure:

- Multi-objective evaluation: Robotics systems must balance multiple competing objectives (speed, safety, energy efficiency, task completion).

- Context-dependent success criteria: What constitutes successful performance often depends heavily on the specific context and application.

- Physical risk assessment: Evaluation must consider not just task completion but also safety and reliability under various conditions.
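One way to respect these competing objectives is to report a vector of metrics and treat safety as a pass/fail gate, rather than folding everything into one weighted score in which speed gains could offset safety failures. The sketch below is hypothetical: the record fields, the function name `summarize`, and the zero-tolerance default are illustrative choices, not an established benchmark.

```python
from dataclasses import dataclass


@dataclass
class EpisodeResult:
    success: bool
    duration_s: float
    energy_j: float
    safety_violations: int  # e.g. contacts above a force limit, speed-limit breaches


def summarize(episodes, max_violation_rate=0.0):
    """Aggregate per-episode results without collapsing them into one number."""
    n = len(episodes)
    violation_rate = sum(e.safety_violations > 0 for e in episodes) / n
    return {
        "success_rate": sum(e.success for e in episodes) / n,
        "mean_duration_s": sum(e.duration_s for e in episodes) / n,
        "mean_energy_j": sum(e.energy_j for e in episodes) / n,
        "violation_rate": violation_rate,
        # Safety is a gate, not a term that other metrics can outweigh.
        "passes_safety_gate": violation_rate <= max_violation_rate,
    }


runs = [EpisodeResult(True, 10.0, 100.0, 0), EpisodeResult(True, 12.0, 120.0, 0),
        EpisodeResult(False, 14.0, 140.0, 0), EpisodeResult(True, 16.0, 160.0, 1)]
print(summarize(runs))
```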

Hardware Limitations and Energy Constraints

Physical embodiment introduces hardware and energy constraints that don't apply to language models:

Hardware Limitations

- Sensor resolution and range: Physical sensors have fundamental limitations in resolution, range, and operating conditions.

- Actuator precision and force: Motors and actuators have physical limits on precision, speed, and force generation.

- Physical size and weight constraints: Many applications require robots to be compact and lightweight, limiting onboard computing capabilities.

- Durability and reliability requirements: Hardware must withstand physical stresses and environmental conditions over extended periods.
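The effect of limited sensor resolution can be estimated with a first-order calculation. The sketch assumes a planar serial arm whose joint angles are read by encoders with a given number of bits. It bounds the fingertip position error by summing, for each joint, one encoder count times that joint's maximum distance to the tip. The function names, link lengths, and 12-bit resolution are hypothetical. Real error budgets also include backlash, link flex, and calibration error.

```python
import math


def encoder_step_rad(bits):
    """Angular size of one count for an encoder with 2**bits counts per turn."""
    return 2 * math.pi / 2 ** bits


def worst_case_tip_error_m(link_lengths_m, joint_error_rad):
    """First-order bound on tip position error for a planar serial arm.

    A small error at joint i moves the tip by at most joint_error * r_i, where
    r_i is the distance from joint i to the tip, which is at most the sum of
    the link lengths beyond that joint.
    """
    error, reach = 0.0, 0.0
    for length in reversed(link_lengths_m):  # walk from the tip back to the base
        reach += length
        error += joint_error_rad * reach
    return error


step = encoder_step_rad(12)                      # about 1.5 milliradians
print(worst_case_tip_error_m([0.3, 0.2], step))  # about 1.1e-3 m, roughly 1 mm
```

Because the bound scales with reach, the same encoders on a longer arm produce proportionally larger tip errors.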

Energy Constraints

- Battery limitations: Mobile robots face severe energy constraints that limit operational time and computing power.

- Thermal management: High-performance computing generates heat that must be managed in compact robotic systems.

- Energy-efficient algorithms: Unlike language models served from data centers, robotics algorithms running on board must prioritize energy efficiency.
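The energy trade-off reduces to a simple budget: runtime equals stored energy divided by total power draw. The figures below (a 500 Wh pack, 150 W for motors, 25 W for sensors) and the function name `runtime_hours` are hypothetical. The calculation also ignores conversion losses and usable-capacity limits. Even so, it shows how directly onboard compute power cuts into operating time.

```python
def runtime_hours(battery_wh, loads_w):
    """Idealized runtime: battery energy divided by the summed average loads."""
    return battery_wh / sum(loads_w.values())


base_loads = {"motors": 150.0, "sensors": 25.0}
for compute_w in (50.0, 100.0, 200.0):
    hours = runtime_hours(500.0, {**base_loads, "compute": compute_w})
    print(f"compute {compute_w:.0f} W -> {hours:.2f} h")
```

Under these assumptions, moving from a 50 W to a 200 W compute module cuts runtime from about 2.2 to about 1.3 hours. That estimate does not include the extra cooling a hotter processor would need.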

These sensor technology and real-time data processing challenges are among the most significant obstacles to achieving a "ChatGPT moment" in robotics. Advances in hardware, algorithms, and data collection methodologies continue to address them, but the challenges themselves reflect fundamental differences from the language model domain that simple scaling of existing approaches cannot overcome. Instead, they require innovative solutions designed specifically for the unique requirements of embodied AI systems operating in the physical world.

Conclusion and Future Outlook

Synthesis of Bullish and Bearish Perspectives

The path to a "ChatGPT moment" in robotics presents a complex landscape where both optimistic and pessimistic perspectives offer valuable insights. The truth likely lies somewhere between these viewpoints, with different aspects of robotics advancing at varying rates.

The bullish case highlights significant technological enablers that are converging to accelerate progress: improved fine-motor control and agility in robots, better world models for synthetic data training, increased funding and research focus, and the emergence of fast-learning robots. These developments suggest that we may be approaching an inflection point where robotics capabilities could advance rapidly.

Conversely, the bearish perspective emphasizes fundamental constraints that differentiate robotics from language models: physical limitations that software alone cannot overcome, high upfront costs and infrastructure requirements, data scarcity and quality issues, ecosystem fragmentation, and historical patterns of incremental rather than revolutionary progress. These factors suggest that robotics will follow a more gradual evolution than the sudden leap seen with ChatGPT.

What emerges from this analysis is not a binary outcome but a nuanced understanding of how embodied AI might evolve. As Coatue's analysis suggests, "We see robotics crossing the chasm more gradually, as each of us experience our own unique Robot Moments when we interact with robots in a coffee shop or in people's homes as capabilities mature at a rapid clip." This perspective acknowledges both the transformative potential of robotics and the practical constraints that shape its development path.

Likely Timeline for Significant Breakthroughs

Based on the evidence examined in this report, we can outline a potential timeline for robotics advancement:

2025-2027: Foundation Building

- Continued improvements in hardware capabilities and cost reduction

- Expansion of synthetic data training environments

- Incremental advances in specific domains (warehouse automation, industrial robotics)

- Early consumer adoption of specialized robots

2027-2030: Acceleration Phase

- Emergence of more general-purpose robotic platforms

- Significant improvements in transfer learning and adaptation

- Wider deployment in controlled commercial environments

- Increasing integration of language models with embodied systems

2030-2035: Potential Transformation

- Possible emergence of truly general-purpose robots

- Widespread adoption across multiple industries

- More natural human-robot interaction

- Ecosystem standardization and maturation

This timeline suggests that while we may not see a single "ChatGPT moment" for robotics, the 2025-2035 period could witness a series of significant breakthroughs that collectively transform the field. As Michael Spencer notes in AI Supremacy, "Compared to Generative AI's historical timeline, we are at about 2018 for robotics," suggesting that the most significant advances may still be several years away.

Key Indicators to Watch

Several indicators will signal progress toward a potential robotics breakthrough:

Technical Indicators:

- Improvements in robots' ability to generalize from limited examples

- Advances in multimodal sensory integration

- Breakthroughs in real-time processing of complex sensory data

- Development of more energy-efficient computing for edge deployment

Market Indicators:

- Decreasing costs for advanced robotic systems

- Emergence of standardized platforms and development tools

- Increasing venture capital investment in robotics startups

- Adoption rates in early commercial applications

Research Indicators:

- Publication of new scaling laws specific to embodied AI

- Breakthroughs in sim-to-real transfer learning

- Advances in unsupervised learning for physical tasks

- Development of better benchmarks for embodied AI performance

Implications for Industry, Research, and Society

The evolution of embodied AI will have far-reaching implications across multiple domains:

Industry Implications:

- Manufacturing and logistics will likely see the earliest and most significant transformations

- Healthcare robotics may advance more gradually due to regulatory constraints and safety requirements

- Consumer robotics will likely focus on specific use cases before general-purpose applications emerge

- New business models will develop around robot deployment, maintenance, and training

Research Implications:

- Increasing convergence between language model research and embodied AI

- Greater focus on data-efficient learning methods for physical tasks

- More emphasis on safety, reliability, and robustness in AI systems

- New approaches to human-robot collaboration and interaction

Societal Implications:

- Gradual rather than sudden workforce transformation

- New skill requirements for working alongside advanced robotic systems

- Ethical and regulatory frameworks will need to evolve with capabilities

- Potential for both increased productivity and new forms of human-machine partnership

Final Assessment

The question of whether robotics will experience its own "ChatGPT moment" ultimately depends on how we define such a breakthrough. If we expect a single technological advancement that suddenly transforms the entire field, the evidence suggests this is unlikely due to the fundamental differences between language models and embodied AI systems.

However, if we consider a "ChatGPT moment" more broadly as a period of accelerated progress and adoption that fundamentally changes how we interact with technology, then robotics may indeed be approaching such a transformation—albeit one that unfolds over years rather than months.

The unique challenges of embodied AI—from sensor integration to real-time processing, from physical constraints to data limitations—mean that robotics will follow its own developmental trajectory. This path will be shaped by the interplay between hardware and software advances, between simulation and real-world experience, and between specialized and general-purpose applications.

What seems most likely is that robotics will experience a series of domain-specific breakthroughs that collectively drive the field forward, with progress accelerating as these advances build upon each other. The result may not be as sudden or as universally visible as ChatGPT's emergence, but the long-term impact of robotics on how we live and work could be even more profound.

As we navigate this transition, maintaining a balanced perspective that acknowledges both the tremendous potential of embodied AI and the significant challenges it faces will be essential for researchers, investors, policymakers, and society as a whole. The future of robotics may not arrive in a single moment, but its gradual unfolding promises to be one of the most significant technological transformations of our time.

References and Sources

Academic and Research Sources

- Brooks, R. (1991). Intelligence without representation. Artificial Intelligence, 47(1-3), 139-159. https://doi.org/10.1016/0004-3702(91)90053-M

- Bolte, B. (2025). Scaling laws in embodied AI: Challenges and opportunities. K-Scale Labs Technical Report.

- Ruthenis, T. (2025). A bear case: My predictions regarding AI progress. LessWrong. https://www.lesswrong.com/posts/oKAFFvaouKKEhbBPm/a-bear-case-my-predictions-regarding-ai-progress

- Urista, T. (2024). Understanding scaling laws in embodied AI: Beyond language models. Medium. https://medium.com/@timothy-urista/understanding-scaling-laws-in-embodied-ai-beyond-language-models-c1c34a30675f

Industry Reports and Analyses

- ABI Research. (2025). Robotics market forecast 2025-2030: Industrial and commercial applications.

- Coatue. (2025). Robotics won't have a ChatGPT moment. Coatue Perspective. https://www.coatue.com/blog/perspective/robotics-wont-have-a-chatgpt-moment

- Encord. (2025). What is embodied AI? A guide to AI in robotics. https://encord.com/blog/embodied-ai/

- Fundación Bankinter. (2024). Embodied AI: The challenge of building robots that learn from the real world. https://www.fundacionbankinter.org/en/noticias/embodied-ai-the-challenge-of-building-robots-that-learn-from-the-real-world/

- Lucid Bots. (2025). Robot rundown: Future at your fingertips. https://lucidbots.com/robot-rundown/future-at-your-fingertips

- NVIDIA. (2025). National robotics week 2025. NVIDIA Blog. https://blogs.nvidia.com/blog/national-robotics-week-2025/

- Spencer, M. (2025). ChatGPT robotics moment in 2025. AI Supremacy. https://www.ai-supremacy.com/p/chatgpt-robotics-moment-in-2025

- The Robot Report. (2025). The state of AI robotics heading into 2025. https://www.therobotreport.com/the-state-of-ai-robotics-heading-into-2025/

- WisdomTree. (2025). Titans of tomorrow: Quantum computing and robotics on the brink of revolution. https://www.wisdomtree.com/investments/blog/2025/01/16/titans-of-tomorrow-quantum-computing-and-robotics-on-the-brink-of-revolution

News Articles and Blog Posts

- de Gregorio Noblejas, I. (2024). The robotics breakthrough that obsoleted OpenAI. Medium. https://medium.com/@ignacio.de.gregorio.noblejas/the-robotics-breakthrough-that-obsoleted-openai-075015d9fe91

- Forward Future. (2025). The rise of embodied AI. https://www.forwardfuture.ai/p/the-rise-of-embodied-ai

- Nature Machine Intelligence. (2025). Special issue: Embodied AI challenges and opportunities. Nature Machine Intelligence, 7(4). https://www.nature.com/articles/s42256-025-01005-x

Company Resources

- Boston Dynamics. (2025). Atlas: The next generation.

- Figure AI. (2025). Helix: Vision-language-action AI for humanoid robots.

- Sanctuary AI. (2025). Phoenix: General-purpose humanoid robot.

- Unitree Robotics. (2025). G1 humanoid robot technical specifications.

Note 1: This reference list includes sources directly cited in the report as well as additional resources that informed the analysis and perspectives presented.

Note 2: This report was created with manus.im, one of my favorite tools as of April 2025.