From Hype to Hiring: Navigating the Physical AI Frontier at the Nebius Summit

I recently had the privilege of participating in one of those rare conversations that cut through the hype and get to the heart of what’s really happening in robotics and physical AI. Hosted by Nebius at the iconic Computer History Museum, the Robotics & Physical AI Summit brought together a curated group of founders building the future, investors funding it, and researchers pushing the boundaries of what’s possible. The format was intimate, fostering the kind of candid, unfiltered dialogue that’s increasingly hard to find.

The air was thick with more than just the usual Silicon Valley optimism. A palpable tension defined the evening: a productive friction between the boundless ambition of creating intelligent machines and the gritty realities of deploying them in the physical world. With over 850 registrants and 254 award applicants from 62 countries, the event marked a clear inflection point for the industry, signaling a collective shift in focus from crafting viral videos to securing commercial contracts.

Before diving into what I learned, I want to thank the incredible group of people who made this conversation so valuable, including speakers from Nebius, NVIDIA, Agility Robotics, Cobot, Foxglove, and a host of leading venture firms like Shanda Grab Ventures, Radical Ventures, N47, Qualcomm Ventures, and Khosla Ventures. What follows is my synthesis of the key insights from the panels, presentations, and sideline conversations. In keeping with the Chatham House Rule, I’m sharing the ideas and learnings without attributing specific comments to specific individuals. The goal is to capture the collective wisdom that emerged in a way that’s useful for others navigating this complex landscape.

Part I: The Infrastructure Revolution

For years, the dream of robotics has outpaced the reality of its underlying infrastructure. The summit made it clear that this is changing. The conversation has moved beyond just the robots themselves to the foundational layers of simulation, data, and compute that make them possible.

NVIDIA’s Three-Computer Framework

The keynote address from NVIDIA’s Head of Robotics and Edge Computing Ecosystem, Amit Goel, provided a powerful framework for understanding this shift. He argued that physical AI presents a set of unique challenges that distinguish it from its digital counterparts. Unlike LLMs, which can draw upon the vast expanse of the internet, physical AI models are starved for relevant, high-quality data. You can’t scrape the web for millions of examples of a robot successfully navigating a cluttered warehouse or delicately handling a fragile object. This data must be created.

Furthermore, testing in the real world is slow, expensive, and often dangerous. As one speaker memorably put it, you can’t simply “vibe check a robot” when a mistake could have catastrophic safety and financial consequences. This necessitates a robust virtual environment where robots can be trained and validated at scale before they ever touch a factory floor.

To address this, NVIDIA has architected its strategy around three distinct but interconnected “computers”:

| Computer Type | Purpose | Key Technologies |
|---|---|---|
| Simulation Computer | Data generation, reinforcement learning, and model testing in a physically accurate virtual world. | Omniverse, Isaac Sim, Cosmos, RTX |
| Training Computer | Training the AI “brains” on massive, multimodal datasets (video, audio, force, touch). | Blackwell, AI Factory |
| Edge Computer | Deploying the trained AI models on the physical robot for low-latency, real-time inference. | High-performance on-device compute |

This framework highlights a fundamental truth: the future of robotics is as much about data centers and simulation engines as it is about motors and gears. The idea that a robot must “live a thousand lifetimes in the digital one” was a recurring theme, emphasizing that the path to real-world autonomy runs through the virtual world first.

The Synthetic Data Imperative

Given the data scarcity problem, it was no surprise that synthetic data was a major topic of conversation, particularly among investors. Multiple VCs on the investment panel pointed to synthetic data as one of the most critical and exciting frontiers. They are tracking the progress of visual synthetic data platforms that can generate realistic sensor data for a variety of environments, allowing models to be trained on a much wider range of scenarios than could ever be captured in the real world.

One investor noted that the ability to move seamlessly from one simulated environment to another is a key breakthrough they are watching. This is crucial for generalization. A robot trained only in a pristine, perfectly lit virtual warehouse will fail the moment it encounters the chaotic reality of an actual loading dock. The quality and diversity of synthetic data are therefore paramount. This is not just about creating photorealistic images, but about accurately modeling the physics of interaction, the nuances of sensor noise, and the endless variability of the real world.
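To make the point about diversity concrete, here is a minimal sketch of domain randomization, one common way to vary simulated scenes so a model does not overfit to a single pristine environment. It is an illustration only, not any speaker's or vendor's pipeline: the `SceneParams` fields, the parameter ranges, and the helper names are all hypothetical and chosen just to show the idea of sampling lighting, physics, sensor noise, and clutter per scene.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical per-scene settings; field names and ranges are illustrative."""
    light_intensity: float    # relative brightness, 1.0 = nominal
    friction: float           # floor friction coefficient
    sensor_noise_std: float   # std dev of additive depth noise, in metres
    clutter_objects: int      # number of distractor objects in the scene


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene configuration from assumed ranges."""
    return SceneParams(
        light_intensity=rng.uniform(0.2, 1.5),
        friction=rng.uniform(0.4, 1.0),
        sensor_noise_std=rng.uniform(0.0, 0.02),
        clutter_objects=rng.randint(0, 25),
    )


def noisy_depth(true_depth_m: float, params: SceneParams, rng: random.Random) -> float:
    """Simulate a depth reading by adding Gaussian noise for this scene."""
    return true_depth_m + rng.gauss(0.0, params.sensor_noise_std)


# Generate a batch of varied scenes from a fixed seed for reproducibility.
rng = random.Random(0)
scenes = [sample_scene(rng) for _ in range(1000)]
```

Each training episode would then be rendered and simulated with a different `SceneParams`, so the dimly lit, cluttered loading dock is as likely to appear as the tidy warehouse.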

The Unsung Hero: Observability

One of the most telling moments of the awards ceremony was the Pioneer Award given to Foxglove. Foxglove doesn’t build robots; it builds the tools to understand what robots are doing. Their platform for data visualization, debugging, and analysis addresses a critical, often-overlooked problem: observability.

As robotics systems become more complex, understanding why a robot failed, or succeeded, becomes exponentially harder. Was it a perception error? A planning bug? A mechanical issue? Without robust tooling, engineers are flying blind. Foxglove’s success in creating an industry-standard platform (including the now-ubiquitous MCAP data format) highlights the maturation of the ecosystem. The industry is moving past the point where every team builds its own fragmented, homegrown tools, and toward a shared infrastructure layer.

The award recognized that Foxglove has transitioned from a “helpful tool to the default observability layer for device development.” This is the kind of “boring” but essential infrastructure that enables the entire industry to move faster. It’s a sign that we’re building a real industry, not just a collection of one-off projects.

Part II: From Prototype to Product

The most significant theme of the night was the industry’s collective journey across the chasm from a working prototype to a scalable product. This is where the rubber meets the road or, more accurately, where the robot gripper meets the customer’s package.

The 100,000-Tote Milestone

This transition was perfectly crystallized by the Pioneer Award given to Agility Robotics. The award wasn’t for a new acrobatic demo, but for a hard-won, operational metric: their bipedal robot, Digit, has successfully moved over 100,000 totes in live GXO fulfillment centers. This number matters. It represents the line between a science project and a solution, between a pilot and a product.

As the award citation noted, Agility was being recognized for taking humanoids “from viral videos to commercial reality.” Their deep partnerships with logistics giants like Amazon and GXO are not just impressive customer logos; they are proof that bipedal robots can be a scalable, economically viable solution to real-world labor shortages. This achievement, many believe, has unlocked a category that was thought to be a decade away from commercial viability.

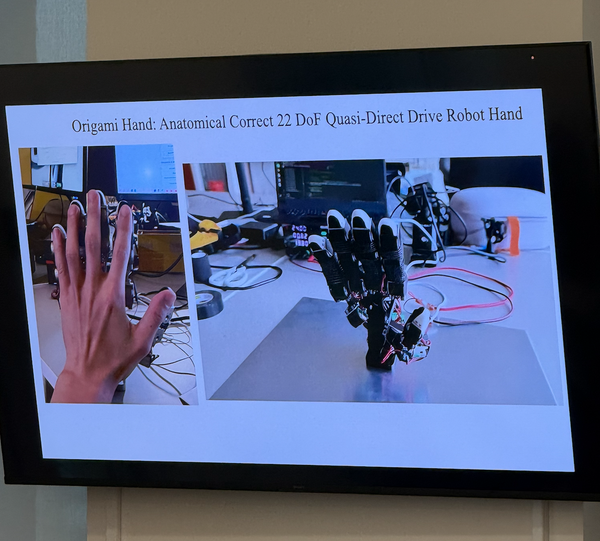

The Locomotion vs. Manipulation Divide

The Agility milestone also highlights a key distinction in the technical landscape: the difference between locomotion and manipulation. As multiple speakers pointed out, locomotion is becoming a largely solved problem. Thanks to years of research and powerful simulation tools like NVIDIA’s Isaac Lab, most advanced robots in the world have learned to walk, run, and navigate complex terrains with remarkable agility.

Manipulation, however, is another story. While robots are proficient at simple pick-and-place tasks in structured environments, fine-grained manipulation (the kind of dexterity humans use to assemble a product, sort a variety of objects, or handle a delicate item) remains a grand challenge. One investor described the current state of the art as “Level 3 manipulation,” capable of gross movements in forgiving environments like a warehouse, but far from the human-level dexterity required for more complex tasks.

The consensus in the room was that true, generalizable manipulation is likely still a decade away. The path forward involves starting with simpler manipulation tasks in more constrained environments and gradually increasing complexity. The dream of a robot that can tidy a child’s room is still on the horizon, but the robot that can move a standardized box from a shelf to a conveyor belt is here today.

Part III: The Capital Conversation

As the technology matures, the nature of the investment conversation is changing with it. The panels and sideline discussions revealed a venture community that is both excited by the opportunity and sober about the challenges.

The ROI Imperative: Months, Not Years

If there was one point of universal agreement, it was that customer ROI must be measured in months, not years. The days of selling a multi-year payback period are over. The robotics solution needs to be a “no-brainer” for the customer, with a payback so rapid that the decision to purchase is easy. This forces an intense focus on high-value applications where the economic impact is immediate and quantifiable.

This also puts a premium on utilization. A robot that performs a single task for only an hour a day will struggle to justify its cost. The most successful products are those that are flexible enough to be deployed across multiple tasks and shifts, maximizing their value. This is why software and hardware must be developed in tandem: the intelligence of the software is what unlocks the full potential of the hardware.
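The link between utilization and payback can be shown with some back-of-the-envelope arithmetic. The sketch below is a simplified model under stated assumptions, not a figure from the summit: the function name, the cost inputs, and every dollar amount in the example are hypothetical, and it ignores financing, downtime, and integration costs.

```python
def payback_months(
    robot_cost: float,
    monthly_operating_cost: float,
    hourly_labor_value: float,
    hours_per_day: float,
    days_per_month: int = 30,
) -> float:
    """Months until net monthly savings cover the upfront cost.

    Returns infinity when the robot never pays for itself.
    """
    monthly_value = hourly_labor_value * hours_per_day * days_per_month
    net_monthly_savings = monthly_value - monthly_operating_cost
    if net_monthly_savings <= 0:
        return float("inf")
    return robot_cost / net_monthly_savings


# Hypothetical numbers: a $150,000 robot, $1,000/month to run,
# replacing work valued at $30/hour.
one_hour_a_day = payback_months(150_000, 1_000, 30, hours_per_day=1)
two_shifts = payback_months(150_000, 1_000, 30, hours_per_day=16)
```

With these made-up inputs, the robot used one hour a day never recovers its cost, because its monthly value ($900) is below its operating cost, while the same robot running two shifts pays back in a little over 11 months. The hardware is identical in both cases; only the utilization changes.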

The Domain Specificity Paradox

This led to one of the most interesting paradoxes of the evening: the tension between the allure of the general-purpose humanoid and the profitability of the narrow-domain workhorse. One VC astutely observed that general-purpose robots capture the imagination (and a disproportionate share of funding) because they align with our sci-fi visions of the future. The potential outcome is massive, so everyone wants to back the winner.

However, the real, tangible wins today are often in hyper-specific, unglamorous niches. The example given was Gecko Robotics, a company whose robots crawl up and down industrial tanks to perform inspections, replacing a dangerous job that involved humans dangling from ropes.

The advice to founders was unanimous: be “very choosy about the domain you initially go into.” The path to the general-purpose “Rosie the Robot” runs through a series of very specific, profitable, industrial applications first.

Part IV: The Road Ahead

As the evening drew to a close, a clear consensus emerged. The physical AI industry is at a crucial inflection point. The question is no longer if intelligent robots will become a major part of our economy, but how and when. The next 24 months are expected to be a period of rapid acceleration, as the foundational infrastructure matures and more companies cross the chasm from prototype to product.

The closing remarks from Nebius’s Head of Global Storage Ecosystem, Mona Li, captured the spirit of the evening perfectly. She spoke of her vision for a retirement where she would have ten robots: some serving food, some cleaning the house, and the rest playing poker with her. It was a lighthearted image, but it spoke to the profound, long-term ambition that fuels this industry.

The summit was a powerful reminder that building the future is a marathon, not a sprint. It requires a community of builders, researchers, and investors who are willing to tackle hard problems, who are sober about the challenges, and who are unshakably optimistic about the future. The robots are, slowly but surely, clocking in for work.

Speakers

- Marc Boroditsky, Nebius, Chief Revenue Officer

- Amit Goel, NVIDIA Head of Robotics and Edge Computing

- Evan Helda, Nebius Head of Physical AI

- Jonathan Hurst, Agility Robotics Co-founder

- Robert Nishihara, Anyscale Co-founder and CEO

- Lindon Gao, Dyna Robotics CEO

- Adrian Macneil, Foxglove CEO

- Charles Wong, Bifrost AI CEO

- Kevin Black, Physical Intelligence, Member of Technical Staff

- Raj Mirpuri, NVIDIA VP Enterprise & Cloud Sales

- Daniel Bounds, Nebius, Chief Marketing Officer

- Christine Qing, Shanda Grab Ventures, Partner

- Daniel Mulet, Radical Ventures, Partner

- Colton Dempsey, N47, Partner

- Nan Zhou, Director at Qualcomm Ventures

- Nikolas Ciminelli, Principal at Khosla Ventures

- Anu Maheshwari, Head of Venture Partnerships at Nebius

- Richard Liaw, Founding Engineer at Anyscale

- Kevin McNamara, Founder and CEO at Parallel Domain

Written by Bogdan Cristei & Manus AI | Wed Dec 10 2025