DeepTech Pulse Vol. 2: The Convergence Accelerates

Executive Summary: As we enter the second half of 2025, the artificial intelligence and robotics landscape is experiencing unprecedented convergence. From China's world-first subway delivery robots to North Carolina State's breakthrough in autonomous materials discovery, the boundaries between digital intelligence and physical capability are dissolving at an accelerating pace. This issue examines the critical developments shaping our transition from software-first AI to embodied intelligence, the hidden technical debt accumulating in AI systems, and the emerging paradigm of morphology-agnostic robotics that promises to redefine how we think about machine intelligence.

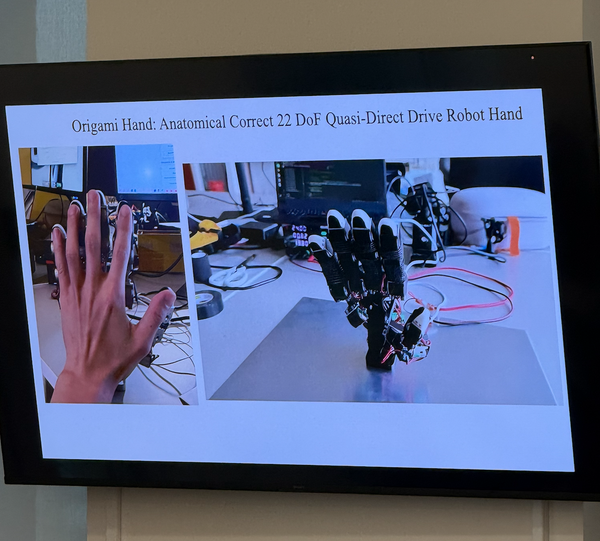

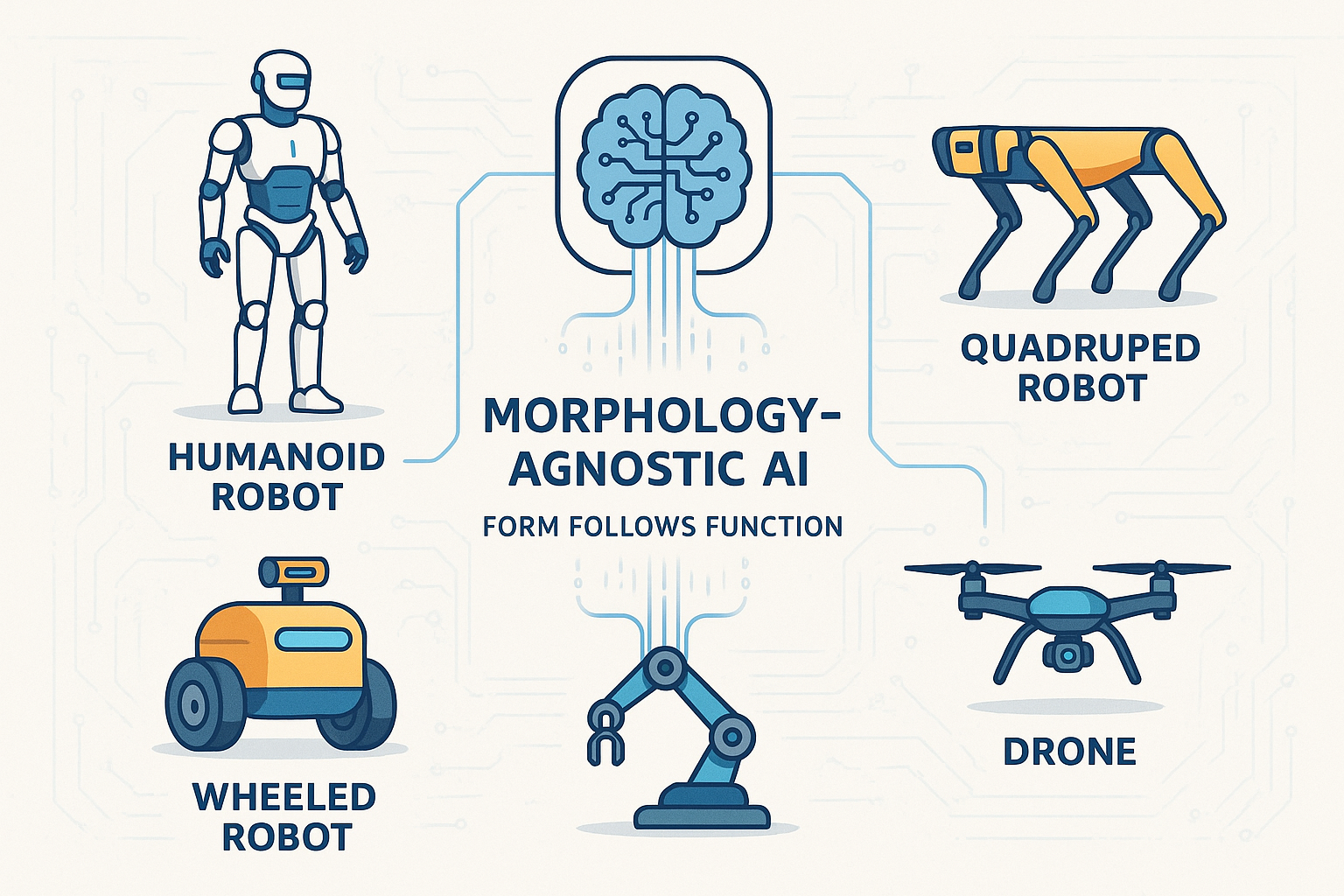

The Morphology-Agnostic Revolution: Why Form No Longer Follows Fiction

The robotics industry stands at a philosophical crossroads. While billions of dollars flow into humanoid robot development—from Tesla's Optimus to the dozens of bipedal "universal workers" emerging from companies like Sanctuary AI, Figure, and Agility—a fundamental question emerges: Are we building the future, or merely recreating our past? [1]

Deena Shakir of Lux Capital presents a compelling counterargument in her recent analysis of embodied intelligence, drawing an unexpected parallel from evolutionary biology. The phenomenon of carcinization—where unrelated species independently evolve crab-like forms—offers a profound lesson for robotics. Just as nature doesn't converge on a single "optimal" form but rather adapts to specific environmental pressures, the future of robotics may lie not in human mimicry but in task-specific optimization. [1]

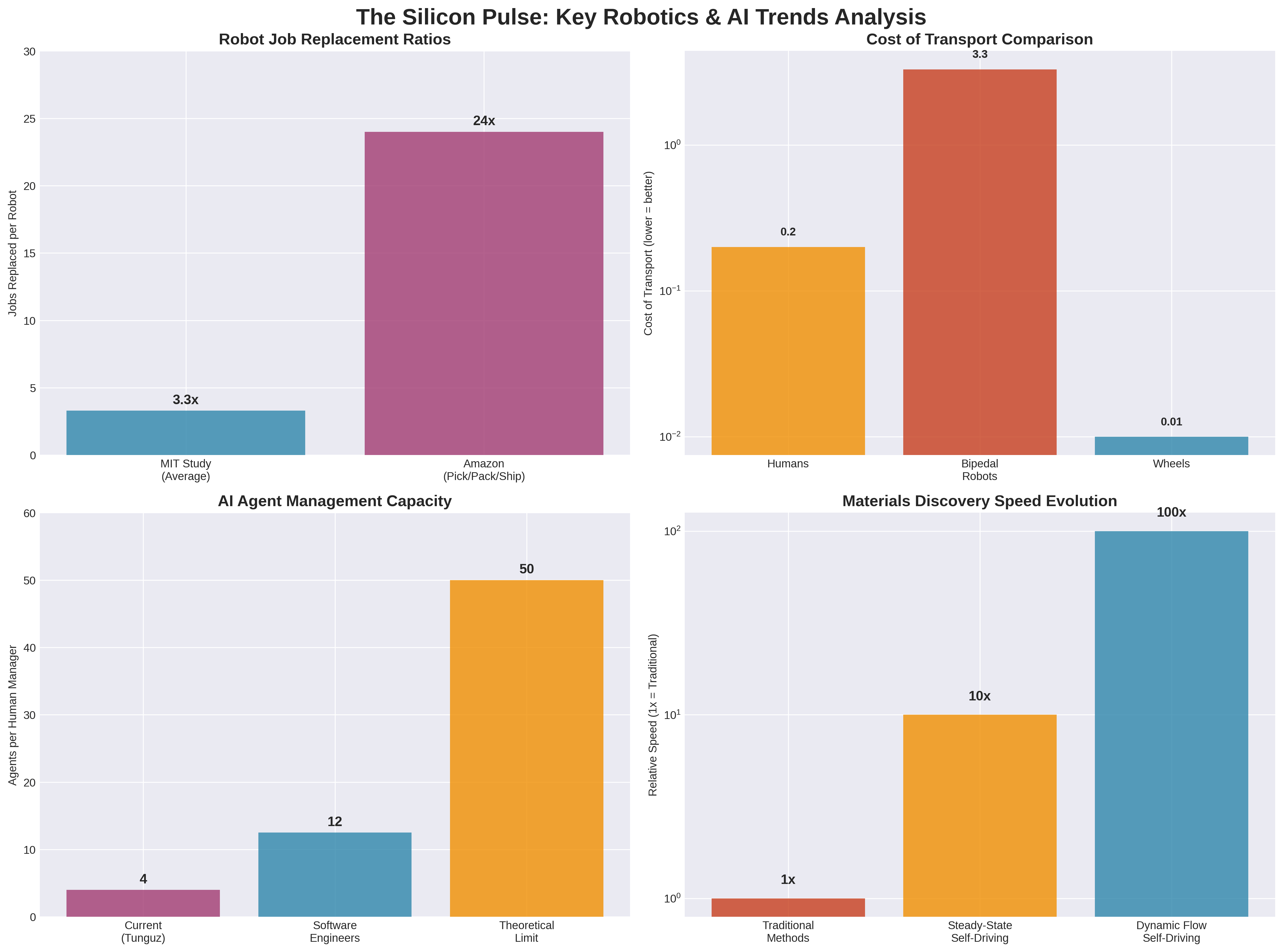

The data supporting this thesis is striking. Bipedal robots demonstrate a cost of transport of around 3.3, making them more than fifteen times less energy-efficient than humans (who achieve approximately 0.2) and up to three hundred times less efficient than wheeled alternatives. [1] This isn't merely an engineering challenge; it represents a fundamental misallocation of resources toward solving problems that may not need solving.
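Cost of transport (COT) is a dimensionless measure: energy spent divided by body weight times distance traveled. The short Python sketch below shows how the cited figures relate to one another. The 70 kg mass and 1 km distance are illustrative assumptions, and the wheeled value is only what the "three hundred times" claim implies, not a measured number.

```python
G = 9.81  # standard gravity, m/s^2


def cost_of_transport(energy_j: float, mass_kg: float, distance_m: float) -> float:
    """Dimensionless cost of transport: energy / (weight * distance)."""
    return energy_j / (mass_kg * G * distance_m)


BIPED_COT = 3.3  # figure cited above for bipedal robots
HUMAN_COT = 0.2  # figure cited above for human walking

# How many times more energy per unit weight and distance a biped spends than a human.
biped_vs_human = BIPED_COT / HUMAN_COT  # about 16.5

# Wheeled COT implied by "up to three hundred times less efficient".
implied_wheeled_cot = BIPED_COT / 300  # about 0.011

# Illustrative check: energy a hypothetical 70 kg walker at COT 0.2 spends over 1 km.
walker_energy_j = HUMAN_COT * 70 * G * 1_000
```

Since 3.3 / 0.2 is 16.5, the "fifteen times" framing is, if anything, a conservative rounding.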

Consider the Star Wars analogy that Shakir employs: C-3PO, the humanoid protocol droid, consistently fails under pressure, while R2-D2—non-anthropomorphic and utilitarian—repairs starships under fire, disables battle droids, hacks enemy systems, and delivers crucial intelligence. This fictional contrast reflects a very real tension in today's robotics boom. [1]

Boston Dynamics provides perhaps the most instructive real-world example. Despite decades of development and viral videos showcasing Atlas's gymnastic capabilities, the company's actual revenue comes from Stretch (a wheeled industrial robot) and Spot (a four-legged inspection robot), with over 1,500 Spot units deployed in the field. Atlas, meanwhile, has yet to be commercialized. [1] The market has spoken: utility trumps anatomy.

The implications extend beyond mere efficiency metrics. The "humanoid fallacy," as Shakir terms it, represents "the assumption that because our environments are human-centric, the most effective machines must be human-shaped." [1] This logic crumbles under scrutiny. Computers bear no resemblance to humans yet demonstrate billions of times greater capability in memory, logic, and computation. Airplanes don't flap wings like birds; submarines don't swim the breaststroke. As Shakir notes, "Productivity doesn't demand mimicry; exponential innovation perhaps precludes it." [1]

The morphology-agnostic approach championed by companies like Physical Intelligence represents a paradigm shift toward intelligence that generalizes across forms, learns across modalities, and acts with agility regardless of physical embodiment. [1] This approach acknowledges that embodiment matters—but conformity to human form does not.

The economic implications are profound. Rather than forcing intelligence into human-shaped constraints, morphology-agnostic systems can optimize for specific tasks: wheeled bases for stability and energy efficiency, specialized manipulators for precision tasks, and form factors designed for their operational environment rather than human aesthetic preferences.

This shift represents more than technological evolution—it signals a maturation of the robotics industry beyond anthropocentric assumptions toward functional optimization. As we witness the deployment of penguin-like delivery robots in Chinese subways and the success of non-humanoid industrial systems, the evidence increasingly supports a future where form truly follows function, not fiction.

From Laboratory to Subway Platform: Real-World Robotics Deployment Accelerates

The transition from research prototype to operational deployment reached a milestone in July 2025 with China's launch of the world's first subway-based robotic delivery system. In Shenzhen, forty-one autonomous delivery robots now commute alongside human passengers, boarding trains during off-peak hours to restock over one hundred 7-Eleven stores throughout the city's vast metro network. [2]

These meter-tall machines, operated by VX Logistics (a Vanke unit partly owned by Shenzhen Metro), embody the morphology-agnostic principle in practice. Rather than attempting human-like navigation, they feature specialized chassis systems designed specifically for urban transit challenges: crossing platform gaps, navigating elevators, and maneuvering through train doors with clinical precision. [2] Their penguin-like appearance, complete with LED faces displaying friendly expressions, demonstrates how functional design can incorporate human-friendly elements without sacrificing operational efficiency.

The technical sophistication underlying this deployment is remarkable. Each robot employs AI-powered scheduling systems and multi-sensor navigation to plan optimal delivery routes while avoiding pedestrians and adapting to shifting train schedules. [2] The system represents a practical solution to a long-standing logistics challenge—previously, human workers had to park above ground, unload goods, and manually push trolleys down to subway platforms. As Li Yanyan, a manager at one participating 7-Eleven, observed: "Now, with robots, it's much easier and more convenient." [2]
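The reporting does not describe VX Logistics' scheduling software. As a hedged illustration of one small piece of the problem, the sketch below picks the earliest train a robot can board that departs inside an off-peak window. The off-peak windows and timetable values are hypothetical; when a train's schedule shifts, the robot would simply re-run the lookup against the updated timetable.

```python
from bisect import bisect_left
from typing import List, Optional

# Hypothetical off-peak windows, in minutes after midnight: 10:00-16:00 and 20:00-23:00.
OFF_PEAK_WINDOWS = [(10 * 60, 16 * 60), (20 * 60, 23 * 60)]


def is_off_peak(minute: int) -> bool:
    """True if a departure time falls inside any off-peak window (end exclusive)."""
    return any(start <= minute < end for start, end in OFF_PEAK_WINDOWS)


def next_boardable_train(departures: List[int], ready_at: int) -> Optional[int]:
    """Earliest departure at or after ready_at that is off-peak.

    departures must be sorted ascending (minutes after midnight).
    Returns None if no suitable train remains today.
    """
    i = bisect_left(departures, ready_at)  # skip trains that have already left
    for departure in departures[i:]:
        if is_off_peak(departure):
            return departure
    return None
```

For example, a robot ready at 9:45 facing departures at 9:50, 10:05, and 10:20 would wait for the 10:05 train, because 9:50 still falls in the peak period under these assumed windows.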

This deployment occurs within the broader context of Shenzhen's "Embodied Intelligent Robot Action Plan," released in March 2025, which targets large-scale adoption of service and industrial robots by 2027. [2] The city, home to more than 1,600 robotics companies, serves as a national testbed for automation, with officials explicitly focused on transitioning machines from enclosed factory environments to public-facing roles.

The strategic implications extend beyond logistics efficiency. China's rapidly aging population has driven explicit policy support for humanoid robots in elderly care, with projects like Ant Group's Ant Lingbo developing systems capable of patient transfer, room maintenance, and package delivery. [2] Beijing officials have identified humanoid robots for "dangerous, monotonous, or specialized jobs" including deep-sea inspections and space exploration, positioning automation as a productivity multiplier rather than a job displacement mechanism. [2]

Meanwhile, in North Carolina, researchers have achieved a different but equally significant breakthrough in autonomous systems. Engineers at North Carolina State University have developed a robotic laboratory system capable of running experiments independently, gathering data every half second to accelerate materials discovery by a factor of ten compared to traditional methods. [3] This system could potentially compress years of human research into days, with applications spanning clean energy, electronics, and sustainable chemistry.

The North Carolina State breakthrough, led by Professor Milad Abolhasani, represents a fundamental shift from steady-state to dynamic flow experiments. [3] Rather than waiting up to an hour for each experiment to complete, the system continuously varies chemical mixtures while monitoring reactions in real-time. As Abolhasani explains: "Instead of having one data point about what the experiment produces after 10 seconds of reaction time, we have 20 data points—one after 0.5 seconds of reaction time, one after 1 second of reaction time, and so on. It's like switching from a single snapshot to a full movie of the reaction as it happens." [3]
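Abolhasani's arithmetic is easy to reproduce: sampling every 0.5 seconds across a 10-second reaction window yields 20 observations where a steady-state run yields one. The helper below is an illustrative sketch of that sampling schedule, not the NC State team's code.

```python
from typing import List


def sample_times(duration_s: float, interval_s: float) -> List[float]:
    """Reaction times (seconds) at which a measurement is taken, ending at duration_s."""
    n = round(duration_s / interval_s)
    return [interval_s * k for k in range(1, n + 1)]


# Steady-state: a single measurement once the 10 s reaction is complete.
steady_state = sample_times(10.0, 10.0)

# Dynamic flow: a measurement every 0.5 s across the same 10 s window.
dynamic_flow = sample_times(10.0, 0.5)
```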

The implications for materials science are profound. The system identified optimal material candidates on the first attempt after training, while dramatically reducing chemical consumption and waste generation. [3] Published in Nature Chemical Engineering, this work demonstrates how autonomous systems can accelerate scientific discovery while advancing sustainability—a critical consideration as research institutions face increasing pressure to minimize environmental impact.

Elsewhere in the United States, former Waymo engineers have emerged from stealth with Bedrock Robotics, securing $80 million to retrofit construction vehicles with autonomous driving capabilities. [4] This development signals the expansion of autonomous systems beyond traditional automotive applications into heavy industry, where the combination of structured environments and high-value use cases creates favorable conditions for deployment.

These developments collectively illustrate a maturation of robotics deployment strategies. Rather than pursuing general-purpose humanoid systems, successful implementations focus on specific operational contexts: urban logistics, scientific research, and industrial applications. Each deployment demonstrates how task-specific optimization, rather than human mimicry, drives practical value creation.

The pattern emerging across these implementations suggests a future where autonomous systems proliferate through targeted applications rather than universal replacement of human workers. The penguin-like delivery robots succeed not by resembling humans but by optimizing for subway navigation. The autonomous laboratory succeeds not by replicating human researchers but by exceeding human data collection capabilities. This functional approach to robotics deployment may prove more sustainable and economically viable than the humanoid-focused alternatives currently attracting venture capital attention.

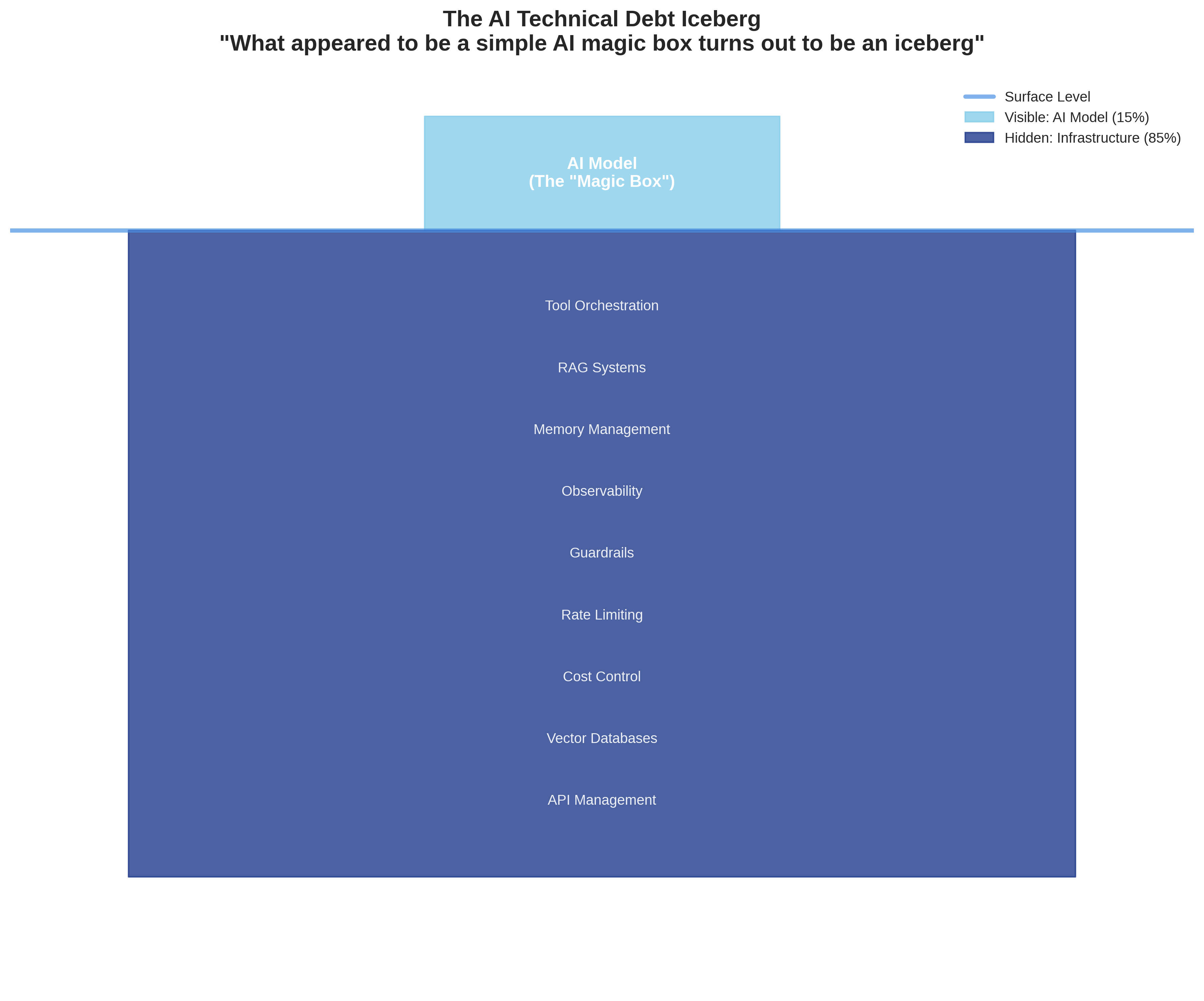

The AI Iceberg: Confronting Hidden Technical Debt in Production Systems

The promise of large language models was elegant simplicity: drop in an AI system and watch it handle everything from customer service to code generation, eliminating complex pipelines and brittle integrations. [5] Yet as organizations deploy AI systems at scale, a familiar pattern emerges—one that echoes Google's prescient 2015 paper on hidden technical debt in machine learning systems. The visible AI model represents merely the tip of an iceberg, with the vast majority of engineering effort submerged beneath the surface in infrastructure, data management, and operational complexity.

Tomasz Tunguz's analysis of AI technical debt reveals how the "AI magic box" myth has obscured the true cost of production deployment. [5] While the machine learning code itself remains a small component, the surrounding infrastructure has expanded dramatically. Modern AI systems require extensive tool orchestration, cost control mechanisms, memory management, observability frameworks, and guardrail systems—each adding layers of complexity that dwarf the original model.

The phenomenon Tunguz terms "Hungry, Hungry AI" illustrates a core challenge: AI agents require extensive context to function effectively, but input costs scale dramatically with context size. [5] This creates pressure to develop deterministic software that can replace AI reasoning for routine tasks. For example, automating email management necessitates building tools for CRM integration and task creation—precisely the type of brittle integration that AI was supposed to eliminate.
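Per-call input cost grows linearly with context size, but an agent loop that re-sends its whole history on every turn accumulates input tokens roughly quadratically in the number of turns. The sketch below assumes no prompt caching, a fixed number of new tokens per turn, and a hypothetical price; real pricing varies by provider and model.

```python
def call_input_cost(context_tokens: int, usd_per_million_tokens: float) -> float:
    """Input cost of a single model call at a flat per-token price."""
    return context_tokens * usd_per_million_tokens / 1_000_000


def loop_input_tokens(base_tokens: int, added_per_turn: int, turns: int) -> int:
    """Total input tokens when each turn re-sends the base prompt plus all prior turns."""
    return sum(base_tokens + added_per_turn * t for t in range(turns))


# Hypothetical numbers: 2,000-token system prompt, 1,500 tokens of new context per turn.
PRICE = 3.0  # hypothetical USD per million input tokens
for turns in (5, 10, 20):
    tokens = loop_input_tokens(2_000, 1_500, turns)
    print(turns, tokens, round(call_input_cost(tokens, PRICE), 4))
```

Under these assumptions, doubling the loop from 10 to 20 turns raises total input tokens from 87,500 to 325,000, almost 3.7 times as many.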

Tool orchestration presents another scaling challenge. While AI systems can effectively manage up to ten or fifteen tools, beyond this threshold, tool selection itself becomes a machine learning problem requiring additional models. [5] This recursive complexity mirrors the challenges that plagued earlier generations of ML systems, where solutions to one problem created new problems requiring additional solutions.
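One common way to keep a model under that ten-to-fifteen-tool threshold is to shortlist candidate tools before the model sees them. The sketch below uses plain word overlap between the request and each tool's description; a production router would more likely use embeddings or a trained classifier, which is exactly the additional machine learning the paragraph describes. The tool names and descriptions are hypothetical.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set used for crude lexical matching."""
    return set(re.findall(r"[a-z]+", text.lower()))


def shortlist_tools(request: str, tools: Dict[str, str], k: int = 10) -> List[str]:
    """Return up to k tool names ranked by word overlap with the request.

    Ties are broken alphabetically so the ordering is deterministic.
    """
    words = tokenize(request)
    ranked = sorted(tools, key=lambda name: (-len(words & tokenize(tools[name])), name))
    return ranked[:k]


# Hypothetical tool registry: name -> description shown to the model.
TOOLS = {
    "create_task": "create a task in the task tracker",
    "search_crm": "look up a contact record in the crm",
    "send_email": "send an email message to a recipient",
}
```

Only the shortlisted tools would then be passed to the model, keeping its choice set small regardless of how large the registry grows.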

The infrastructure requirements extend far beyond tool management. Production AI systems demand sophisticated observability to monitor performance, evaluation frameworks to assess output quality, and routing mechanisms to direct queries to appropriate models. [5] Rate limiting becomes essential to prevent cost spirals when systems malfunction, while guardrails must prevent inappropriate responses across diverse use cases.
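Rate limiting is often implemented as a token bucket: each request spends tokens, and tokens refill at a fixed rate up to a cap, so short bursts are allowed while sustained overuse is refused. The class below is a generic sketch of that technique, not any particular vendor's limiter; the rate, capacity, and per-request cost are parameters one would tune, for example by charging each call its estimated dollar cost.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket limiter: allows bursts up to `capacity`, refills at `rate` per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.clock = clock      # injectable so tests can control time
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available and return True; otherwise reject."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A caller would check `bucket.allow(estimated_cost)` before each model call and defer or fail the request when it returns False, which caps spending even if a malfunctioning agent loops.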

Information retrieval through Retrieval-Augmented Generation (RAG) has become fundamental to production systems. Tunguz's email application employs a LanceDB vector database to match sender patterns and tone preferences—a requirement that extends across virtually all production AI applications. [5] The ecosystem has evolved to include graph RAG techniques and specialized vector databases, each adding operational overhead.
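At its core, the retrieval step in RAG ranks stored items by vector similarity to the query. The pure-Python sketch below shows that ranking with cosine similarity over toy two-dimensional vectors; it does not use LanceDB's API. In practice the vectors come from an embedding model, and a vector database performs the same kind of search at scale using approximate indexes. The example entries are hypothetical.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: List[float], index: List[Tuple[str, List[float]]], k: int = 3) -> List[str]:
    """Return the texts of the k stored items most similar to the query vector."""
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Hypothetical stored examples of past emails, with toy 2-D "embeddings".
INDEX = [
    ("formal reply to an investor", [1.0, 0.0]),
    ("casual reply to a friend", [0.0, 1.0]),
    ("friendly note to a customer", [0.7, 0.7]),
]
```

The retrieved texts would then be inserted into the prompt so the model can match the sender's usual tone.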

Memory management represents another critical infrastructure component. Modern AI command-line interfaces save conversation history as markdown files, while systems like Tunguz's chart generation tool maintain cascading directories of .gemini and .claude files containing style preferences, fonts, colors, and formatting specifications. [5] This persistent state management, while essential for user experience, requires sophisticated data management and synchronization mechanisms.
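The cascading behavior can be sketched as a lookup that walks from the working directory up to a project root, merging settings so that files closer to the working directory override those higher up. The file name `style.json` and its JSON format are hypothetical placeholders, not the actual file layout of any particular AI command-line tool.

```python
import json
from pathlib import Path

STYLE_FILE = "style.json"  # hypothetical per-directory settings file


def load_cascading_style(start: Path, root: Path) -> dict:
    """Merge style files from root down to start; nearer directories win.

    Directories above root are ignored. Returns {} if start is not inside root.
    """
    start, root = start.resolve(), root.resolve()
    # start, its parent, its grandparent, ... limited to root and below.
    chain = [d for d in [start, *start.parents] if d == root or root in d.parents]
    merged: dict = {}
    for directory in reversed(chain):  # apply root first, start last
        candidate = directory / STYLE_FILE
        if candidate.is_file():
            merged.update(json.loads(candidate.read_text()))
    return merged
```

With a root file setting `{"font": "Inter", "color": "blue"}` and a `charts/` subdirectory file setting `{"color": "red"}`, a chart generated from `charts/` would use Inter in red. The synchronization problem the paragraph mentions arises because every one of these files is state that must be kept consistent.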

The parallel to Google's 2015 analysis is striking. Just as traditional machine learning systems revealed that the actual ML code comprised a tiny fraction of the total system, modern AI deployments demonstrate that the language model itself represents a small component of the overall infrastructure. [5] The "simple AI magic box" has proven to be "an iceberg, with most of the engineering work hidden beneath the surface."

This technical debt accumulation has profound implications for AI adoption strategies. Organizations expecting plug-and-play AI solutions instead encounter complex engineering projects requiring significant infrastructure investment. The hidden costs include not only initial development but ongoing maintenance of observability systems, cost optimization, security frameworks, and integration layers.

The emergence of Forward Deployed Engineers (FDEs) as a customer success model reflects this complexity. [6] Companies like OpenAI, Anthropic, and Palantir have recognized that successful AI deployment requires extensive customization and integration work. These engineers spend their time understanding business challenges and molding AI platforms to specific use cases—a far cry from the simple API integration originally envisioned.

The economic implications are significant. FDE-supported deployments typically require contract values exceeding $100,000 to justify the $200,000 annual cost of specialized engineering support. [6] This threshold effectively limits sophisticated AI deployments to enterprise customers, potentially slowing adoption among smaller organizations that lack the resources for extensive customization.

The technical debt phenomenon may also help explain the productivity paradox observed in AI coding tools. In a METR study of experienced open-source developers, participants believed AI assistance had made them about 20% faster, yet they actually took 19% longer to complete tasks when using these tools. [7] The cognitive overhead of managing AI interactions, reviewing outputs, and correcting errors can exceed the time savings from automated code generation.

Sam Altman's observation that "$10,000 worth of knowledge work a year ago now costs $1 or 10 cents" [8] captures the dramatic cost reduction in AI capabilities. However, this metric focuses solely on the model inference cost, ignoring the substantial infrastructure investment required to deliver that capability reliably in production environments.

The pattern suggests that successful AI deployment requires a more nuanced approach than simple model integration. Organizations must budget for significant infrastructure development, ongoing operational overhead, and specialized engineering talent. The companies that recognize and plan for this hidden complexity will likely achieve more sustainable AI implementations than those expecting plug-and-play solutions.

As the AI industry matures, acknowledging and addressing technical debt will become increasingly critical. The organizations that invest in robust infrastructure, comprehensive observability, and sustainable operational practices will be better positioned to capture the long-term value of AI systems while avoiding the pitfalls of technical debt accumulation.

The Rise of Agent Managers: Redefining Human-AI Collaboration

As artificial intelligence capabilities mature, a new professional category emerges: the agent manager. These individuals coordinate teams of AI agents, orchestrating their activities to achieve complex objectives that exceed the capabilities of any single system. The role represents a fundamental shift in human-computer interaction, from direct tool operation to supervisory coordination of autonomous systems. [9]

Tomasz Tunguz's analysis of agent management reveals the current limitations and future potential of this emerging discipline. Most practitioners, including Tunguz himself, struggle to manage more than four AI agents simultaneously. [9] The cognitive overhead is substantial: agents request clarification, seek permission for actions, issue web searches, and require constant attention. Task completion times vary unpredictably from thirty seconds to thirty minutes, while maintaining awareness of multiple concurrent activities proves challenging.

The comparison to physical robotics provides instructive context. MIT's 2020 analysis suggested that the average robot replaced 3.3 human jobs, while Amazon's 2024 pick-pack-ship robots achieved a replacement ratio of 24 workers per robot. [9] This dramatic improvement in robot productivity over just four years suggests similar potential for AI agent management, albeit with important distinctions.

Unlike physical robots, AI agents exhibit non-deterministic behavior. They interpret instructions, improvise solutions, and occasionally ignore directions entirely. [9] As Tunguz observes, "A Roomba can only dream of the creative freedom to ignore your living room & decide the garage needs attention instead." This unpredictability fundamentally changes the management dynamic compared to traditional automation.

Management theory typically recommends a span of control around seven people for human teams. [9] However, early evidence suggests that AI agent management may scale differently. Productive AI software engineers report managing 10-15 agents by specifying detailed tasks, dispatching them to AI systems, and reviewing completed work. [9] Approximately half of the output requires revision and resubmission with improved prompts, indicating that agent management involves significant quality control overhead.
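The review overhead compounds. If each submission independently needs revision with probability p, the number of submissions per finished task follows a geometric distribution with mean 1 / (1 - p), so a 50% revision rate means two submissions per task on average. The independence assumption is ours, not the source's; improved prompts probably lower the revision rate on resubmission, so treat the result as a rough upper estimate.

```python
def expected_submissions(revision_rate: float) -> float:
    """Mean submissions per accepted task, assuming each attempt independently
    needs revision with probability revision_rate (geometric distribution)."""
    if not 0.0 <= revision_rate < 1.0:
        raise ValueError("revision_rate must be in [0, 1)")
    return 1.0 / (1.0 - revision_rate)


def expected_reviews(agents: int, tasks_per_agent: int, revision_rate: float) -> float:
    """Expected review passes a manager performs for one round of dispatched work."""
    return agents * tasks_per_agent * expected_submissions(revision_rate)
```

For instance, ten agents each handling three tasks (hypothetical workload figures) at a 50% revision rate imply about 60 review passes, double the 30 a perfect first-pass rate would require.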

The emergence of agent inbox tools represents a critical infrastructure development for scaling agent management. [9] These project management systems allow users to request AI work, track progress, and evaluate results—similar to how GitHub pull requests or Linear tickets organize software development workflows. While not yet broadly available, such tools appear essential for managing larger agent teams effectively.
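No standard agent inbox exists yet, so the following is a hypothetical data model rather than a description of any product. It tracks each request through a small state machine (requested, running, awaiting review, done) and lets the reviewer send work back with a revised prompt, mirroring the request, track, and evaluate loop described above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    REQUESTED = "requested"
    RUNNING = "running"
    NEEDS_REVIEW = "needs_review"
    DONE = "done"


# Legal transitions: a reviewer either accepts work or sends it back for another attempt.
ALLOWED = {
    Status.REQUESTED: {Status.RUNNING},
    Status.RUNNING: {Status.NEEDS_REVIEW},
    Status.NEEDS_REVIEW: {Status.DONE, Status.REQUESTED},
    Status.DONE: set(),
}


@dataclass
class AgentTask:
    prompt: str
    status: Status = Status.REQUESTED
    attempts: int = 0  # how many times an agent has started work on this task

    def move(self, new_status: Status) -> None:
        """Advance the task, rejecting transitions the workflow does not allow."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot move from {self.status.value} to {new_status.value}")
        if new_status is Status.RUNNING:
            self.attempts += 1
        self.status = new_status


@dataclass
class AgentInbox:
    tasks: List[AgentTask] = field(default_factory=list)

    def request(self, prompt: str) -> AgentTask:
        """Queue a new piece of work for an agent."""
        task = AgentTask(prompt)
        self.tasks.append(task)
        return task

    def send_back(self, task: AgentTask, revised_prompt: str) -> None:
        """Reject reviewed work and queue it again with an improved prompt."""
        task.move(Status.REQUESTED)
        task.prompt = revised_prompt

    def awaiting_review(self) -> List[AgentTask]:
        """Tasks whose output is waiting for the human manager."""
        return [t for t in self.tasks if t.status is Status.NEEDS_REVIEW]
```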

The productivity implications are profound. If ARR per employee serves as a vanity metric for startups, "agents managed per person" may become the equivalent productivity metric for knowledge workers. [9] The question facing organizations is not whether this transition will occur, but how quickly they can develop the infrastructure and skills to capitalize on it.

The economic transformation extends beyond individual productivity to fundamental business model changes. The Forward Deployed Engineer (FDE) model, pioneered by Palantir and now adopted by OpenAI, Anthropic, and other AI companies, represents a new approach to customer success in rapidly evolving technology markets. [6] These engineers serve as hybrid customer success managers and solutions architects, working directly with clients to understand business challenges and configure AI systems accordingly.

The FDE model emerges from the unique characteristics of AI markets. Model capabilities improve roughly tenfold every two years, while buyers struggle to understand optimal processes for leveraging these advances. [6] Traditional software sales processes, optimized for stable products with well-understood workflows, prove inadequate for technologies where the underlying capabilities change dramatically between sales cycles.
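Assuming the tenfold-every-two-years pace compounds smoothly (a simplification), capability relative to a baseline after t years, and over a hypothetical nine-month enterprise sales cycle, works out as follows:

$$
\frac{C(t)}{C_0} = 10^{t/2}, \qquad \frac{C(1)}{C_0} = \sqrt{10} \approx 3.16, \qquad \frac{C(0.75)}{C_0} = 10^{0.375} \approx 2.37
$$

Under that assumption, the models available when a deal closes could be more than twice as capable as those available when the sales conversation began.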

This dynamic has led to a reinvention of customer success focused on delivering outcomes rather than software features. FDEs take core AI platforms and mold them to specific customer needs, defining new approaches to sales and marketing that blur the lines between engineering and customer success. [6] The model requires contract values exceeding $100,000 to justify the economics, effectively limiting sophisticated AI deployments to enterprise customers.

The agent management paradigm also reflects broader changes in software development and deployment. The traditional assembly line approach to software sales—from SDR to AE to customer success manager—assumed stable products and well-understood buyer needs. [6] AI disrupts both assumptions, requiring more flexible and technically sophisticated customer engagement models.

The implications for workforce development are significant. Future agent managers will need skills combining project management, prompt engineering, quality assurance, and system orchestration. The ability to "vibe code new platforms to deliver success on a basic platform" becomes a requisite capability for customer success teams in an age where customer expectations for value delivery continue to compress. [6]

The transition also raises questions about organizational structure and career progression. If individual contributors can manage dozens of AI agents, traditional management hierarchies may flatten dramatically. The most valuable professionals may be those who can effectively coordinate large numbers of autonomous systems while maintaining quality and alignment with business objectives.

Early indicators suggest that agent management productivity will continue improving rapidly. The combination of better tooling, refined management practices, and more capable AI systems points toward a future where human professionals routinely coordinate hundreds of AI agents. The organizations that develop these capabilities first will likely achieve significant competitive advantages in knowledge work productivity.

The agent manager role represents more than a new job category—it signals a fundamental shift toward human-AI collaboration models that leverage the complementary strengths of both human judgment and artificial intelligence capabilities. As this transition accelerates, the ability to effectively manage AI agents may become as fundamental to professional success as computer literacy proved to be in previous decades.

The Deep Tech Renaissance: From Software-First to Physics-Enabled Innovation

The venture capital landscape is experiencing a fundamental shift as investors recognize the limitations of pure software solutions and embrace the integration of artificial intelligence with physical systems. DCVC's 2025 Deep Tech Opportunities Report frames this transition as an "American Industrial Renaissance," highlighting the convergence of AI capabilities with manufacturing, energy, climate, and biotechnology applications. [10]

This shift reflects a broader recognition that the most significant remaining challenges—climate change, sustainable energy, advanced manufacturing, and biotechnology—require solutions that bridge digital intelligence and physical reality. The software-first era, which dominated the previous two decades of technology investment, is giving way to physics-enabled innovation that leverages AI to solve complex real-world problems.

The market dynamics supporting this transition are compelling. Elad Gil's analysis of AI market crystallization reveals that while certain AI segments have identified clear leaders, the integration of AI with physical systems remains largely unsettled. [11] This creates opportunities for companies that can effectively combine artificial intelligence with domain-specific expertise in manufacturing, materials science, energy systems, and biotechnology.

The deep tech opportunity spans multiple domains, each representing billions of dollars in potential market value. Energy storage and generation technologies, enhanced by AI-driven optimization, promise to accelerate the transition to sustainable power systems. Manufacturing applications leverage AI for quality control, predictive maintenance, and process optimization, while climate technologies employ machine learning for carbon capture, emissions monitoring, and environmental remediation.

Biotechnology represents perhaps the most promising intersection of AI and physical systems. The combination of machine learning with laboratory automation, as demonstrated by North Carolina State's breakthrough in materials discovery, suggests similar potential for drug discovery, protein engineering, and synthetic biology applications. [3] The ability to accelerate experimental cycles from years to days could fundamentally transform pharmaceutical development timelines and costs.

The investment implications are significant. Traditional software ventures typically required minimal capital expenditure and could scale through pure digital distribution. Deep tech companies, by contrast, require substantial upfront investment in research and development, specialized equipment, and regulatory approval processes. However, successful deep tech ventures often achieve more defensible market positions through intellectual property, regulatory moats, and capital intensity barriers that discourage competition.

The talent requirements for deep tech ventures differ markedly from software-focused companies. Success requires teams that combine AI expertise with deep domain knowledge in physics, chemistry, biology, or engineering. This interdisciplinary requirement creates both opportunities and challenges for talent acquisition, as companies compete for professionals who understand both artificial intelligence and specific technical domains.

The regulatory environment also shapes deep tech opportunities differently than pure software applications. While software companies often operated in regulatory gray areas until achieving scale, deep tech ventures frequently require regulatory approval before market entry. This dynamic favors companies with strong regulatory expertise and sufficient capital to navigate approval processes, but also creates more predictable competitive landscapes once approval is achieved.

The geographic distribution of deep tech opportunities reflects the concentration of research institutions, manufacturing capabilities, and regulatory expertise. The United States maintains advantages in AI research, venture capital availability, and regulatory frameworks, while China leads in manufacturing scale and deployment speed. European markets offer strengths in regulatory sophistication and sustainability focus, creating opportunities for companies that can navigate multiple regulatory regimes.

The timeline for deep tech value creation typically extends beyond software venture expectations. While software companies might achieve significant scale within 3-5 years, deep tech ventures often require 7-10 years to reach commercial viability. This extended timeline demands patient capital and management teams capable of sustained execution through multiple development phases.

The exit strategies for deep tech companies also differ from software ventures. While software companies typically exit through acquisition by technology giants or public offerings, deep tech companies may find strategic buyers among industrial conglomerates, energy companies, pharmaceutical firms, or government agencies. This diversity of potential acquirers can create competitive bidding situations that enhance exit valuations.

The integration of AI with physical systems also creates new categories of intellectual property that may prove more defensible than pure software innovations. Patents covering AI-enabled manufacturing processes, materials discovery algorithms, or biotechnology applications may provide stronger competitive moats than software patents, which face increasing scrutiny and invalidation rates.

The deep tech renaissance represents more than an investment trend—it signals a maturation of artificial intelligence from experimental technology to practical tool for solving complex physical problems. The companies that successfully navigate this transition will likely define the next generation of technology leaders, while those that remain focused solely on software applications may find themselves increasingly marginalized in a physics-enabled future.

The convergence of AI capabilities with physical systems creates unprecedented opportunities for innovation across multiple industries. The organizations that recognize this shift early and develop the capabilities to execute in deep tech markets will be positioned to capture disproportionate value as the technology landscape continues evolving toward physics-enabled solutions.

Scaling Challenges: Culture, Safety, and Organizational Dynamics in AI Companies

The rapid growth of AI companies has created unprecedented organizational challenges that extend far beyond technical development. Calvin French-Owen's candid analysis of his experience at OpenAI provides rare insight into the cultural dynamics of hypergrowth AI companies, while recent incidents at xAI highlight the ongoing tensions between rapid deployment and safety considerations. [12]

French-Owen, a co-founder of Segment who helped build Codex during his time at OpenAI, describes a period of "chaotic hypergrowth" that saw the company expand from roughly 1,000 to 3,000 employees within a single year. [12] This explosive scaling created organizational stress that extends beyond typical startup growing pains. The combination of intense public scrutiny, existential mission pressure, and unprecedented technical challenges creates a unique cultural environment that few organizations have navigated successfully.

The cultural implications of AI company scaling differ fundamentally from traditional technology ventures. While software companies typically scale through incremental feature development and market expansion, AI companies face the dual challenge of advancing fundamental research while simultaneously deploying systems that could reshape entire industries. This duality creates tension between research culture, which values long-term exploration and academic rigor, and product culture, which prioritizes rapid iteration and market feedback.

The talent acquisition challenges facing AI companies compound these cultural pressures. The limited pool of AI researchers with relevant expertise creates intense competition for key personnel, while the interdisciplinary nature of AI development requires teams that span computer science, mathematics, psychology, philosophy, and domain-specific expertise. Managing such diverse teams while maintaining cultural coherence proves increasingly difficult as organizations scale.

The xAI safety incidents referenced in recent reports illustrate the ongoing tension between deployment speed and safety considerations. [13] While specific details remain limited, these incidents highlight the broader challenge facing AI companies: balancing the competitive pressure for rapid deployment against the need for comprehensive safety testing and risk mitigation.

The safety challenge is particularly acute given the potential consequences of AI system failures. Unlike traditional software bugs, which typically affect specific users or applications, AI system failures can have cascading effects across multiple domains. The interconnected nature of modern AI systems means that a failure in one component can propagate through entire networks of dependent applications.
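To make the propagation point concrete, here is a minimal sketch, assuming a hypothetical dependency map in which each AI component lists the services that consume its output. The component names and the `blast_radius` helper are illustrative inventions, not drawn from any cited incident; a breadth-first traversal simply returns every component downstream of a single failure.

```python
from collections import deque

# Hypothetical dependency map (illustrative only): each key lists the
# components that consume its output and would inherit its failures.
DEPENDENTS: dict[str, list[str]] = {
    "embedding_model": ["retrieval_index"],
    "retrieval_index": ["support_chatbot", "search_api"],
    "search_api": ["analytics_dashboard"],
    "support_chatbot": [],
    "analytics_dashboard": [],
}


def blast_radius(failed: str, dependents: dict[str, list[str]]) -> set[str]:
    """Return every component reachable downstream of `failed` via breadth-first search."""
    affected: set[str] = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in affected:  # visit each component once, even with shared dependencies
                affected.add(child)
                queue.append(child)
    return affected


if __name__ == "__main__":
    print(sorted(blast_radius("embedding_model", DEPENDENTS)))
```

In this toy map, a fault in the shared embedding model reaches all four downstream components, while a fault in a leaf such as `support_chatbot` affects nothing else; the gap between those two cases is what makes upstream AI components riskier to change.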

The regulatory environment adds another layer of complexity to AI company operations. Unlike traditional software companies, which often operated in regulatory gray areas until achieving scale, AI companies face increasing scrutiny from government agencies, academic institutions, and civil society organizations. This attention creates pressure for transparency and accountability that can conflict with competitive dynamics and intellectual property protection.

The international dimension of AI development further complicates organizational dynamics. Companies must navigate different regulatory frameworks, cultural expectations, and competitive landscapes across multiple jurisdictions. The geopolitical implications of AI development mean that companies face scrutiny not only from business competitors but also from national security agencies and international policy organizations.

The employee retention challenges in AI companies reflect these broader organizational pressures. The combination of intense work environments, public scrutiny, and ethical concerns about AI development creates unique stress factors that traditional retention strategies may not address effectively. Companies must develop new approaches to employee support that acknowledge the psychological and ethical dimensions of AI development work.

The compensation dynamics in AI companies also create organizational challenges. The limited supply of AI talent has driven compensation levels far above traditional software engineering roles, creating internal equity issues and budget pressures. Companies must balance competitive compensation packages against sustainable business models, while managing the cultural implications of significant pay disparities within their organizations.

The decision-making processes in AI companies face unique challenges due to the uncertainty surrounding AI capabilities and limitations. Traditional product development relies on established user research methods and market feedback mechanisms. AI companies must make decisions about capabilities that may not exist yet, for use cases that haven't been fully explored, with potential consequences that remain poorly understood.

The communication challenges facing AI companies extend beyond typical corporate communications. Companies must explain complex technical concepts to diverse stakeholders including investors, regulators, customers, and the general public. The tendency for AI capabilities to be either overhyped or misunderstood creates ongoing communication challenges that require sophisticated public relations strategies.

The partnership dynamics in AI companies also differ from traditional technology ventures. The computational requirements for AI development create dependencies on cloud infrastructure providers, while the data requirements create complex relationships with content creators, publishers, and data aggregators. These partnerships often involve novel legal and business arrangements that lack established precedents.

The long-term sustainability of current AI company growth models remains uncertain. The combination of massive computational costs, intense talent competition, and regulatory uncertainty creates business model challenges that may require fundamental restructuring as the industry matures. Companies that develop sustainable approaches to these challenges will likely achieve more durable competitive advantages than those focused solely on technical capabilities.

The organizational lessons emerging from AI company scaling experiences will likely influence broader technology industry practices. The unique challenges of AI development—combining research and product development, managing diverse stakeholder expectations, and navigating complex ethical considerations—require new organizational models that may prove applicable to other deep technology ventures.

The companies that successfully navigate these organizational challenges while maintaining technical innovation will likely emerge as the long-term leaders in the AI industry. The ability to scale culture, manage safety considerations, and maintain organizational coherence under intense pressure may prove as important as technical capabilities in determining competitive success.

Synthesis: The Convergence Thesis and Future Implications

The developments analyzed in this issue of The DeepTech Pulse reveal a fundamental transformation in the artificial intelligence and robotics landscape. The convergence of several key trends—morphology-agnostic robotics, practical deployment strategies, hidden technical debt recognition, agent management evolution, deep tech investment, and organizational maturation—suggests we are entering a new phase of AI development characterized by practical implementation rather than theoretical exploration.

The morphology-agnostic robotics movement represents more than a technical preference; it signals a philosophical maturation of the robotics industry. The recognition that form should follow function rather than fiction reflects a shift from anthropocentric assumptions toward engineering optimization. This transition, evidenced by the success of specialized systems like Boston Dynamics' Spot and the Chinese subway delivery robots, suggests that the future of robotics lies in task-specific optimization rather than general-purpose humanoid systems.

The practical deployment of robotic systems in real-world environments—from Shenzhen's subway platforms to North Carolina's autonomous laboratories—demonstrates that the technology has reached sufficient maturity for operational use in structured environments. These deployments share common characteristics: they focus on specific operational contexts, optimize for functional requirements rather than human resemblance, and deliver measurable value improvements over existing processes.

The recognition of hidden technical debt in AI systems represents a crucial maturation of industry understanding. The acknowledgment that AI deployment requires substantial infrastructure investment, ongoing operational overhead, and specialized engineering talent corrects earlier assumptions about plug-and-play AI solutions. This recognition will likely lead to more realistic deployment timelines, appropriate budget allocation, and sustainable implementation strategies.

The emergence of agent management as a professional discipline reflects the evolution of human-AI collaboration models. The transition from operating tools directly to supervising autonomous systems requires new skills, organizational structures, and management approaches. Practitioners today reportedly coordinate around four agents, and software engineers ten to fifteen; if that capacity scales to dozens or hundreds of agents per person, productivity could rise dramatically, but only with new frameworks for quality control, task allocation, and performance measurement.

The deep tech renaissance, characterized by the integration of AI with physical systems, represents a fundamental shift in venture capital allocation and technological development priorities. The recognition that the most significant remaining challenges require physics-enabled solutions rather than pure software applications will likely drive investment toward companies that can effectively combine artificial intelligence with domain-specific expertise.

The organizational challenges facing AI companies, from cultural scaling to safety management, reflect the unusual pressures of building technologies with potentially transformative societal impact. How well a company absorbs these pressures may matter as much to its durability as its model capabilities.

These trends collectively suggest several implications for the future development of AI and robotics:

Specialization Over Generalization: The success of task-specific robotic systems suggests that the future may favor specialized AI applications over general-purpose systems. Companies that focus on solving specific problems exceptionally well may achieve more sustainable competitive positions than those pursuing general artificial intelligence.

Infrastructure Investment Requirements: The recognition of hidden technical debt implies that successful AI deployment requires substantial infrastructure investment. Organizations should budget for comprehensive observability, robust operational frameworks, and specialized engineering talent rather than expecting simple API integration.

Human-AI Collaboration Models: The evolution of agent management suggests that the future of work will involve humans coordinating multiple AI systems rather than being replaced by them. This requires new skills, organizational structures, and performance metrics that organizations should begin developing proactively.

Physics-Enabled Innovation: The deep tech renaissance suggests that the most valuable AI applications will involve integration with physical systems. Companies should consider how AI can enhance their physical operations rather than focusing solely on digital applications.

Organizational Sustainability: The scaling challenges facing AI companies highlight the importance of sustainable organizational practices. Companies should prioritize cultural development, safety frameworks, and stakeholder management alongside technical capabilities.

The convergence of these trends suggests that we are transitioning from the experimental phase of AI development to the implementation phase. The companies, organizations, and individuals that recognize this transition and adapt their strategies accordingly will be best positioned to capture value in the emerging AI-enabled economy.

The next phase of AI development will likely be characterized by practical deployment, infrastructure maturation, and organizational learning rather than breakthrough capabilities development. The winners will be those who can effectively bridge the gap between AI potential and operational reality, creating sustainable value through thoughtful implementation rather than technological novelty.

Looking Ahead: The DeepTech Pulse Continues

As we monitor the pulse of DeepTech-enabled innovation, the rhythm continues to accelerate. The developments covered in this issue represent early indicators of broader transformations that will reshape industries, organizations, and professional practices over the coming years. The convergence of artificial intelligence with physical systems, the maturation of deployment strategies, and the evolution of human-AI collaboration models suggest that we are entering the most practically significant phase of the AI revolution.

The organizations that recognize these trends early and adapt their strategies accordingly will be positioned to capture disproportionate value as the technology landscape continues evolving. The DeepTech Pulse will continue monitoring these developments, providing analysis and insights to help navigate the accelerating convergence of digital intelligence and physical capability.

References

[1] Shakir, D. (2025, July 15). "The Age of Embodied Intelligence: Why The Future of Robotics Is Morphology-Agnostic." Lux Capital. https://www.luxcapital.com/news/the-age-of-embodied-intelligence-why-the-future-of-robotics-is-morphology-agnostic

[2] Shaikh, K. (2025, July 15). "World-first: Penguin-like delivery robots ride trains to courier goods." Interesting Engineering. https://interestingengineering.com/innovation/subway-penguin-style-delivery-robots

[3] Shipman, M. (2025, July 14). "Researchers Hit 'Fast Forward' on Materials Discovery with Self-Driving Labs." NC State News. https://news.ncsu.edu/2025/07/fast-forward-for-self-driving-labs/

[4] Superhuman AI. (2025, July). "Robotics Special: Ex-Waymo engineers emerge with Bedrock Robotics."

[5] Tunguz, T. (2025, July 17). "Hidden Technical Debt in AI." Tomasz Tunguz Newsletter.

[6] Tunguz, T. (2025). "The Sales Strategy Conquering the AI Market." Tomasz Tunguz Newsletter.

[7] METR. (2025). "AI coding tools slow developers by 19%."

[8] Altman, S. (2025). Statement on AI cost reduction: "$10,000 worth of knowledge work a year ago, now only costs $1 or 10 cents."

[9] Tunguz, T. (2025). "The Rise of the Agent Manager." Tomasz Tunguz Newsletter.

[10] DCVC. (2025). "Deep Tech Opportunities Report 003: An American Industrial Renaissance." DCVC.

[11] Gil, E. (2025, July 22). "AI Market Clarity." Elad Gil Substack.

[12] French-Owen, C. (2025). Account of OpenAI's culture and employee experience, describing "chaotic hypergrowth" and growth from 1,000 to 3,000 employees.

[13] Various sources. (2025). Reports on xAI safety breakdowns and researcher backlash, noting multiple documented incidents.

Additional Sources and Context:

- Abolhasani, M., et al. (2025). "Flow-Driven Data Intensification to Accelerate Autonomous Inorganic Materials Discovery." Nature Chemical Engineering. DOI: 10.1038/s44286-025-00249-z

- Boston Dynamics deployment statistics: 1,500+ Spot robots in field deployment, while Atlas has not yet been commercialized

- MIT 2020 analysis: each robot replaces an average of 3.3 human jobs

- Amazon 2024 data: each pick-pack-ship robot replaces 24 workers

- Shenzhen Metro robot deployment: 41 autonomous delivery robots serving 100+ 7-Eleven stores

- VX Logistics: a Vanke unit partly owned by Shenzhen Metro

- Shenzhen "Embodied Intelligent Robot Action Plan" (March 2025): target for large-scale robot adoption by 2027

- Physical Intelligence: morphology-agnostic AI development company

- Humanoid robotics companies: Tesla (Optimus), Sanctuary AI, Figure, 1X, Apptronik, Boardwalk Robotics, Agility, K-Scale Labs, EngineAI, Noetix, Unitree

- Cost of transport (lower is more efficient): bipedal robots (3.3) vs. humans (0.2) vs. wheels (0.01)

- Agent management capacity: current practitioners (4 agents), software engineers (10-15 agents), with theoretical potential to scale further

- Forward Deployed Engineer model: Minimum $100K contract value to justify $200K annual FDE cost

Written by Bogdan Cristei and Manus AI