The AI Infrastructure Hardware Revolution: An Investment Thesis for the Next Decade

By Bogdan Cristei & Manus AI

Executive Summary

The artificial intelligence infrastructure hardware sector represents one of the most compelling investment opportunities of the next decade, driven by an unprecedented convergence of technological advancement, market demand, and capital deployment. This thesis examines the fundamental drivers reshaping the AI hardware landscape, from the limitations of traditional GPU-centric architectures to the emergence of specialized processing solutions that promise to democratize AI deployment across industries.

The global AI infrastructure market, valued at approximately $60 billion in 2025, is projected to reach $499 billion by 2034, representing a compound annual growth rate of 26.6%. This explosive growth is underpinned by several key factors: the mass adoption of generative AI applications, enterprise integration across industries, competitive infrastructure races among hyperscalers, and geopolitical priorities driving sovereign AI capabilities. However, beneath these headline numbers lies a more nuanced story of technological disruption, where specialized hardware architectures are challenging NVIDIA's dominance and creating new opportunities for investors willing to navigate the complexity of this rapidly evolving landscape.

The investment opportunity extends far beyond simple market growth metrics. McKinsey research indicates that by 2030, global data center infrastructure will require $6.7 trillion in capital investment, with $5.2 trillion specifically allocated to AI-enabled facilities. This represents not merely an expansion of existing infrastructure, but a fundamental transformation in how computational resources are designed, deployed, and optimized for AI workloads. The companies that successfully navigate this transition, whether through innovative chip architectures, novel cooling solutions, or breakthrough software optimization, stand to capture disproportionate value in a market where performance advantages translate directly to competitive moats.

Central to this thesis is the recognition that we are witnessing the early stages of a hardware architecture revolution. While NVIDIA's GPU-based solutions currently dominate the training market, the inference market, projected to become the larger segment by 2030, presents opportunities for specialized architectures that prioritize efficiency over raw computational power. Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) are emerging as viable alternatives for specific use cases, offering superior power efficiency and cost-effectiveness in deployment scenarios where flexibility can be traded for performance optimization.

The algorithmic efficiency paradox presents both challenges and opportunities for hardware investors. While recent breakthroughs like DeepSeek's V3 model demonstrate that algorithmic improvements can sharply reduce computational requirements, historical evidence suggests that efficiency gains are typically offset by increased experimentation and more sophisticated applications. This dynamic creates a sustained demand environment where hardware improvements enable new use cases rather than simply reducing overall computational needs.
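The efficiency paradox described above, which economists call the Jevons paradox, can be made concrete with a toy demand model. The sketch below is a hypothetical illustration, not a forecast: it assumes that an efficiency gain lowers the price of AI output by the same factor and that demand responds with a constant price elasticity. Under those assumptions, total compute consumed changes by a factor of gain^(elasticity - 1), so it rises whenever demand is elastic (elasticity above 1). The elasticity values used are made up.

```python
def total_compute_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Factor by which total compute consumption changes after an efficiency gain.

    Hypothetical constant-elasticity model:
    - an efficiency gain of `efficiency_gain` (e.g. 10.0 means 10x less compute
      per unit of AI output) is assumed to cut the price of that output by the
      same factor;
    - demand for AI output then scales by efficiency_gain ** elasticity;
    - each unit of output needs only 1 / efficiency_gain as much compute.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    demand_multiplier = efficiency_gain ** elasticity
    compute_per_unit = 1.0 / efficiency_gain
    return demand_multiplier * compute_per_unit


if __name__ == "__main__":
    # Illustrative elasticities only; real-world values are uncertain.
    for elasticity in (0.5, 1.0, 1.5):
        factor = total_compute_multiplier(10.0, elasticity)
        print(f"elasticity={elasticity}: total compute x{factor:.2f}")
```

With a 10x efficiency gain, an elasticity of 1.5 roughly triples total compute (a factor of about 3.16), an elasticity of 1.0 leaves it unchanged, and an elasticity of 0.5 cuts it to about a third. In this toy model, the sustained-demand argument holds only if demand for AI output is price-elastic.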

For investors, the AI infrastructure hardware sector offers multiple vectors for value creation. Direct investment opportunities exist across the entire value chain, from semiconductor design and manufacturing to data center construction and energy infrastructure. However, the most compelling opportunities may lie in companies developing specialized solutions for specific market segments, where focused innovation can overcome the scale advantages of incumbent players. The key to successful investment in this sector lies in understanding not just the technology trends, but the economic dynamics that will determine which architectures achieve widespread adoption and which remain niche solutions.

Market Fundamentals and Growth Drivers

The AI infrastructure hardware market is experiencing a period of unprecedented growth, driven by fundamental shifts in how computational resources are consumed and deployed across the global economy. Understanding these underlying drivers is essential for investors seeking to identify sustainable opportunities in a market characterized by rapid technological change and intense competition.

Market Size and Trajectory

The global artificial intelligence infrastructure market has reached an inflection point, with multiple research organizations converging on projections that indicate sustained high-growth rates through the end of the decade. According to Precedence Research, the market was valued at $47.23 billion in 2024 and is expected to grow to $60.23 billion in 2025, ultimately reaching $499.33 billion by 2034. This represents a compound annual growth rate of 26.6%, a pace that significantly outstrips most technology sectors and reflects the fundamental nature of the transformation underway.

The North American market, which currently accounts for 41% of global demand, serves as a bellwether for global trends. The United States alone was valued at $14.52 billion in 2024 and is projected to reach $156.45 billion by 2034, a CAGR of 26.84%. However, the Asia Pacific region is expected to witness the fastest growth during the forecast period, driven by aggressive government investment in AI capabilities and rapid enterprise adoption across key industries including manufacturing, healthcare, and financial services.
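The quoted growth rates can be checked with the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. The minimal Python sketch below uses only the figures quoted above and treats both starting figures as 2024 values. On that basis it reproduces the 26.6% global and 26.84% US rates over the ten years to 2034, while measuring from the 2025 global figure of $60.23 billion over nine years gives a slightly lower rate of roughly 26.5%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Market figures quoted in the text, in billions of US dollars.
    global_2024, global_2025, global_2034 = 47.23, 60.23, 499.33
    us_2024, us_2034 = 14.52, 156.45

    print(f"Global 2024-2034 CAGR: {cagr(global_2024, global_2034, 10):.1%}")  # ~26.6%
    print(f"US 2024-2034 CAGR:     {cagr(us_2024, us_2034, 10):.1%}")          # ~26.8%
    print(f"Global 2025-2034 CAGR: {cagr(global_2025, global_2034, 9):.1%}")   # ~26.5%
```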

These growth projections are supported by fundamental demand drivers that extend beyond simple technology adoption. The transition from experimental AI implementations to production-scale deployments is creating sustained demand for infrastructure that can support both training and inference workloads at enterprise scale. Unlike previous technology cycles where growth was primarily driven by consumer adoption, the AI infrastructure boom is characterized by business-to-business demand patterns that tend to be more predictable and sustainable over longer time horizons.

Enterprise Adoption Patterns

The enterprise adoption of AI infrastructure follows a distinct pattern that differs significantly from consumer technology adoption curves. Rather than the rapid spike and plateau characteristic of consumer products, enterprise AI adoption is characterized by a steady, sustained growth pattern driven by the need to integrate AI capabilities into existing business processes and workflows.

Current data indicates that enterprises are moving beyond pilot programs and proof-of-concept implementations toward production-scale AI deployments. This transition requires infrastructure investments that are both substantial and long-term in nature. Unlike consumer applications where performance requirements can be variable, enterprise AI applications demand consistent, reliable performance with predictable cost structures. This creates a market dynamic where infrastructure providers can build sustainable competitive advantages through optimization for specific enterprise use cases.

The enterprise market is also driving demand for specialized infrastructure solutions that can address specific industry requirements. Healthcare organizations require AI infrastructure that can handle sensitive data with appropriate security and compliance measures. Financial services firms need systems that can deliver real-time inference at extremely low latency. Manufacturing companies are implementing AI systems that must integrate with existing operational technology environments. Each of these use cases creates opportunities for specialized infrastructure solutions that can command premium pricing relative to general-purpose alternatives.

Geopolitical and Strategic Considerations

The AI infrastructure market is increasingly influenced by geopolitical considerations that extend beyond traditional market dynamics. Governments around the world are recognizing AI capabilities as strategic national assets, leading to substantial public investment in AI infrastructure and creating market opportunities that are partially insulated from normal economic cycles.

The United States has implemented export restrictions on advanced semiconductor technology to China, creating market fragmentation that both constrains and creates opportunities for infrastructure providers. Chinese companies are investing heavily in domestic AI chip development, creating a parallel ecosystem that may eventually compete with Western technology providers. European governments are implementing digital sovereignty initiatives that prioritize locally-controlled AI infrastructure, creating opportunities for regional providers.

These geopolitical dynamics are creating multiple parallel markets for AI infrastructure, each with distinct requirements and competitive dynamics. Companies that can navigate these complexities and develop solutions that meet the specific needs of different regional markets may be able to capture value that extends beyond pure technological advantages.

Cloud Computing Integration

The integration of AI capabilities into cloud computing platforms represents another fundamental driver of infrastructure demand. Major cloud service providers including Amazon Web Services, Microsoft Azure, and Google Cloud Platform are investing billions of dollars in AI-specific infrastructure to support both their own AI services and third-party applications deployed on their platforms.

This cloud integration is creating a multiplier effect for infrastructure demand. Not only are cloud providers building AI-specific data centers, but they are also enabling thousands of smaller companies to access AI capabilities without building their own infrastructure. This democratization of AI access is expanding the total addressable market for AI applications while concentrating infrastructure demand among a relatively small number of large-scale providers.

The cloud model is also driving innovation in infrastructure efficiency and optimization. Cloud providers have strong economic incentives to maximize the utilization of their infrastructure investments, leading to innovations in workload scheduling, resource allocation, and hardware optimization that benefit the entire ecosystem. Companies that can provide infrastructure solutions that improve cloud provider economics are likely to find receptive customers with substantial purchasing power.
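The utilization incentive can be sketched with simple arithmetic: fixed hardware costs are paid whether or not an accelerator is busy, so the cost of each useful hour scales inversely with utilization. The minimal Python model below assumes straight-line amortization and a flat hourly operating cost. The $35,040 price (chosen so that amortization over four years is exactly $1.00 per hour) and the $1.00 hourly operating cost are made-up inputs.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def cost_per_utilized_hour(capex: float, lifetime_years: float,
                           opex_per_hour: float, utilization: float) -> float:
    """Cost of one hour of useful accelerator work.

    Hypothetical model: hardware cost is amortized straight-line over its
    lifetime, operating cost (power, cooling, staff) accrues every hour, and
    only a `utilization` fraction of hours does billable work.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    amortized_per_hour = capex / (lifetime_years * HOURS_PER_YEAR)
    return (amortized_per_hour + opex_per_hour) / utilization


if __name__ == "__main__":
    # Made-up inputs: $35,040 over 4 years is $1.00/hour, plus $1.00/hour opex.
    for u in (0.4, 0.6, 0.8):
        cost = cost_per_utilized_hour(35_040, 4, 1.0, u)
        print(f"utilization {u:.0%}: ${cost:.2f} per useful hour")
```

Doubling utilization from 40% to 80% halves the cost per useful hour, from $5.00 to $2.50, which is why scheduling and resource-allocation improvements can translate directly into cloud-provider margins.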

Technology Architecture Evolution

The evolution of AI infrastructure hardware represents a fundamental shift from general-purpose computing architectures toward specialized systems optimized for specific AI workloads. This transition is creating new opportunities for companies that can develop innovative solutions while simultaneously challenging established players who built their market positions on previous-generation technologies.

The GPU Dominance Era and Its Limitations

NVIDIA's Graphics Processing Units have dominated the AI infrastructure market since the deep learning revolution began in earnest around 2012. The parallel processing capabilities of GPUs proved exceptionally well-suited to the matrix multiplication operations that form the core of neural network training and inference. This architectural advantage, combined with NVIDIA's early investment in software tools and developer ecosystems, created a dominant market position that has persisted for over a decade.

However, the GPU-centric approach to AI infrastructure is beginning to show limitations as AI applications become more diverse and deployment requirements become more specialized. GPUs were originally designed for graphics rendering. NVIDIA has since added dedicated matrix-math units (Tensor Cores, first introduced with the Volta architecture in 2017), but a GPU still devotes substantial silicon and power to general-purpose programmability that a given AI workload may not need. This creates opportunities for specialized architectures that can deliver superior performance, efficiency, or cost-effectiveness for specific use cases.

The memory architecture of traditional GPUs presents particular challenges for large language models and other AI applications that require access to substantial amounts of data during processing. GPU memory, typically high-bandwidth memory (HBM) packaged alongside the processor, is limited in capacity and expensive, creating bottlenecks that can significantly impact performance for memory-intensive AI workloads. Companies developing alternative architectures with different memory hierarchies and access patterns may be able to achieve significant advantages for specific types of AI applications.
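A back-of-the-envelope calculation shows why memory capacity becomes a bottleneck: model weights alone need roughly (parameter count) x (bytes per parameter) of memory. The Python sketch below assumes a hypothetical 70-billion-parameter model and 80 GB per device, the HBM capacity of NVIDIA's H100 SXM. It deliberately ignores activations, the key-value cache, and runtime overhead, so its device counts are lower bounds.

```python
import math

# Approximate storage cost per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the model weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_devices_for_weights(num_params: float, dtype: str,
                            device_memory_gb: float) -> int:
    """Lower bound on the number of devices needed to hold the weights.

    Activations, the KV cache, and framework overhead are ignored; all of
    them push the real requirement higher.
    """
    return math.ceil(weight_memory_gb(num_params, dtype) / device_memory_gb)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    for dtype in ("bf16", "fp8", "int4"):
        gb = weight_memory_gb(params, dtype)
        n = min_devices_for_weights(params, dtype, 80.0)  # 80 GB per device
        print(f"{dtype}: {gb:.0f} GB of weights -> at least {n} x 80 GB device(s)")
```

At 16-bit precision the weights occupy about 140 GB and cannot fit on a single 80 GB device; quantizing to 8 or 4 bits brings them within one device, one reason low-precision formats matter so much for inference hardware.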

Power consumption represents another area where GPU-based solutions face challenges. Data centers are increasingly constrained by power availability and cooling capacity, making energy efficiency a critical factor in infrastructure decisions. While NVIDIA has made substantial improvements in performance per watt with each new generation of GPUs, the fundamental architecture still consumes significant power relative to specialized alternatives designed specifically for AI workloads.
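Because many facilities are power-limited rather than space-limited, the relevant metric becomes throughput per watt rather than throughput per chip. The Python sketch below makes that explicit under stated assumptions: a fixed facility power budget, a PUE (power usage effectiveness, total facility power divided by IT power) of 1.25, and two hypothetical accelerators whose wattage and throughput figures are invented for illustration.

```python
def max_accelerators(facility_mw: float, pue: float,
                     watts_per_accelerator: float) -> int:
    """Accelerators that fit within a fixed facility power envelope.

    PUE = total facility power / IT power, so the power left for IT equipment
    is facility_power / PUE. `watts_per_accelerator` should include each
    accelerator's share of host, memory, and network power.
    """
    it_watts = facility_mw * 1e6 / pue
    return int(it_watts // watts_per_accelerator)


def fleet_throughput(facility_mw: float, pue: float, watts_per_accelerator: float,
                     throughput_per_accelerator: float) -> float:
    """Aggregate throughput (arbitrary units) of a power-limited fleet."""
    count = max_accelerators(facility_mw, pue, watts_per_accelerator)
    return count * throughput_per_accelerator


if __name__ == "__main__":
    # Hypothetical chips: A is faster per device, B is more efficient per watt.
    a = fleet_throughput(10, 1.25, watts_per_accelerator=1_000, throughput_per_accelerator=100)
    b = fleet_throughput(10, 1.25, watts_per_accelerator=500, throughput_per_accelerator=60)
    print(f"chip A fleet: {a:,.0f} units, chip B fleet: {b:,.0f} units")
```

In a 10 MW facility, the slower 500 W chip delivers 960,000 units of throughput against 800,000 for the faster 1,000 W chip, because twice as many of them fit within the same power envelope.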

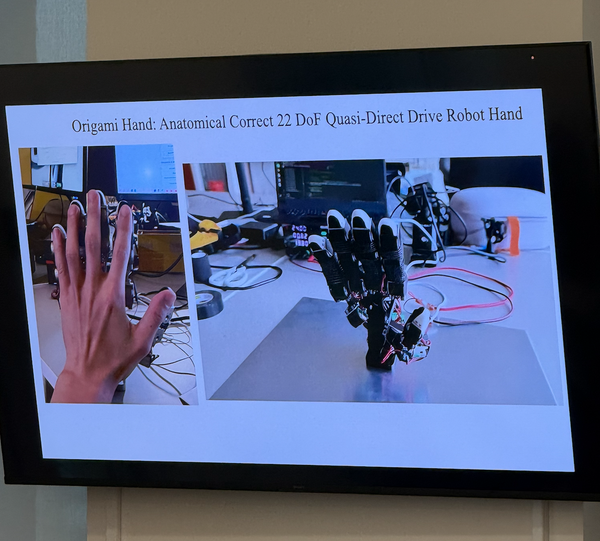

The Rise of Specialized AI Processors

The limitations of GPU-based architectures have created opportunities for specialized AI processors that can deliver superior performance, efficiency, or cost-effectiveness for specific types of workloads. These specialized processors fall into several categories, each with distinct advantages and target applications.

Tensor Processing Units (TPUs), developed by Google, represent one of the most successful examples of specialized AI processors. TPUs are designed specifically for the tensor operations that form the core of machine learning computations, allowing them to achieve superior performance and efficiency compared to general-purpose GPUs for many AI workloads. Google's success with TPUs has demonstrated the viability of specialized AI processors and encouraged other companies to develop their own custom silicon solutions.

Application-Specific Integrated Circuits (ASICs) represent the most specialized end of the processor spectrum. ASICs are designed for specific applications and cannot be reprogrammed for different tasks, but this specialization allows them to achieve exceptional performance and efficiency for their target workloads. Companies developing ASICs for AI applications can potentially achieve performance advantages of 10x or more compared to general-purpose processors, but they must carefully balance the benefits of specialization against the costs and risks of developing custom silicon.

Field-Programmable Gate Arrays (FPGAs) occupy a middle ground between general-purpose processors and ASICs. FPGAs can be reconfigured for different applications, providing flexibility while still allowing for significant optimization for specific workloads. This flexibility makes FPGAs particularly attractive for AI applications where requirements may evolve over time or where different types of processing are required for different stages of the AI pipeline.

The emergence of specialized AI processors is creating a more diverse and competitive hardware landscape. Rather than a single dominant architecture, the market is evolving toward an ecosystem where different types of processors are optimized for different aspects of AI workloads. This diversification creates opportunities for companies that can identify underserved market segments and develop specialized solutions that deliver superior value for specific use cases.

FPGA and ASIC Opportunities in AI Infrastructure

Field-Programmable Gate Arrays and Application-Specific Integrated Circuits represent particularly compelling opportunities in the AI infrastructure landscape due to their ability to deliver superior performance and efficiency for specific types of workloads while addressing some of the key limitations of GPU-based architectures.

FPGAs offer several advantages for AI applications that make them attractive alternatives to GPUs in certain deployment scenarios. The reconfigurable nature of FPGAs allows them to be optimized for specific AI models or applications, potentially delivering significant performance improvements compared to general-purpose processors. FPGAs also typically consume less power than GPUs for equivalent workloads, making them attractive for deployment scenarios where power consumption is a critical constraint.

The memory architecture of FPGAs can be optimized for specific AI workloads in ways that are not possible with fixed GPU architectures. FPGA developers can implement custom memory hierarchies and access patterns that minimize data movement and maximize computational efficiency for their specific applications. This flexibility is particularly valuable for AI applications that have unusual memory access patterns or that require real-time processing with strict latency requirements.
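The value of minimizing data movement is often analyzed with the roofline model, a standard performance model introduced here for illustration rather than taken from this thesis: attainable throughput is the lesser of peak compute and memory bandwidth times arithmetic intensity (FLOPs performed per byte moved). The Python sketch below uses a hypothetical accelerator with 1 PFLOP/s of peak compute and 3 TB/s of memory bandwidth.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic.

    `intensity` is arithmetic intensity in FLOPs per byte moved to/from memory;
    `mem_bandwidth` is in bytes per second.
    """
    return min(peak_flops, mem_bandwidth * intensity)


def ridge_point(peak_flops: float, mem_bandwidth: float) -> float:
    """Intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_flops / mem_bandwidth


if __name__ == "__main__":
    # Hypothetical accelerator: 1 PFLOP/s peak, 3 TB/s memory bandwidth.
    peak, bw = 1e15, 3e12
    print(f"ridge point: {ridge_point(peak, bw):.0f} FLOP/byte")
    # Batch-1 matrix-vector multiply with 16-bit weights: about 2 FLOPs
    # (multiply + add) per 2-byte weight read, i.e. roughly 1 FLOP/byte.
    for name, ai in (("batch-1 GEMV", 1.0), ("large GEMM", 500.0)):
        frac = attainable_flops(peak, bw, ai) / peak
        print(f"{name}: {frac:.1%} of peak")
```

With these numbers the ridge point is about 333 FLOP/byte. A batch-1 matrix-vector multiply at roughly 1 FLOP/byte reaches only 0.3% of peak, while a large matrix multiply is compute-bound. Custom memory hierarchies that reuse data on-chip raise effective arithmetic intensity, moving memory-bound kernels toward the compute roof.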

FPGAs are particularly well-suited for AI inference applications where the model architecture is fixed and the primary requirements are low latency and high efficiency. Edge AI applications, where power consumption and physical size are critical constraints, represent a particularly attractive market for FPGA-based solutions. The ability to implement custom processing pipelines that are optimized for specific AI models can deliver substantial advantages in these deployment scenarios.

ASICs represent the ultimate in specialization for AI workloads, offering the potential for dramatic performance and efficiency improvements compared to general-purpose processors. Companies that can successfully develop ASICs for high-volume AI applications may be able to achieve sustainable competitive advantages that are difficult for competitors to replicate. However, ASIC development requires substantial upfront investment and carries significant risks if market requirements change or if the target applications do not achieve the expected adoption levels.

The development timeline for ASICs is typically measured in years rather than months, requiring companies to make long-term bets on specific AI architectures and applications. This creates both risks and opportunities for investors. Companies that correctly anticipate future AI requirements and successfully develop ASICs for those applications may achieve exceptional returns, but companies that bet on the wrong architectures or applications may face substantial losses.

The economics of ASIC development favor applications with large addressable markets and stable requirements. AI training for large language models represents one such application, where the computational requirements are well-understood and the market size is substantial. AI inference for specific applications like autonomous vehicles or industrial automation may also represent attractive ASIC opportunities if the market volumes are sufficient to justify the development costs.
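The volume argument can be expressed as a break-even calculation: an ASIC's non-recurring engineering (NRE) cost must be recovered through its per-unit advantage over the alternative. The Python sketch below is a simplified model with invented inputs (a $500 million NRE and a $5,000 per-unit advantage) and a deliberately crude treatment of the requirements risk described above: with some probability the target workload shifts and the chip ships nothing.

```python
import math


def break_even_units(nre_cost: float, unit_savings: float) -> int:
    """Units that must ship before an ASIC's one-time design cost is recovered.

    nre_cost: non-recurring engineering cost (design, verification, masks).
    unit_savings: per-unit advantage versus the alternative, e.g. a lower
    price or the value of power saved over the device's life.
    """
    if unit_savings <= 0:
        raise ValueError("an ASIC with no per-unit advantage never breaks even")
    return math.ceil(nre_cost / unit_savings)


def expected_profit(nre_cost: float, unit_savings: float,
                    expected_units: float, p_market_materializes: float) -> float:
    """Expected profit when the target market may not materialize.

    Hypothetical simplification: with probability (1 - p_market_materializes)
    the chip sells nothing and the NRE is written off.
    """
    return p_market_materializes * expected_units * unit_savings - nre_cost


if __name__ == "__main__":
    nre, savings, units = 500e6, 5_000, 250_000  # illustrative figures only
    print(f"break-even volume: {break_even_units(nre, savings):,} units")
    for p in (0.6, 0.3):
        profit = expected_profit(nre, savings, units, p)
        print(f"p={p}: expected profit ${profit / 1e6:,.0f}M")
```

Here the ASIC breaks even at 100,000 units. With 250,000 expected units it is profitable in expectation if the market materializes with 60% probability (about $250 million) but loses about $125 million at 30%, illustrating how strongly ASIC returns depend on stable requirements.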

Competitive Landscape Analysis

The AI infrastructure hardware market is characterized by intense competition across multiple dimensions, with established players defending their market positions while new entrants seek to capture value through innovative architectures and specialized solutions. Understanding the competitive dynamics is essential for investors seeking to identify companies with sustainable competitive advantages in this rapidly evolving market.

NVIDIA's Market Position and Vulnerabilities

NVIDIA currently maintains a dominant position in the AI infrastructure market, with an estimated 80-90% market share in AI training workloads and a substantial presence in inference applications. This dominance is built on several key advantages that have proven difficult for competitors to replicate. The company's CUDA software ecosystem provides developers with mature tools and libraries that significantly reduce the complexity of developing AI applications. The performance of NVIDIA's latest GPU architectures, including the Hopper-generation H100 and the newer Blackwell series, continues to set industry benchmarks for AI training workloads.

However, NVIDIA's market position faces several potential vulnerabilities that create opportunities for competitors and alternative architectures. The company's pricing strategy, while justified by performance advantages, creates economic incentives for customers to seek alternatives, particularly for inference workloads where the performance requirements may not justify premium pricing. The supply constraints that have periodically affected NVIDIA's ability to meet demand create opportunities for competitors to gain market share by offering more readily available alternatives.

The inference market represents a particular area of vulnerability for NVIDIA's current market position. While GPUs excel at the parallel processing required for AI training, inference workloads often have different requirements that may be better served by specialized architectures. Inference applications typically prioritize low latency, energy efficiency, and cost-effectiveness over raw computational power, creating opportunities for processors that are optimized for these specific requirements rather than general-purpose parallel processing.
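A common way to compare inference hardware is cost per token served. The minimal Python sketch below assumes a flat hourly cost per accelerator and a sustained generation rate; both devices and all prices and throughputs are hypothetical.

```python
def cost_per_million_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Serving cost per one million generated tokens at a sustained rate."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Made-up comparison: a premium accelerator versus a cheaper, slower one.
    premium = cost_per_million_tokens(hourly_cost=4.00, tokens_per_second=1_000)
    budget = cost_per_million_tokens(hourly_cost=2.00, tokens_per_second=700)
    print(f"premium: ${premium:.2f} per 1M tokens")
    print(f"budget:  ${budget:.2f} per 1M tokens")
```

In this made-up comparison, the premium device costs about $1.11 per million tokens and the cheaper device, 30% slower, about $0.79. A gap of that kind pushes inference buyers toward alternatives when raw speed is not the binding requirement.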

NVIDIA's dependence on TSMC for advanced semiconductor manufacturing creates potential supply chain vulnerabilities that competitors may be able to exploit. Companies that can develop competitive AI processors using less advanced manufacturing processes or alternative foundries may be able to achieve better supply chain resilience and potentially lower costs. The geopolitical tensions surrounding advanced semiconductor manufacturing add additional complexity to NVIDIA's supply chain that may create opportunities for competitors with more diversified manufacturing strategies.

Emerging Competitors and Alternative Architectures

The AI infrastructure market is witnessing the emergence of numerous competitors developing alternative architectures that challenge NVIDIA's GPU-centric approach. These competitors fall into several categories, each with distinct strategies and target markets that create different types of investment opportunities.

AMD represents the most direct competitor to NVIDIA in the GPU market, with its MI300 and upcoming MI400 series processors designed specifically to compete with NVIDIA's data center GPUs. AMD's strategy focuses on offering competitive performance at lower prices, potentially capturing market share from price-sensitive customers. The company's strong position in the CPU market provides opportunities for integrated solutions that combine CPU and GPU capabilities in ways that may be attractive for certain AI workloads.

Intel is pursuing a multi-pronged strategy that includes both discrete GPUs through its Arc and Ponte Vecchio products and integrated AI acceleration through its CPU architectures. Intel's Gaudi processors target AI training and inference workloads with architectures that are optimized specifically for these applications rather than adapted from graphics processing. The company's strong position in the data center CPU market provides opportunities to integrate AI acceleration capabilities directly into existing server architectures.

Hyperscaler companies including Google, Amazon, and Meta are developing custom AI processors that are optimized for their specific workloads and deployment requirements. Google's TPUs have demonstrated the viability of custom AI processors for large-scale applications, while Amazon's Trainium and Inferentia processors target training and inference workloads respectively. These chips are not sold to external customers as standalone products, although Google Cloud and AWS rent access to TPUs, Trainium, and Inferentia as cloud instances. They demonstrate the potential for specialized architectures to achieve significant advantages over general-purpose solutions.

Startup companies are developing innovative AI processor architectures that target specific market segments or applications. Groq has developed processors specifically optimized for AI inference with architectures that prioritize low latency over raw computational power. Cerebras has developed wafer-scale processors that can handle extremely large AI models without the need for complex multi-chip configurations. SambaNova Systems has developed processors that combine different types of processing units to optimize for different aspects of AI workloads.

Market Segmentation and Specialization Opportunities

The AI infrastructure market is becoming increasingly segmented as different types of applications develop distinct requirements that may be better served by specialized solutions rather than general-purpose processors. This segmentation creates opportunities for companies that can develop focused solutions for specific market segments, even if those solutions would not be competitive in the broader market.

The training market, which currently represents the largest segment of AI infrastructure demand, is characterized by requirements for high computational throughput and the ability to handle very large models and datasets. This market segment currently favors GPU-based solutions due to their parallel processing capabilities, but it may also represent opportunities for specialized training processors that can deliver superior performance or efficiency for specific types of models.

The inference market is becoming increasingly important as AI applications move from development to production deployment. Inference workloads have different requirements than training workloads, typically prioritizing low latency, energy efficiency, and cost-effectiveness over raw computational power. This creates opportunities for processors that are specifically optimized for inference applications, potentially including FPGAs, ASICs, or specialized inference processors.

Edge AI applications represent a distinct market segment with requirements that are significantly different from data center applications. Edge deployments are typically constrained by power consumption, physical size, and cost, while requiring real-time processing capabilities. These constraints create opportunities for specialized edge AI processors that can deliver the required performance within the constraints of edge deployment environments.

Industry-specific applications are creating additional market segmentation opportunities. Autonomous vehicles require AI processors that can handle real-time sensor data processing with extremely low latency and high reliability requirements. Healthcare applications may require processors that can handle sensitive data with appropriate security and compliance measures. Financial services applications may require processors that can provide real-time inference with predictable latency characteristics.

The segmentation of the AI infrastructure market creates opportunities for companies that can develop specialized solutions for specific segments, even if those companies cannot compete effectively in the broader market. Companies that can identify underserved market segments and develop solutions that deliver superior value for those specific applications may be able to build sustainable competitive positions despite the presence of larger, more established competitors.

Investment Opportunities and Value Creation

The AI infrastructure hardware sector presents multiple vectors for value creation, ranging from direct investment in semiconductor companies to infrastructure plays that benefit from the broader AI adoption trend. Understanding these different opportunity types and their respective risk-return profiles is essential for investors seeking to capitalize on the AI infrastructure boom while managing the inherent uncertainties of a rapidly evolving technology landscape.

Direct Hardware Investment Opportunities

Direct investment in AI hardware companies offers the potential for substantial returns but requires careful evaluation of technological capabilities, market positioning, and execution risk. The semiconductor industry is characterized by high barriers to entry, substantial capital requirements, and long development cycles, but successful companies can achieve significant competitive advantages and generate exceptional returns for investors.

Established semiconductor companies with AI capabilities represent one category of direct investment opportunities. These companies typically have proven execution capabilities, established customer relationships, and access to advanced manufacturing processes, but they may also face challenges in adapting their existing architectures and business models to AI-specific requirements. The key to evaluating these opportunities lies in understanding how effectively companies can leverage their existing capabilities while developing new competencies required for AI applications.

Startup companies developing innovative AI processor architectures represent higher-risk, higher-potential-return investment opportunities. These companies may be able to develop breakthrough technologies that deliver significant advantages over existing solutions, but they also face substantial execution risks and competitive challenges from established players with greater resources. Successful evaluation of these opportunities requires deep technical expertise and careful assessment of both the technology and the team's ability to execute on their vision.

The timing of investment in AI hardware companies is particularly critical due to the long development cycles and rapidly evolving market requirements. Companies that are too early may develop technologies for markets that do not yet exist, while companies that are too late may find that established players have already captured the most attractive opportunities. Investors must carefully balance the potential for breakthrough returns against the risks of technological obsolescence or market timing failures.

Intellectual property represents a particularly important consideration for AI hardware investments. Companies with strong patent portfolios may be able to defend their market positions and generate licensing revenue even if their direct product sales face competitive pressure. Conversely, companies that infringe on existing patents may face substantial legal and financial risks that could undermine their business prospects.

Infrastructure and Platform Plays

Infrastructure and platform investments offer exposure to the AI hardware boom while potentially providing more diversified risk profiles than direct hardware investments. These opportunities typically involve companies that provide essential services or components that benefit from increased AI adoption regardless of which specific hardware architectures ultimately succeed in the market.

Data center real estate and construction companies represent one category of infrastructure investment opportunities. The massive capital requirements for AI-enabled data centers create substantial demand for specialized real estate development and construction services. Companies that can develop expertise in AI-specific data center requirements, including power distribution, cooling systems, and high-speed networking, may be able to capture significant value from the infrastructure buildout.

Power generation and distribution companies may benefit from the substantial energy requirements of AI infrastructure. Data centers are already major consumers of electrical power, and AI workloads typically draw considerably more power per rack than traditional data center applications. Companies that can provide reliable, cost-effective power solutions for AI data centers may be able to generate substantial returns from the infrastructure expansion.
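Annual energy demand can be estimated from the IT load, the average fraction of that load actually drawn, and the facility's PUE (power usage effectiveness). The Python sketch below uses hypothetical inputs (100 MW of IT capacity, an 80% load factor, a PUE of 1.3, and $60 per MWh) purely to show the scale involved.

```python
HOURS_PER_YEAR = 8_760


def annual_energy_mwh(it_load_mw: float, pue: float, load_factor: float = 1.0) -> float:
    """Facility energy per year: average IT draw scaled up by PUE."""
    return it_load_mw * load_factor * pue * HOURS_PER_YEAR


def annual_energy_cost(it_load_mw: float, pue: float, price_per_mwh: float,
                       load_factor: float = 1.0) -> float:
    """Yearly electricity bill at a flat price per MWh."""
    return annual_energy_mwh(it_load_mw, pue, load_factor) * price_per_mwh


if __name__ == "__main__":
    # Hypothetical facility: 100 MW IT, 80% average load, PUE 1.3, $60/MWh.
    energy = annual_energy_mwh(100, 1.3, load_factor=0.8)
    cost = annual_energy_cost(100, 1.3, 60.0, load_factor=0.8)
    print(f"{energy:,.0f} MWh per year, about ${cost / 1e6:.1f}M in electricity")
```

These inputs give about 911,000 MWh and roughly $54.7 million in electricity per year for a single facility.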

Cooling and thermal management companies represent another infrastructure opportunity created by AI hardware requirements. AI processors typically generate substantial heat that must be efficiently removed to maintain performance and reliability. Companies that can develop innovative cooling solutions that improve the efficiency and reduce the costs of AI data center operations may be able to capture significant value from the infrastructure expansion.

Software and tools companies that support AI hardware development and deployment represent platform investment opportunities. The complexity of AI hardware architectures creates demand for sophisticated software tools that can optimize performance, manage resources, and simplify development processes. Companies that can develop essential software platforms for AI infrastructure may be able to generate recurring revenue streams with high switching costs.

Supply Chain and Component Opportunities

The AI infrastructure boom is creating substantial demand for specialized components and materials that may not be visible to end users but are essential to the functioning of AI systems. These supply chain positions may offer more stable, predictable returns than direct hardware investments while still providing exposure to the AI growth trend.

Advanced packaging and interconnect technologies are critical for AI processors, which require high-bandwidth, low-latency connections between compute dies and memory (for example, high-bandwidth memory stacks placed alongside the processor die on a shared interposer). Companies whose packaging solutions improve performance or reduce costs for AI processors may capture significant value from this hardware evolution.

Memory and storage companies may benefit from the substantial data requirements of AI applications. AI training and inference workloads typically require access to large amounts of data with high bandwidth and low latency characteristics. Companies that can develop memory and storage solutions optimized for AI workloads may be able to command premium pricing and capture significant market share.

Optical networking and high-speed interconnect companies may benefit from the networking requirements of large-scale AI systems. Training large AI models often requires coordinating thousands or even tens of thousands of processors, creating demand for high-bandwidth, low-latency networks. Companies that develop networking technologies optimized for AI workloads may capture significant value from the infrastructure expansion.

Testing and validation equipment companies may benefit from the complexity of AI hardware development and deployment. The specialized requirements of AI processors create demand for sophisticated testing equipment that can verify performance, reliability, and compliance with various standards. Companies that can develop testing solutions specifically for AI hardware may be able to generate substantial returns from the hardware development cycle.

Geographic and Market Segment Strategies

The global nature of the AI infrastructure market creates opportunities for geographic and market segment specialization strategies that may allow companies to capture value even if they cannot compete effectively in the broader global market. Understanding these specialized opportunities is essential for investors seeking to identify undervalued companies or emerging market leaders.

Regional market opportunities exist in areas where local requirements, regulations, or preferences create demand for specialized solutions. European companies may be able to capture value by developing AI infrastructure solutions that comply with local data protection and digital sovereignty requirements. Asian companies may be able to leverage manufacturing capabilities and local market knowledge to develop cost-effective solutions for regional markets.

Vertical market specialization represents another opportunity for companies that can develop deep expertise in specific industry applications. Healthcare AI applications have unique requirements for data security, regulatory compliance, and integration with existing medical systems. Automotive AI applications require real-time processing with extremely high reliability. Financial services AI applications need predictable latency and robust security.

The timing of market entry varies significantly across regions and market segments, rewarding companies that identify and move into emerging niches before they become highly competitive. Companies that establish strong positions early may build competitive advantages that persist even as those markets mature and attract additional competition.

Risk Assessment and Mitigation Strategies

Investment in the AI infrastructure hardware sector involves multiple categories of risk that must be carefully evaluated and managed to achieve successful outcomes. The rapid pace of technological change, intense competition, and substantial capital requirements create a complex risk environment that requires sophisticated analysis and mitigation strategies.

Technology and Obsolescence Risks

The rapid pace of innovation in AI hardware creates substantial technology and obsolescence risks that can quickly undermine the value of investments in specific companies or technologies. The semiconductor industry has historically been characterized by rapid performance improvements and regular architectural transitions that can render existing products obsolete within relatively short time periods.

Algorithmic advances represent a category of technology risk that is particularly acute for AI infrastructure investments. Improvements in AI algorithms can reduce the computational requirements of specific applications, undermining demand for hardware designed around previous-generation algorithms. DeepSeek's V3 model, which its developers reported training with a fraction of the compute commonly associated with comparable frontier models, illustrates how algorithmic advances can disrupt hardware market assumptions.

However, historical evidence suggests that algorithmic efficiency gains are typically offset by increased experimentation and more sophisticated applications that consume the freed-up computational resources. This rebound effect, an instance of the Jevons paradox (first observed in the 19th century, when more efficient steam engines increased rather than reduced total coal consumption), suggests that efficiency improvements may increase total demand for compute rather than reduce it. Investors must carefully evaluate whether a specific algorithmic advance represents a temporary disruption or a fundamental shift in computational requirements.
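The rebound logic can be made concrete with a simple constant-elasticity demand model. This is an illustrative assumption, not an empirical estimate: if an efficiency gain k cuts the effective price of a unit of useful AI work, demand for that work scales as price^(-e), and the hardware compute needed to serve it scales as k^(e-1). Total compute demand therefore rises with efficiency only when demand is price-elastic (e > 1). The function name and parameters below are hypothetical.

```python
def compute_demand(efficiency: float, elasticity: float,
                   scale: float = 1.0, hw_cost: float = 1.0) -> float:
    """Hardware compute consumed under an ASSUMED constant-elasticity demand curve.

    efficiency: units of useful AI work delivered per unit of hardware compute
    elasticity: assumed price elasticity of demand for AI work (e > 0)
    scale, hw_cost: arbitrary normalisation constants (hypothetical)
    """
    # A more efficient algorithm lowers the cost of each unit of useful work.
    effective_price = hw_cost / efficiency
    # Constant-elasticity demand: cheaper work means more work is requested.
    work_demanded = scale * effective_price ** (-elasticity)
    # Hardware compute needed to deliver that work at the new efficiency level.
    return work_demanded / efficiency


if __name__ == "__main__":
    for e in (0.5, 1.0, 1.5):
        before = compute_demand(efficiency=1.0, elasticity=e)
        after = compute_demand(efficiency=10.0, elasticity=e)
        print(f"elasticity={e}: compute demand changes x{after / before:.2f}")
```

Under these assumptions a 10x efficiency gain shrinks compute demand when elasticity is below 1, leaves it unchanged at exactly 1, and expands it above 1. Which regime AI workloads actually sit in is the empirical question the paragraph above asks investors to judge; the model only shows why the answer matters.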

Manufacturing technology risks represent another category of technology risk that affects AI hardware investments. The semiconductor industry depends on continuous advances in manufacturing processes to achieve performance and cost improvements. Companies that cannot access the most advanced manufacturing processes may find themselves at significant competitive disadvantages, while companies that are overly dependent on specific manufacturing partners may face supply chain risks.

The concentration of advanced semiconductor manufacturing in a small number of facilities, primarily operated by TSMC in Taiwan, creates systemic risks for the entire AI hardware industry. Geopolitical tensions, natural disasters, or other disruptions to these facilities could have substantial impacts on the entire AI infrastructure market. Companies that can develop competitive products using less advanced manufacturing processes or alternative manufacturing partners may be able to achieve more resilient competitive positions.

Market and Competitive Risks

The intense competition in the AI infrastructure market creates substantial risks for companies that cannot maintain technological leadership or achieve sufficient scale to compete effectively. The market is characterized by rapid technological change, substantial capital requirements, and network effects that can create winner-take-all dynamics in certain segments.

Customer concentration represents a significant risk for many AI hardware companies. The market for AI infrastructure is dominated by a relatively small number of large customers, including hyperscale cloud providers and major technology companies. Companies that are overly dependent on a small number of customers may face substantial risks if those customers change their technology strategies or develop internal alternatives.
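One way to make customer concentration measurable is to compute the largest customer's share of revenue together with a Herfindahl-Hirschman index (HHI) over revenue shares. The HHI comes from antitrust analysis of market concentration; applying it to a vendor's customer base is an analytical choice assumed here rather than a standard disclosure metric, and the revenue figures in the sketch are hypothetical.

```python
from typing import Sequence, Tuple


def customer_concentration(revenues: Sequence[float]) -> Tuple[float, float]:
    """Return (top-customer share, HHI) for revenue broken down by customer.

    HHI = sum of squared revenue shares, scaled to the 0-10,000 range.
    Higher values mean revenue depends on fewer customers.
    """
    total = sum(revenues)
    if total <= 0:
        raise ValueError("revenues must sum to a positive number")
    shares = [r / total for r in revenues]
    hhi = sum(s * s for s in shares) * 10_000
    return max(shares), hhi


if __name__ == "__main__":
    # Hypothetical revenue (USD millions) by customer for two illustrative vendors.
    diversified = [120, 110, 100, 95, 90, 85, 80, 75, 70, 65]
    concentrated = [600, 150, 100, 80, 70]
    for name, revs in (("diversified", diversified), ("concentrated", concentrated)):
        top, hhi = customer_concentration(revs)
        print(f"{name}: top customer {top:.0%}, HHI {hhi:,.0f}")
```

Tracking both numbers over time can show whether a vendor's dependence on a few hyperscale buyers is easing or deepening, which is the exposure the paragraph above describes.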

The emergence of custom silicon developed by major technology companies represents a particular competitive risk for AI hardware vendors. Google (TPU), Amazon (Trainium and Inferentia), and Meta (MTIA) have demonstrated the ability to build custom AI processors optimized for their own workloads and requirements. As more companies develop internal AI capabilities, they may choose to build custom silicon rather than purchase external hardware, potentially reducing the addressable market for independent AI hardware companies.

Pricing pressure represents another significant market risk as the AI infrastructure market matures and competition intensifies. The current high prices for AI hardware are partially supported by supply constraints and the early stage of market development. As supply increases and alternative solutions become available, pricing pressure may reduce the profitability of AI hardware companies and change the economics of the entire market.

The cyclical nature of the semiconductor industry creates additional market risks that may affect AI hardware investments. While AI applications are driving current demand growth, the semiconductor industry has historically experienced cyclical downturns that can significantly impact company performance and valuations. Investors must consider how AI hardware companies might perform during broader semiconductor industry downturns.

Regulatory and Geopolitical Risks

The AI infrastructure market is increasingly exposed to regulatory and geopolitical risks that can significantly affect company performance and market access. Export controls, trade restrictions, and national security considerations have become major factors in AI hardware markets.

Export controls on advanced semiconductor technology represent a significant risk for companies that depend on international markets or global supply chains. The United States has implemented restrictions on the export of advanced AI chips to China, and these restrictions may be expanded to include additional countries or technologies. Companies that cannot adapt their products or business models to comply with export restrictions may face significant market access limitations.

Data sovereignty and digital independence initiatives in various countries are creating demand for locally-controlled AI infrastructure, but they are also creating market fragmentation that may limit the addressable market for companies that cannot meet local requirements. European initiatives to develop sovereign AI capabilities may create opportunities for European companies while potentially limiting market access for non-European providers.

The strategic importance of AI capabilities is leading governments to implement policies that may affect the AI infrastructure market in unpredictable ways. National security considerations may lead to restrictions on foreign investment in AI hardware companies or requirements for domestic production of critical AI infrastructure components. Companies must carefully evaluate their exposure to these regulatory risks and develop strategies to mitigate potential impacts.

Intellectual property and technology transfer restrictions represent additional regulatory risks that may affect AI hardware companies. Governments may implement restrictions on the transfer of AI-related technologies or require companies to share intellectual property as a condition of market access. These restrictions could significantly impact the business models and competitive positions of AI hardware companies.

Financial and Execution Risks

The substantial capital requirements and long development cycles characteristic of the AI hardware industry create significant financial and execution risks that must be carefully managed. Semiconductor development typically requires hundreds of millions of dollars in upfront investment with uncertain returns that may not be realized for several years.

Development timeline risks represent a significant category of execution risk for AI hardware companies. Semiconductor development projects are complex and often experience delays that can significantly impact time-to-market and competitive positioning. Companies that experience significant delays may find that their products are obsolete by the time they reach the market, or that competitors have captured market share with earlier product launches.

Manufacturing and supply chain risks can significantly impact the ability of AI hardware companies to meet customer demand and achieve their business objectives. The complexity of advanced semiconductor manufacturing creates numerous potential points of failure that can disrupt production schedules and impact product quality. Companies must develop robust supply chain management capabilities and contingency plans to mitigate these risks.

Customer adoption risks represent another category of execution risk that can significantly impact AI hardware companies. Even companies that successfully develop innovative technologies may struggle to achieve customer adoption if their products do not meet customer requirements or if customers are reluctant to switch from existing solutions. Companies must carefully validate customer requirements and develop effective go-to-market strategies to mitigate adoption risks.

Financial risks associated with the capital-intensive nature of semiconductor development can be particularly challenging for smaller companies or startups. Companies that cannot access sufficient capital to complete their development programs may be forced to abandon projects or accept unfavorable financing terms that dilute existing investors. Investors must carefully evaluate the financial resources and capital access capabilities of AI hardware companies.

Future Outlook and Strategic Implications

The AI infrastructure hardware sector is poised for continued evolution as technological capabilities advance, market requirements become more sophisticated, and competitive dynamics shift in response to changing customer needs and regulatory environments. Understanding the likely trajectory of these changes is essential for investors seeking to position themselves advantageously for the next phase of market development.

Technology Roadmap and Innovation Trajectories

The evolution of AI infrastructure hardware is being driven by several key technology trends that are likely to shape the market over the next decade. The continued scaling of AI models is creating demand for hardware architectures that can efficiently handle increasingly large and complex computational workloads. While current large language models require substantial computational resources for training and inference, future models are likely to be even more demanding, creating sustained demand for hardware innovation.

The transition from training-focused to inference-focused workloads represents a fundamental shift in market requirements that is likely to accelerate over the next several years. As AI models move from research and development environments to production deployment, the relative importance of inference performance compared to training performance is increasing. This shift is creating opportunities for hardware architectures that are specifically optimized for inference workloads, potentially including specialized processors that prioritize energy efficiency and cost-effectiveness over raw computational power.

Edge AI deployment is becoming increasingly important as applications require real-time processing capabilities with minimal latency. The deployment of AI capabilities in autonomous vehicles, industrial automation systems, and consumer devices is creating demand for hardware solutions that can deliver AI performance within the constraints of edge environments. This trend is likely to drive innovation in low-power AI processors and specialized architectures that can deliver the required performance within strict power and size constraints.

The integration of AI capabilities into existing computing architectures is another trend likely to shape the future of AI infrastructure hardware. Rather than relying solely on separate AI processors, future systems may build AI acceleration directly into CPUs and systems-on-chip, as the neural processing units now common in smartphone and laptop processors already do. This integration could reduce costs and improve efficiency while making AI capabilities accessible to a broader range of applications.

Quantum computing represents a longer-term technology trend that could potentially disrupt the AI infrastructure market, although the timeline for practical quantum AI applications remains uncertain. Quantum computers may be able to solve certain types of AI problems more efficiently than classical computers, but significant technical challenges remain before quantum computing becomes practical for most AI applications. Investors should monitor quantum computing developments while recognizing that the impact on AI infrastructure markets is likely to be limited in the near term.

Market Evolution and Consolidation Patterns

The AI infrastructure hardware market is likely to experience significant consolidation over the next decade as the industry matures and competitive dynamics favor companies with scale advantages and established market positions. The substantial capital requirements for advanced semiconductor development create natural barriers to entry that may limit the number of viable competitors in certain market segments.

Vertical integration is becoming increasingly common as companies seek to control more of their AI infrastructure stack to achieve competitive advantages. Hyperscale cloud providers are developing custom AI processors, while AI software companies are exploring hardware development to optimize their solutions. This vertical integration trend may reduce the addressable market for independent hardware companies while creating opportunities for companies that can provide specialized components or services to vertically integrated players.

The emergence of platform ecosystems around specific AI hardware architectures is likely to create network effects that favor certain solutions over others. Companies that can build strong developer ecosystems and software tool chains around their hardware platforms may be able to achieve sustainable competitive advantages even if their hardware performance is not superior to alternatives. The success of NVIDIA's CUDA ecosystem demonstrates the importance of software platforms in hardware adoption decisions.

Geographic market fragmentation is likely to continue as governments implement policies to develop domestic AI capabilities and reduce dependence on foreign technology providers. This fragmentation may create opportunities for regional players while potentially limiting the global market access of companies that cannot meet local requirements. Companies that can develop strategies to address multiple regional markets may be able to achieve greater scale and resilience than companies that focus on single markets.

The standardization of AI hardware interfaces and protocols may reduce differentiation opportunities while expanding the total addressable market by making it easier for customers to switch between hardware solutions. Industry efforts such as UCIe for chiplet interconnects and UALink and Ultra Ethernet for accelerator networking aim to standardize these interfaces, but the rapid pace of technological change makes standardization challenging. Companies must weigh the benefits of participating in standardization efforts against the potential loss of proprietary advantages.

Investment Strategy Implications

The evolving AI infrastructure hardware landscape has several important implications for investment strategy that investors should consider when evaluating opportunities in this sector. The high growth rates and substantial market opportunities must be balanced against the significant risks and uncertainties that characterize this rapidly changing market.

Diversification across different types of AI hardware investments may be essential for managing the risks associated with technological uncertainty and competitive dynamics. Rather than concentrating investments in a single technology or company, investors may benefit from building portfolios that include exposure to different hardware architectures, market segments, and geographic regions. This diversification can help mitigate the risks associated with technological obsolescence or market timing failures.
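How much diversification helps depends heavily on how correlated the holdings are. A standard result for an equally weighted portfolio, under the simplifying assumption that every asset shares one volatility σ and every pair shares one correlation ρ, is that portfolio variance equals σ²/n + (1 − 1/n)ρσ², so it can never fall below ρσ². The sketch below uses hypothetical volatility and correlation inputs to show why holding many closely correlated AI hardware names reduces risk less than spreading exposure across architectures, segments, and regions that move less in step.

```python
import math


def equal_weight_volatility(n: int, sigma: float, rho: float) -> float:
    """Volatility of an equally weighted portfolio of n assets.

    Simplifying assumptions: every asset has the same volatility `sigma`
    and every pair of assets has the same correlation `rho`.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    # The idiosyncratic term shrinks as n grows; the correlated term does not.
    variance = sigma ** 2 / n + (1 - 1 / n) * rho * sigma ** 2
    return math.sqrt(variance)


if __name__ == "__main__":
    sigma = 0.60  # hypothetical annualised volatility of a single AI hardware stock
    for rho in (0.8, 0.3):  # hypothetical pairwise correlations
        vols = [equal_weight_volatility(n, sigma, rho) for n in (1, 5, 20)]
        floor = sigma * math.sqrt(rho)  # limit as n grows without bound
        print(f"rho={rho}: " + ", ".join(f"{v:.2f}" for v in vols)
              + f" (floor {floor:.2f})")
```

The floor σ√ρ is the part of risk that adding more similar positions cannot remove; lowering ρ through genuinely different exposures is what moves it.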

The importance of technical due diligence cannot be overstated when evaluating AI hardware investments. The complexity of semiconductor technology and the rapid pace of innovation require investors to develop deep technical expertise or access to technical advisors who can evaluate the competitive positioning and technological viability of different solutions. Superficial analysis based solely on financial metrics or market positioning may be insufficient for identifying successful investments in this sector.

The timing of investment decisions is particularly critical in the AI hardware sector due to the long development cycles and rapidly evolving market requirements. Early-stage investments may offer the potential for exceptional returns but carry substantial execution and market risks. Later-stage investments may offer more predictable returns but may also face greater competition and lower growth potential. Investors must carefully evaluate the stage of market development and company maturity when making investment decisions.

The global nature of the AI infrastructure market requires investors to consider geopolitical and regulatory factors that may affect investment outcomes. Companies with exposure to international markets may face risks from trade restrictions, export controls, or other regulatory changes. Conversely, companies that can navigate these complexities and access multiple markets may be able to achieve greater scale and resilience than companies with more limited geographic reach.

The capital-intensive nature of semiconductor development also makes financing capacity a central investment criterion. Investors should assess not only a company's current financial position but also its ability to raise additional capital on acceptable terms across multiple development cycles as it executes its business plan.

Conclusion

The AI infrastructure hardware sector represents one of the most significant investment opportunities of the current decade, driven by fundamental shifts in how computational resources are designed, deployed, and optimized for artificial intelligence applications. The convergence of massive market growth, technological innovation, and strategic necessity is creating a landscape where successful investments may generate exceptional returns while unsuccessful ones face substantial risks.

The market fundamentals supporting this investment thesis are compelling and multifaceted. With global AI infrastructure spending projected to reach $499 billion by 2034 [1] and data center infrastructure estimated to require $6.7 trillion in capital investment by 2030 [2], the scale of opportunity extends far beyond typical technology-sector growth patterns. This growth is underpinned by enterprise adoption patterns that suggest sustained, long-term demand rather than a speculative bubble, and it is reinforced by geopolitical priorities that bring government backing for AI infrastructure development.
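The headline projection can be sanity-checked with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The sketch below plugs in the approximately $60 billion 2025 market size from the executive summary and the $499.33 billion 2034 projection from [1]; the helper name is hypothetical. It yields roughly 26.5%, in line with the 26.6% growth rate quoted earlier, and the small gap presumably reflects rounding of the 2025 base figure.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two positive values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures as stated in this thesis: ~$60B in 2025, $499.33B projected for 2034 [1].
    rate = cagr(60.0, 499.33, 2034 - 2025)
    print(f"Implied CAGR: {rate:.1%}")
```

The same function can be run in reverse as a check on any other projection in the thesis: compounding the start value at the implied rate for the stated number of years should reproduce the end value.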

The technology architecture evolution currently underway represents both the greatest opportunity and the greatest risk for investors in this sector. While NVIDIA's GPU-based solutions continue to dominate the training market, the emergence of specialized processors optimized for inference workloads, edge applications, and specific industry use cases is creating new competitive dynamics that may reshape the entire market. The traction of companies developing FPGA and ASIC solutions for specific AI applications suggests that technological specialization can offset some of the scale advantages of incumbent players, but it also highlights the execution risks of betting on specific architectural approaches.

The competitive landscape analysis reveals a market in transition, where established players face new challenges while emerging competitors seek to capture value through innovation and specialization. NVIDIA's current dominance, while substantial, faces potential vulnerabilities in the inference market, supply chain dependencies, and the emergence of custom silicon development by major customers. These vulnerabilities create opportunities for companies that can develop competitive alternatives, but they also demonstrate the challenges of competing against well-established players with substantial resources and ecosystem advantages.

The investment opportunities span multiple categories and risk profiles, from direct hardware investments that offer the potential for exceptional returns but carry substantial technological and execution risks, to infrastructure and platform plays that provide more diversified exposure to the AI growth trend. Supply chain and component opportunities offer additional vectors for value creation, while geographic and market segment specialization strategies may allow companies to build sustainable competitive positions in specific niches.

Risk assessment and mitigation strategies are essential components of any AI infrastructure hardware investment approach. Technology and obsolescence risks, market and competitive pressures, regulatory and geopolitical uncertainties, and financial and execution challenges all require careful evaluation and management. The rapid pace of change in this sector means that risk profiles can shift quickly, requiring active monitoring and adaptive strategies.

The future outlook suggests continued evolution and consolidation as the market matures, with technology roadmaps pointing toward increased specialization, edge deployment, and integration of AI capabilities into existing computing architectures. Market evolution patterns indicate likely consolidation around platform ecosystems and vertical integration strategies, while geographic fragmentation may create both opportunities and challenges for companies seeking global scale.

For investors, the AI infrastructure hardware sector offers the potential for substantial value creation, but success requires sophisticated analysis, careful risk management, and adaptive strategies that can respond to rapid technological and market changes. The companies that successfully navigate this complex landscape, whether through breakthrough technologies, superior execution, or strategic positioning, stand to capture disproportionate value in a market where performance advantages translate directly into competitive moats and sustainable returns.

The investment thesis for AI infrastructure hardware is ultimately based on the recognition that we are witnessing a fundamental transformation in how computational resources are designed and deployed, driven by applications that represent genuine advances in human capability rather than incremental improvements to existing processes. This transformation creates both unprecedented opportunities and substantial risks, requiring investors to balance the potential for exceptional returns against the inherent uncertainties of a rapidly evolving technology landscape.

The key to successful investment in this sector lies not in predicting specific technological winners or market outcomes, but in understanding the fundamental drivers of change and positioning investments to benefit from multiple potential scenarios while managing downside risks. Companies that can deliver superior performance, efficiency, or cost-effectiveness for specific AI applications, while maintaining the flexibility to adapt to changing market requirements, are most likely to generate sustainable value for investors in this dynamic and rapidly growing market.

References

[1] Precedence Research. "Artificial Intelligence (AI) Infrastructure Market Size to Hit USD 499.33 Billion by 2034." February 2025. https://www.precedenceresearch.com/artificial-intelligence-infrastructure-market

[2] McKinsey & Company. "The cost of compute: A $7 trillion race to scale data centers." April 28, 2025. https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/the-cost-of-compute-a-7-trillion-dollar-race-to-scale-data-centers

[3] MIT FutureTech. "What drives progress in AI? Trends in Compute." January 3, 2025. https://futuretech.mit.edu/news/what-drives-progress-in-ai-trends-in-compute

[4] AIMultiple Research. "Top 20 AI Chip Makers: NVIDIA & Its Competitors in 2025." June 10, 2025. https://research.aimultiple.com/ai-chip-makers/

[5] Perplexity AI. "FPGA vs. ASIC for AI." November 20, 2024. https://www.perplexity.ai/page/fpga-vs-asic-for-ai-25yh4OdRRBCq4DUHuDddiQ

[6] Data Center Frontier. "The Evolving Landscape of Data Centers: Power, AI and Capital in 2025." April 18, 2025. https://www.datacenterfrontier.com/sponsored/article/55283194/the-evolving-landscape-of-data-centers-power-ai-and-capital-in-2025

[7] Equinix Blog. "How AI is Influencing Data Center Infrastructure Trends in 2025." January 8, 2025. https://blog.equinix.com/blog/2025/01/08/how-ai-is-influencing-data-center-infrastructure-trends-in-2025/

[8] RCR Wireless. "'Every data center is becoming an AI data center' in 2025." February 11, 2025. https://www.rcrwireless.com/20250211/ai-infrastructure/ai-data-center-2025

[9] The Network Installers. "25+ AI Data Center Statistics & Trends (2025 Updated)." May 5, 2025. https://thenetworkinstallers.com/blog/ai-data-center-statistics/

[10] Epoch AI. "Training Compute of Frontier AI Models Grows by 4-5x per Year." May 28, 2024. https://epoch.ai/blog/training-compute-of-frontier-ai-models-grows-by-4-5x-per-year