The AI Paradox: How Sam Altman's "Gentle Singularity" and Apple's Sobering Research Can Both Be True

A deep dive into the seemingly contradictory narratives shaping our understanding of artificial intelligence's current capabilities and future trajectory

By Bogdan Cristei & Manus AI

In the rapidly evolving landscape of artificial intelligence, two starkly different narratives have emerged that appear to be fundamentally at odds with each other. On one side, OpenAI CEO Sam Altman recently published a blog post titled "The Gentle Singularity," painting an optimistic picture of humanity having crossed an "event horizon" into an era of digital superintelligence. On the other side, Apple researchers have published damning findings showing that current AI models suffer from "complete accuracy collapse" when faced with complex reasoning tasks, suggesting fundamental limitations in what many consider to be our most advanced AI systems.

At first glance, these perspectives seem irreconcilable. How can we be living through a gentle transition to superintelligence while our most sophisticated AI models collapse on controlled reasoning tests? How can both Sam Altman's vision of exponential progress and Apple's evidence of fundamental limitations be true at the same time?

The answer lies not in choosing sides, but in understanding that both perspectives capture different aspects of a complex reality. This apparent paradox reveals something profound about the nature of intelligence itself, the multifaceted character of AI progress, and the nuanced path we're actually taking toward artificial general intelligence. Rather than representing contradictory views, these perspectives illuminate different dimensions of the same phenomenon, offering us a more complete and realistic understanding of where we stand in the AI revolution.

This article explores how these seemingly opposing viewpoints can coexist, what this means for our understanding of current AI capabilities, and how this reconciliation might inform our expectations for the future of artificial intelligence. By examining the evidence from both sides and synthesizing their insights, we can develop a more nuanced and accurate picture of the AI landscape that acknowledges both the remarkable achievements and the significant limitations of today's systems.

Sam Altman's Vision: The Gentle Singularity

In his recent blog post, Sam Altman presents a compelling case that humanity has already crossed a critical threshold in artificial intelligence development [1]. His central thesis is both bold and measured: we are past the "event horizon" of the singularity, yet the transition is proving to be more gradual and manageable than many predicted. This perspective, which he terms the "gentle singularity," offers a framework for understanding AI progress that emphasizes continuity over disruption, adaptation over upheaval.

Altman's argument rests on several key observations about the current state of AI technology. First, he notes that we have "recently built systems that are smarter than people in many ways, and are able to significantly amplify the output of people using them" [1]. This isn't a claim about general intelligence, but rather an acknowledgment that in specific domains and tasks, AI systems have already surpassed human capabilities. The evidence for this is abundant: AI systems can process vast amounts of information faster than any human, generate creative content across multiple modalities, solve complex mathematical problems, and assist in scientific research at unprecedented scales.

Perhaps most significantly, Altman argues that "the least-likely part of the work is behind us" [1]. This represents a crucial shift in perspective about AI development. Rather than viewing current systems as primitive stepping stones to future intelligence, Altman suggests that the fundamental scientific breakthroughs that enable artificial intelligence have already been achieved. The systems we have today—GPT-4, o3, and their contemporaries—represent not just impressive demonstrations but proof-of-concept implementations of the core principles that will drive us toward superintelligence.

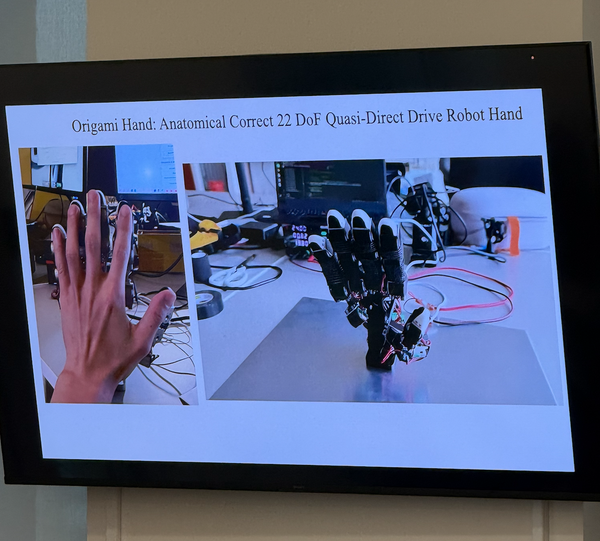

The timeline Altman presents is both ambitious and specific. He writes that 2025 has already seen "the arrival of agents that can do real cognitive work," expects 2026 to bring "systems that can figure out novel insights," and suggests that 2027 may deliver "robots that can do tasks in the real world" [1]. This progression suggests a steady advancement in AI capabilities across different domains, from digital cognitive tasks to physical world interaction.

Central to Altman's vision is the concept of recursive self-improvement and self-reinforcing loops. He observes that "the tools we have already built will help us find further scientific insights and aid us in creating better AI systems" [1]. This creates what he describes as "a larval version of recursive self-improvement," where AI systems contribute to their own advancement. The economic value creation from AI has already started "a flywheel of compounding infrastructure buildout," creating the conditions for accelerated progress.

Altman also addresses the human element of this transition, arguing that people are "capable of adapting to almost anything" and that "wonders become routine, and then table stakes" [1]. This observation captures something essential about human psychology and technological adoption. We quickly adjust our expectations upward, moving from amazement at AI's ability to generate a paragraph to wondering when it can write a novel, from being impressed by medical diagnoses to expecting AI to develop cures.

The productivity gains Altman describes are already measurable. He notes that scientists report being "two or three times more productive than they were before AI" [1]. This isn't speculative future benefit but present-day reality, suggesting that the economic and scientific impact of AI is already substantial and growing. The implication is that we're not waiting for some future breakthrough to unlock AI's potential—we're already living in the early stages of that transformation.

Importantly, Altman's vision acknowledges challenges while maintaining optimism. He recognizes that "whole classes of jobs going away" will be difficult, but argues that "the world will be getting so much richer so quickly that we'll be able to seriously entertain new policy ideas we never could before" [1]. This perspective suggests that the benefits of AI advancement will create new possibilities for addressing its disruptive effects.

The "gentle" nature of Altman's singularity lies in its gradualism. Rather than a sudden, disruptive transformation, he envisions a process where "the singularity happens bit by bit, and the merge happens slowly" [1]. From this perspective, we're climbing "the long arc of exponential technological progress" where change "always looks vertical looking forward and flat going backwards, but it's one smooth curve" [1]. This framing suggests that while the pace of change may feel overwhelming when looking ahead, the actual experience of living through it will feel more manageable and continuous.
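The "one smooth curve" remark can be made concrete with a short calculation. As a simplifying assumption that is not stated in Altman's post, suppose capability follows a pure exponential with a constant growth rate k > 0; the symbols f_0, k, and Δ below are introduced only for illustration.

```latex
\[
f(t) = f_0 e^{kt}, \qquad
\frac{f(t+\Delta)}{f(t)} = e^{k\Delta}, \qquad
\frac{f(t-\Delta)}{f(t)} = e^{-k\Delta}.
\]
```

Neither ratio depends on t. Under this assumption an observer at any point on the curve sees the same picture: the level reached Δ years ahead is larger than the present by the factor e^{kΔ}, and the level Δ years back is smaller by the same factor. The curve looks steep ahead and flat behind from every vantage point, even though its shape never changes.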

Altman's perspective is fundamentally optimistic about human agency and adaptation. He believes that while AI will dramatically expand our capabilities, humans will continue to find new things to do and want, just as we did after previous technological revolutions. The comparison to the Industrial Revolution is implicit but clear: just as that transformation ultimately created new forms of work and value, the AI revolution will generate new opportunities and purposes for human activity.

This vision of the gentle singularity provides a framework for understanding current AI developments not as isolated achievements but as part of a coherent progression toward more capable systems. It suggests that the apparent inconsistencies and limitations in current AI are temporary growing pains rather than fundamental barriers, and that the trajectory toward superintelligence, while not without challenges, is both inevitable and manageable.

Apple's Reality Check: The Fundamental Limitations of AI Reasoning

While Sam Altman paints a picture of steady progress toward superintelligence, Apple's recent research presents a starkly different assessment of current AI capabilities. Two Apple studies are at issue: "GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models" [3], and a follow-up study of large reasoning models, "The Illusion of Thinking," whose findings AI researcher Gary Marcus described as "pretty devastating" [2].

Apple researchers, including Iman Mirzadeh and colleagues, conducted a systematic investigation into whether large language models (LLMs) and large reasoning models (LRMs) possess genuine reasoning capabilities or merely sophisticated pattern-matching abilities [3]. Their findings challenge fundamental assumptions about AI progress and suggest that current approaches to artificial intelligence may have encountered "fundamental barriers to generalisable reasoning" [2].

The study's methodology was both elegant and revealing. Rather than relying on existing benchmarks that might be contaminated by training data, the researchers created GSM-Symbolic, a new evaluation framework based on symbolic templates that could generate diverse variations of mathematical problems. This approach allowed them to test whether AI models could truly reason through problems or were simply recognizing and reproducing patterns from their training data.

The results were striking and concerning. When presented with different instantiations of the same underlying problem—where only numerical values were changed—AI models showed "noticeable variance" in performance [3]. This suggests that the models weren't truly understanding the logical structure of the problems but were instead relying on surface-level pattern recognition. More troubling still, when researchers added a single irrelevant clause to problems—information that appeared relevant but didn't contribute to the solution—performance dropped by up to 65% across all state-of-the-art models [3].
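The template idea described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's actual templates or evaluation code: the template wording, the names, the value ranges, and the `surface_solver` function are all hypothetical. The toy solver stands in for pure surface pattern matching by adding up every number it sees.

```python
import random
import re

# Hypothetical template; wording, names, and ranges are illustrative
# assumptions, not taken from the GSM-Symbolic paper.
TEMPLATE = (
    "{name} picks {a} apples on Monday and {b} apples on Tuesday. "
    "{noop}How many apples does {name} have in total?"
)
# A clause that looks relevant but does not change the answer.
NOOP_CLAUSE = "{c} of the apples are slightly smaller than average. "
NAMES = ["Ava", "Ben", "Chen", "Dara"]


def make_instance(rng, with_noop=False):
    """Return (question, answer) for one random instantiation of the template."""
    a = rng.randint(5, 60)
    b = rng.randint(5, 60)
    c = rng.randint(1, 5)
    noop = NOOP_CLAUSE.format(c=c) if with_noop else ""
    question = TEMPLATE.format(name=rng.choice(NAMES), a=a, b=b, noop=noop)
    return question, a + b  # ground truth comes from the template, not a model


def surface_solver(question):
    """Toy stand-in for a pattern matcher: sums every number in the text."""
    return sum(int(n) for n in re.findall(r"\d+", question))


def accuracy(solver, instances):
    """Fraction of instances the solver answers correctly."""
    return sum(solver(q) == ans for q, ans in instances) / len(instances)


rng = random.Random(0)
plain = [make_instance(rng) for _ in range(100)]
distract = [make_instance(rng, with_noop=True) for _ in range(100)]

print(accuracy(surface_solver, plain))     # 1.0
print(accuracy(surface_solver, distract))  # 0.0
```

The toy solver is perfect on the plain instances and fails on every instance that contains the distractor, because it never models which quantities matter. Real models are far more capable than this caricature, but the sketch shows why comparing scores across template variants can separate structural understanding from surface matching.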

Perhaps most revealing was what happened as problem complexity increased. The Apple researchers found that AI models experienced "complete accuracy collapse" when faced with highly complex reasoning tasks [2]. But the pattern of this collapse revealed something fundamental about how these systems operate. On simple problems, the models would often find correct solutions early in their "thinking" process, then continue processing unnecessarily. As problems became more complex, models would first explore incorrect solutions before eventually arriving at correct ones. But for the most challenging problems, the models would enter what the researchers termed "collapse," failing to generate any correct solutions at all.

Even more concerning was the discovery that as models approached their performance collapse point, they "counterintuitively begin to reduce their reasoning effort despite increasing problem difficulty" [2]. This behavior is the opposite of what we would expect from a genuinely reasoning system, which should increase its analytical effort when faced with more challenging problems. Instead, these AI systems appeared to give up precisely when more sophisticated reasoning was most needed.

The Apple study tested some of the most advanced AI systems available, including OpenAI's o3, Google's Gemini Thinking, Anthropic's Claude 3.7 Sonnet-Thinking, and DeepSeek-R1 [2]. The fact that all of these systems exhibited similar limitations suggests that the problems identified are not specific to any particular architecture or training approach, but rather reflect fundamental issues with current AI methodologies.

The researchers' conclusion was unambiguous: current LLMs are "not capable of genuine logical reasoning" and instead "attempt to replicate reasoning steps observed in their training data" [3]. This distinction is crucial because it suggests that what appears to be reasoning is actually sophisticated pattern matching and memorization. The models have learned to produce text that resembles logical thinking, but they lack the underlying cognitive processes that enable true reasoning.

Gary Marcus, a prominent AI researcher and critic, seized on these findings as evidence that the field's optimism about artificial general intelligence may be misplaced. In his analysis of the Apple paper, Marcus wrote that "anybody who thinks LLMs are a direct route to the sort [of] AGI that could fundamentally transform society for the good is kidding themselves" [2]. This perspective suggests that current AI approaches may represent a dead end rather than a pathway to more capable systems.

The implications of Apple's research extend beyond technical limitations to broader questions about AI development strategy. Andrew Rogoyski of the Institute for People-Centred AI at the University of Surrey suggested that the findings indicate the industry may have reached "a cul-de-sac in current approaches" [2]. This assessment implies that continued scaling of existing architectures and training methods may not lead to the breakthrough capabilities that many in the field anticipate.

The Apple research also highlights the problem of what researchers call "data contamination" in AI evaluation. Many existing benchmarks may have been inadvertently included in training datasets, leading to inflated performance estimates. The GSM-Symbolic approach addresses this by generating fresh problem instances from templates, which are far less likely to have appeared verbatim in training data, providing a more honest assessment of model capabilities.

The study's focus on mathematical reasoning is particularly significant because mathematics represents a domain where logical thinking is paramount and where correct answers are unambiguous. If AI systems struggle with mathematical reasoning—a relatively constrained domain with clear rules—it raises serious questions about their ability to handle the more complex, ambiguous reasoning required for general intelligence.

The Apple researchers were careful to acknowledge limitations in their work, noting that controlled puzzle-solving tasks capture only one aspect of reasoning ability. However, the issues they identified (reliance on pattern matching rather than logical thinking, performance collapse under complexity, and reduced effort when more reasoning is needed) point to problems that extend beyond mathematical domains.

These findings paint a picture of AI systems that are simultaneously impressive and fundamentally limited. They can produce remarkable outputs in many contexts, but their apparent reasoning abilities may be largely illusory. On this reading, the path to artificial general intelligence may be longer and more difficult than current progress implies, requiring fundamental advances in our understanding of intelligence and reasoning rather than simply scaling existing approaches.

The Reconciliation: How Both Perspectives Can Be True

The apparent contradiction between Sam Altman's optimistic vision and Apple's sobering research findings dissolves when we recognize that they are examining different aspects of the same complex phenomenon. Rather than representing incompatible worldviews, these perspectives illuminate different dimensions of artificial intelligence that can coexist and even complement each other. Understanding how both can be true requires us to think more precisely about what we mean by intelligence, reasoning, and progress in AI development.

The Spectrum of Intelligence

The key to reconciling these viewpoints lies in recognizing that intelligence is not a monolithic capability but rather a spectrum of different cognitive functions. Altman's observations about AI systems being "smarter than people in many ways" and Apple's findings about reasoning limitations can both be accurate if we understand that current AI excels in some types of intelligence while failing at others.

Consider the domains where AI demonstrably surpasses human capabilities: processing vast amounts of text, recognizing patterns across enormous datasets, generating creative content, translating between languages, and synthesizing information from multiple sources. In these areas, AI systems genuinely exhibit superhuman performance. A system like ChatGPT can indeed be "more powerful than any human who has ever lived" in terms of its ability to access and synthesize information, as Altman suggests [1].

However, Apple's research reveals that these same systems fail at what we might call "deep reasoning"—the ability to work through novel logical problems step by step, to maintain consistent reasoning under complexity, and to distinguish between relevant and irrelevant information in problem-solving contexts. This type of systematic, logical thinking represents a different category of intelligence than the pattern recognition and information synthesis at which current AI excels.

The distinction becomes clearer when we consider how humans use these different types of intelligence. Much of what we consider intelligent behavior in daily life—understanding context, making connections between ideas, generating appropriate responses to complex situations—relies heavily on pattern recognition and learned associations. These are precisely the areas where current AI systems shine. But when we need to work through a complex logical proof, debug a novel technical problem, or reason through an unfamiliar ethical dilemma, we engage different cognitive processes that current AI systems struggle to replicate.

The Productivity Paradox

This spectrum view of intelligence helps explain why Altman's observations about productivity gains are accurate even while Apple's findings about reasoning limitations are also true. Scientists reporting 2-3x productivity improvements aren't necessarily using AI for deep logical reasoning. Instead, they're leveraging AI's strengths in information processing, literature synthesis, code generation, and hypothesis formation—tasks that benefit enormously from AI's pattern recognition capabilities.

A researcher using AI to analyze thousands of papers, generate initial hypotheses, or draft sections of a manuscript is experiencing genuine productivity gains even if the AI cannot engage in the kind of step-by-step logical reasoning that Apple's study examined. The AI serves as an incredibly powerful research assistant, amplifying human capabilities in areas where pattern recognition and information synthesis are valuable, while the human researcher provides the logical reasoning and critical evaluation that current AI systems lack.

This division of cognitive labor explains why we can simultaneously experience AI as transformative in practical applications while recognizing its fundamental limitations in reasoning tasks. The productivity gains are real because they don't depend on the AI possessing human-like reasoning abilities—they depend on AI's superhuman capabilities in information processing and pattern recognition.

The Narrow Superintelligence Hypothesis

Perhaps the most useful framework for understanding this apparent paradox is what we might call "narrow superintelligence." Current AI systems may represent superintelligent capabilities within specific, constrained domains while remaining fundamentally limited in their general reasoning abilities. This concept helps explain how both Altman's and Apple's observations can be simultaneously true.

In domains like language processing, image recognition, and information synthesis, AI systems have achieved superhuman performance. They can process more information, identify more subtle patterns, and generate more diverse outputs than any human. Within these narrow domains, they genuinely represent a form of superintelligence. This is the superintelligence that Altman observes when he notes that we have systems "smarter than people in many ways."

However, these narrow superintelligences lack the general reasoning capabilities that Apple's research examines. They cannot transfer their problem-solving abilities to novel domains, maintain logical consistency under complexity, or engage in the kind of systematic thinking that characterizes human reasoning at its best. They are simultaneously superhuman and subhuman, depending on the specific cognitive task at hand.

This narrow superintelligence hypothesis suggests that the "event horizon" Altman describes may be real within specific domains while the fundamental limitations Apple identifies remain barriers to general intelligence. We may indeed be experiencing a form of singularity in information processing and pattern recognition while still being far from the kind of general artificial intelligence that can reason through novel problems.

The Dual Track Theory of AI Progress

Another way to reconcile these perspectives is through what we might call the "dual track theory" of AI progress. This framework suggests that AI development is proceeding along two parallel but distinct tracks: one focused on scaling and optimizing current approaches, and another focused on developing fundamentally new approaches to reasoning and intelligence.

Track One represents the continuation and refinement of current large language model approaches. This track is delivering the productivity gains and narrow superintelligence capabilities that Altman observes. Systems are becoming more capable at information processing, more sophisticated in their outputs, and more useful in practical applications. The economic value creation and infrastructure buildout that Altman describes are primarily driven by progress on this track.

Track Two represents the fundamental research needed to address the reasoning limitations that Apple's research identifies. This track requires breakthroughs in our understanding of reasoning, logic, and general intelligence that go beyond simply scaling current architectures. Progress on this track may be slower and less predictable than the steady improvements we see in Track One capabilities.

The value of this dual track framework is that it allows both perspectives to be correct about their respective domains. Altman's optimism about continued rapid progress may be well-founded for Track One capabilities, while Apple's concerns about fundamental limitations may be equally valid for Track Two challenges. The "gentle singularity" may be occurring in Track One domains while Track Two remains a significant unsolved challenge.

The Temporal Dimension

Time provides another crucial dimension for reconciling these perspectives. Altman's vision operates on a timeline of years to decades, while Apple's research examines current capabilities. It's possible that the fundamental limitations Apple identifies represent temporary barriers that will be overcome as new approaches to AI development emerge.

Altman acknowledges that current systems have limitations but argues that "the least-likely part of the work is behind us" [1]. This suggests that while current systems may fail at complex reasoning tasks, the scientific foundations for more capable systems have been established. The reasoning limitations Apple identifies might represent engineering challenges rather than fundamental impossibilities.

Conversely, Apple's findings might indicate that the timeline for achieving general artificial intelligence is longer than Altman's optimistic projections suggest. The fundamental barriers to reasoning that Apple identifies might require years or decades of additional research to overcome, making the path to AGI more gradual than the rapid progression Altman envisions.

The Complementary Nature of the Perspectives

Rather than viewing these perspectives as contradictory, we can see them as complementary contributions to our understanding of AI development. Altman's vision helps us appreciate the transformative potential of current AI capabilities and the economic and social changes they're already driving. Apple's research helps us maintain realistic expectations about current limitations and the challenges that remain to be solved.

Together, these perspectives suggest a more nuanced picture of AI progress: rapid advancement in specific domains combined with persistent challenges in general reasoning, significant productivity gains alongside fundamental limitations, and transformative applications built on systems that lack true understanding. This nuanced view may be more accurate than either pure optimism or pure skepticism about AI capabilities.

The reconciliation of these perspectives also has implications for AI development strategy. It supports continued investment in scaling current approaches to capture their significant benefits, while emphasizing the need for fundamental research into reasoning and general intelligence. The path to AGI may be longer and more complex than scaling alone can deliver, requiring breakthroughs in multiple dimensions of intelligence rather than improvements in a single approach.

Implications for the Future of AI

The reconciliation of Altman's optimistic vision with Apple's sobering research findings has profound implications for how we should think about AI development, investment, and societal preparation. Rather than choosing between unbridled optimism and cautious pessimism, this nuanced understanding suggests a more sophisticated approach to navigating the AI revolution.

Recalibrating Expectations

Perhaps the most immediate implication is the need to recalibrate our expectations about AI progress. The narrow superintelligence framework suggests that we should expect continued rapid advancement in specific domains while recognizing that general artificial intelligence may remain elusive for longer than current hype suggests. This has important consequences for both investors and policymakers who are making decisions based on assumptions about AI timelines.

For businesses and organizations implementing AI solutions, this perspective suggests focusing on applications that leverage AI's current strengths—pattern recognition, information synthesis, and content generation—rather than expecting systems to handle complex reasoning tasks independently. The productivity gains that Altman describes are real and substantial, but they come from augmenting human capabilities rather than replacing human reasoning.

The dual track theory of AI progress also suggests that different types of AI applications may advance at different rates. We should expect continued rapid improvement in AI tools for content creation, data analysis, and information processing, while breakthroughs in reasoning and general intelligence may require longer timeframes and different approaches. This uneven progress pattern has implications for workforce planning, education, and economic policy.

Investment and Research Strategy

The reconciliation of these perspectives suggests a balanced approach to AI research and development investment. The success of current approaches in narrow domains justifies continued investment in scaling and optimizing existing architectures. The economic value creation that Altman describes is already substantial and likely to grow, making investments in current AI technologies economically rational.

However, Apple's findings about reasoning limitations suggest that achieving artificial general intelligence will require fundamental breakthroughs beyond simply scaling current approaches. This implies the need for significant investment in basic research into reasoning, logic, and the foundations of intelligence. The industry's current focus on scaling may need to be balanced with more speculative research into alternative approaches to AI.

The narrow superintelligence hypothesis also suggests opportunities for hybrid approaches that combine AI's strengths with human reasoning capabilities. Rather than viewing AI as a replacement for human intelligence, we might focus on developing systems that amplify human reasoning while handling the information processing tasks at which AI excels. This could lead to more powerful and reliable AI applications than either pure AI or pure human approaches.

Societal Preparation and Policy

From a societal perspective, the reconciliation suggests that we have more time to prepare for the most disruptive aspects of AI while still needing to address the immediate impacts of current capabilities. The job displacement that Altman acknowledges as a challenge may be more gradual than a rapid transition to AGI would suggest, but the productivity gains from current AI are already reshaping work and economic relationships.

This timeline suggests that policymakers should focus on addressing the immediate impacts of narrow AI capabilities—job displacement in specific sectors, the need for retraining and education, and the economic disruption from productivity gains—while also preparing for the longer-term possibility of more general AI capabilities. The "gentle singularity" that Altman describes may indeed be gentle enough to allow for adaptive policy responses.

The reasoning limitations that Apple identifies also have important implications for AI safety and governance. If current AI systems lack genuine reasoning capabilities, then some of the most concerning scenarios about AI risk may be less immediate than they appear. However, the narrow superintelligence in information processing and pattern recognition still poses significant challenges for misinformation, privacy, and economic disruption.

The Evolution of Human-AI Collaboration

Perhaps most importantly, the reconciliation suggests a future characterized by sophisticated human-AI collaboration rather than AI replacement of human intelligence. The complementary nature of human reasoning and AI information processing capabilities suggests that the most powerful applications may come from systems that combine both effectively.

This has implications for education and workforce development. Rather than preparing for a world where AI replaces human thinking, we might focus on developing human capabilities that complement AI strengths. This could include enhanced critical thinking, creative problem-solving, ethical reasoning, and the ability to work effectively with AI tools.

The productivity gains that scientists are already experiencing suggest a model for this collaboration. Researchers use AI to process vast amounts of information, generate hypotheses, and draft initial analyses, while applying human reasoning to evaluate results, design experiments, and draw conclusions. This division of cognitive labor allows both humans and AI to operate in their areas of strength.

Long-term Trajectories

Looking further into the future, the reconciliation suggests several possible trajectories for AI development. One possibility is that continued progress on the current track will eventually overcome the reasoning limitations that Apple identifies, leading to the general artificial intelligence that Altman envisions. The fundamental scientific insights may indeed be in place, with engineering challenges being the primary remaining barriers.

Alternatively, achieving general AI may require fundamental breakthroughs in our understanding of reasoning and intelligence that go beyond current approaches. This could lead to a longer timeline for AGI but potentially more robust and reliable systems when they do emerge. The reasoning limitations Apple identifies might represent fundamental barriers that require new paradigms rather than incremental improvements.

A third possibility is that narrow superintelligence in multiple domains may prove sufficient for most practical purposes, making general AI less critical than current discussions suggest. If AI systems can achieve superhuman performance in most specific tasks while humans provide reasoning and integration, the combination might be more powerful than either general AI or human intelligence alone.

Preparing for Uncertainty

Perhaps the most important implication of reconciling these perspectives is the need to prepare for uncertainty about AI trajectories. Rather than betting everything on a single vision of AI progress, individuals, organizations, and societies should develop adaptive strategies that can respond to different possible futures.

This might include investing in both current AI capabilities and fundamental research, preparing for both gradual and rapid changes in AI capabilities, and developing institutions and policies that can adapt to different AI development scenarios. The reconciliation implies that the future of AI is likely to be more complex and nuanced than either pure optimism or pure pessimism would predict.

The narrow superintelligence framework also suggests that the most important question may not be when we achieve AGI, but how we can most effectively combine human and artificial intelligence to address the challenges facing humanity. The productivity gains that Altman describes and the reasoning capabilities that humans possess may together be more powerful than either alone, suggesting a future of augmented rather than artificial intelligence.

This perspective shifts the focus from replacing human intelligence to amplifying it, from achieving artificial general intelligence to developing effective human-AI collaboration, and from predicting AI timelines to preparing for multiple possible futures. In this view, both Altman's optimism and Apple's caution serve important roles in helping us navigate the complex landscape of AI development with both ambition and wisdom.

Conclusion: Embracing the Complexity

The apparent contradiction between Sam Altman's "gentle singularity" and Apple's research on AI limitations dissolves when we embrace the complexity of intelligence itself. Rather than forcing a choice between optimism and skepticism, we can recognize that both perspectives capture essential truths about the current state and future trajectory of artificial intelligence.

Altman's vision of rapid progress and transformative capabilities is grounded in the very real achievements of current AI systems. The productivity gains, the superhuman performance in specific domains, and the economic value creation he describes are not speculative future possibilities but present realities. Scientists are indeed becoming more productive, businesses are finding genuine value in AI applications, and the infrastructure for more capable systems is being built at an unprecedented pace.

Simultaneously, Apple's research reveals fundamental limitations in current AI approaches that cannot be dismissed or minimized. The inability to engage in genuine logical reasoning, the performance collapse under complexity, and the reliance on pattern matching rather than understanding represent serious constraints on current systems. These limitations suggest that the path to artificial general intelligence may be longer and more challenging than current progress might indicate.

The reconciliation of these perspectives suggests that we are living through a unique moment in technological history: a period of narrow superintelligence that is simultaneously transformative and limited. Current AI systems represent genuine breakthroughs in information processing and pattern recognition while remaining fundamentally constrained in their reasoning capabilities. This creates a landscape where rapid progress and persistent limitations coexist, where transformative applications emerge from systems that lack true understanding.

This nuanced view has important implications for how we approach AI development and deployment. It suggests that we should continue to invest in and benefit from current AI capabilities while maintaining realistic expectations about their limitations. It supports a balanced research strategy that pursues both incremental improvements to existing approaches and fundamental breakthroughs in reasoning and general intelligence.

Perhaps most importantly, this perspective suggests that the future of AI may be characterized more by human-AI collaboration than by AI replacement of human intelligence. The complementary nature of human reasoning and AI information processing capabilities points toward a future where the combination of human and artificial intelligence is more powerful than either alone.

The "gentle singularity" that Altman describes may indeed be gentle precisely because it represents the gradual integration of narrow superintelligence into human activities rather than the sudden emergence of artificial general intelligence. The reasoning limitations that Apple identifies may persist for years or decades, making human intelligence an essential complement to AI capabilities rather than an obsolete predecessor.

This view requires us to hold multiple truths simultaneously: that AI is both revolutionary and limited, that progress is both rapid and constrained, that current systems are superhuman or subhuman depending on the task at hand. This complexity may be uncomfortable for those seeking simple narratives about AI progress, but it may also be more accurate than either pure optimism or pure pessimism.

As we navigate this complex landscape, both Altman's vision and Apple's research serve essential functions. Altman's optimism helps us recognize and capitalize on the transformative potential of current AI capabilities, while Apple's research helps us maintain realistic expectations and focus on the fundamental challenges that remain to be solved. Together, they provide a more complete picture of where we stand in the AI revolution and where we might be heading.

The AI paradox, in which systems can be simultaneously superhuman and fundamentally limited, may not be a contradiction to be resolved but a complexity to be embraced. In recognizing this complexity, we can develop more sophisticated strategies for AI development, more realistic expectations for AI capabilities, and more effective approaches to human-AI collaboration. The future of artificial intelligence may be neither the rapid transition to superintelligence that some envision nor the prolonged struggle with fundamental limitations that others fear, but something more nuanced, more gradual, and ultimately more human than either extreme suggests.

In this view, the question is not whether Sam Altman or Apple is correct about AI capabilities, but how we can learn from both perspectives to navigate the complex reality of artificial intelligence development. The answer lies not in choosing sides but in embracing the paradox, recognizing that the most important truths about AI may be found not in simple narratives but in the tension between competing perspectives. As we continue to develop and deploy AI systems, this nuanced understanding may be our best guide for realizing the benefits while addressing the challenges of this remarkable technology.

References

[1] Altman, S. (2025, June 10). The Gentle Singularity. Sam Altman's Blog. https://blog.samaltman.com/the-gentle-singularity

[2] Milmo, D. (2025, June 9). Advanced AI suffers 'complete accuracy collapse' in face of complex problems, study finds. The Guardian. https://www.theguardian.com/technology/2025/jun/09/apple-artificial-intelligence-ai-study-collapse

[3] Mirzadeh, I., Alizadeh, K., Shahrokhi, H., Tuzel, O., Bengio, S., & Farajtabar, M. (2024). GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models. arXiv preprint arXiv:2410.05229. https://arxiv.org/pdf/2410.05229