The Berkeley Agentic AI Summit 2025: A Deep Dive into the Future of Intelligent Agents

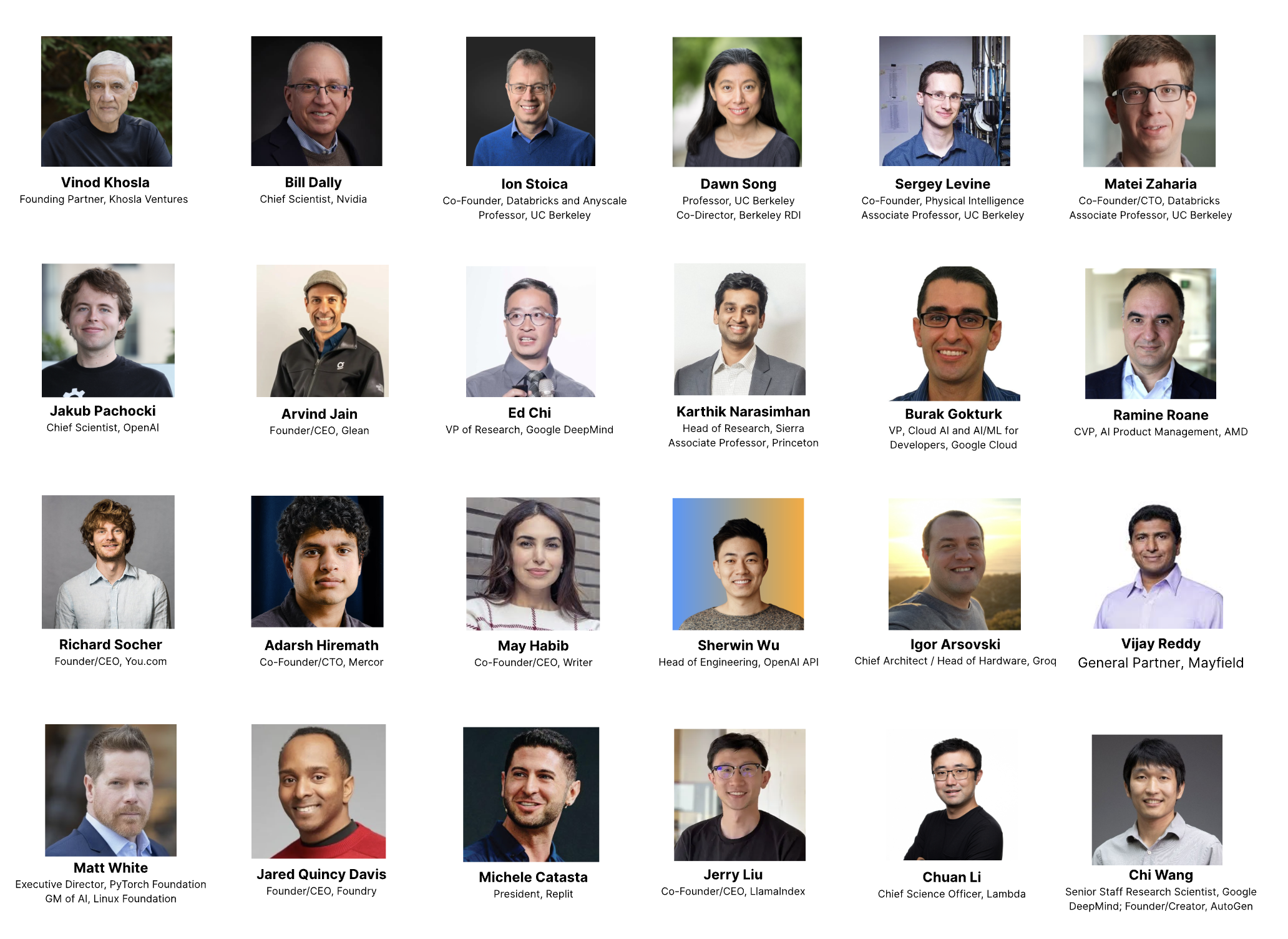

The year 2025 has been declared "the year of agents," and nowhere was this more evident than at the Berkeley Agentic AI Summit held on August 2nd at UC Berkeley. With over 2,000 in-person attendees and 10,000 online participants, this landmark event brought together visionary leaders from academia, pioneering entrepreneurs, experts from leading AI organizations, venture capitalists, and policymakers to explore the rapidly evolving landscape of agentic artificial intelligence.

Hosted by Berkeley's Center for Responsible Decentralized Intelligence (RDI), the summit built upon the momentum of their globally popular LLM Agents MOOC series, which has attracted over 25,000 registered learners worldwide. The event showcased not just the latest technological advancements, but also the practical challenges and breakthrough solutions that are shaping how AI agents will transform industries, enterprises, and society at large.

What emerged from the day-long conference was a clear picture of an inflection point in AI development. We are moving beyond simple chatbots and language models toward sophisticated, multi-agent systems capable of autonomous reasoning, tool use, and complex problem-solving. The speakers painted a compelling vision of agents that don't just automate tasks, but actively empower individuals, enrich societies, and amplify human potential.

This comprehensive analysis covers the key insights, technical breakthroughs, and strategic implications discussed across five major sessions, from infrastructure and frameworks to enterprise adoption and industry transformation. The conversations revealed both the immense promise and the significant challenges that lie ahead as we navigate toward an AI-powered future where intelligent agents become integral to how we work, research, and solve complex problems.

Session 1: Building Infrastructure for Agents - The Hardware Foundation

Bill Dally's Vision: Hardware as the Spark for AI Revolution

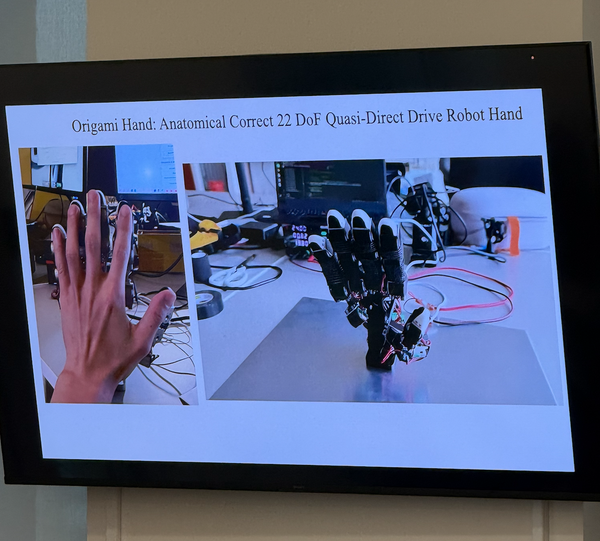

The summit opened with a powerful keynote from Bill Dally, NVIDIA's Chief Scientist, who provided both historical context and future vision for the hardware infrastructure powering AI agents. Dally began with a compelling demonstration of "Elegante," an AI agent developed by NVIDIA's Toronto AI lab that functions essentially as a second-year graduate student in computational chemistry.

When asked a question like "What is the activation energy of caffeine?" – a query particularly relevant to Dally that morning – Elegante demonstrates the sophisticated architecture that defines modern AI agents. The system operates as a hierarchy of specialized agents, each an LLM with specific state and capabilities, working together to generate molecular geometry, optimize structures, submit computational jobs for density functional theory calculations, and post-process results to deliver accurate answers.

This example illuminated Dally's central thesis about agent architecture: the most effective agents combine large language models with tools, memory, policy, and internal state. As he emphasized, "You're better off with a one billion parameter model attached to a calculator than with a trillion-parameter model trying to do that calculation just with language." This insight challenges the prevailing assumption that bigger models are always better, instead highlighting the power of specialized tool integration.

The Hardware Revolution Behind AI Agents

Dally's presentation revealed the staggering scale of computational advancement that has enabled the current AI revolution. Over the past decade, there has been a 10 million factor increase in the number of floating-point operations required to train state-of-the-art models. This growth has followed two distinct phases: during the era of vision models (AlexNet through ResNet), the growth rate was approximately 3x per year. However, since the "Attention is All You Need" paper introduced transformers in 2017, the slope has accelerated to 16x per year – a pace that presents significant challenges for hardware development.

What makes this growth particularly remarkable is where the performance gains have actually come from. Contrary to popular belief about Moore's Law, process technology improvements have contributed only a 3x factor out of the total 4,000x improvement from NVIDIA's Kepler generation through their latest Blackwell architecture. The real drivers of performance have been far more innovative.

The single largest contributor has been advances in number representation, moving from FP32 in the Kepler generation down to FP4 in Blackwell, providing a 32x improvement. This reduction in precision is possible because AI workloads don't require the same numerical precision as traditional scientific computing. The second major contributor has been the development of complex instructions, particularly matrix multiply operations, which provide a 12.5x improvement by amortizing instruction overhead across many operations.

Dally illustrated this with a striking example of instruction overhead. In their 45nm process, a half-precision floating multiply-add operation consumes about 1.5 picojoules of energy, but fetching the instruction, decoding it, fetching operands, and storing results requires about 30 picojoules – a 2000% overhead. By moving to matrix multiply instructions that perform 1024 operations under a single instruction, this overhead drops to just 16%, and in today's GPUs, it's around 11%.

The Distributed Future of Agent Infrastructure

The infrastructure session continued with insights from other industry leaders who painted a picture of increasingly distributed and specialized agent systems. Ramine Roane from AMD emphasized the importance of open-source software stacks and GPU-agnostic development, highlighting how agents will operate across edge devices, endpoints, and cloud infrastructure in a collaborative ecosystem.

Chuan Li from Lambda presented a thought-provoking perspective by asking: "What if agents were human?" This framing led to insights about how we might build infrastructure for agents by learning from the infrastructure we've built for human society. Li noted that while agents share similarities with humans – they have digital neurons, are trained on human-generated data, and are aligned with human instructions – they also possess unique capabilities: they can run much faster, with higher precision, and can memorize far more than humans.

This led to fascinating questions about the economics of agent systems. Li proposed thinking about tokens as currency in an agent economy, raising questions about who serves as the banks, whether humans can generate tokens, and what the exchange rate might be between human tokens and agent tokens. These economic considerations will become increasingly important as agent systems scale and interact with human economic systems.

The infrastructure discussion also touched on the integration challenges facing agent deployment. Li used the analogy of self-driving cars, which function as agents that have successfully integrated into existing human infrastructure without requiring fundamental changes to roads or traffic systems. This raises important questions about whether we should adapt our infrastructure for agents or design agents to work within existing systems.

Session 2: Frameworks & Stacks for Agentic Systems

The second session, featuring keynotes and focus talks from leaders at Databricks, OpenAI, Google DeepMind, LlamaIndex, and other major platforms, explored the software infrastructure and frameworks needed to build robust agentic systems. The session addressed critical questions about orchestration, context engineering, and the standardization needed for agent interoperability.

The discussions highlighted the tension between building specialized frameworks for current model limitations versus preparing for future models with enhanced capabilities. As models improve their reasoning abilities and context handling, many of the current "hacks" in context engineering may become obsolete, potentially simplifying agent architectures significantly.

Session 3: Foundations of Agents - Safety, Security, and Core Capabilities

The foundations session brought together some of the most influential voices in AI research, including Dawn Song from UC Berkeley on safety and security, Ed Chi from Google DeepMind on universal assistants, Jakub Pachocki from OpenAI on automating discovery, and Sergey Levine on reinforcement learning for agents.

The session addressed fundamental questions about building safe, secure, and reliable agent systems. The discussions likely covered critical topics such as alignment, robustness, and the technical challenges of ensuring agents behave as intended when deployed in real-world environments.

Session 4: Next Generation Enterprise Agents - From Prototype to Production

The Platform Revolution: Beyond Model Selection

The enterprise agents session provided some of the most practical and immediately actionable insights of the day. Burak Gokturk from Google opened with a crucial observation about the shifting landscape of enterprise AI adoption. "Maybe about two years ago, it was the choice of the model, but that's changing. It's not a choice of the platform," he explained, pointing to the rapidly evolving AI leaderboards where different companies regularly achieve top performance.

This shift toward platform thinking reflects a maturing understanding of enterprise AI needs. Rather than betting on a single model, successful enterprises are building capabilities around model gardens that provide access to multiple models, model builders for customization through fine-tuning and distillation, and agent builders with comprehensive toolsets for orchestration, extensions, connectors, and document processing.

Gokturk emphasized three critical trends shaping enterprise agent adoption. First, the integration of search with large language models has become essential. LLMs are trained on historical data and cannot access real-time information like "the score of the game from last night." They also hallucinate by design – creativity requires some departure from strict factuality – and they cannot provide citations, which is crucial for enterprise applications where source attribution is mandatory.

Second, the era of simple conversational AI is ending. As Gokturk defined it, agents are systems that "cannot do just by themselves" – they require tool integration, function calling, and the ability to combine multiple capabilities. However, function calling presents significant technical challenges. When choosing from 100 available functions, current models achieve only 50-70% accuracy, highlighting the importance of starting with simpler agent designs rather than overwhelming systems with too many options.

Multi-Agent Breakthroughs: The Core Scientists Example

Perhaps the most striking example of agent capabilities came from Gokturk's discussion of "Core Scientists," a multi-agent medical discovery system that represents a paradigm shift in scientific research. This system functions as a comprehensive research assistant for medical researchers and doctors, capable of reading vast amounts of literature across multiple domains, drawing connections, self-regulating its research process, and conducting ethics checks.

The results have been extraordinary. The system has demonstrated the ability to generate research hypotheses and discoveries that would typically take human researchers decades to develop, completing this work in a matter of days. Gokturk shared feedback from domain experts, including one genetics researcher with over a million followers who stated: "After what I've seen today related to an AI model, I can confidently claim that the scientific process will never be the same again. He's 99% certain that all diseases, including cancer, will be cured within roughly ten years."

This represents more than incremental improvement – it suggests a fundamental acceleration of scientific discovery that could compress decades or centuries of research into much shorter timeframes. The implications extend far beyond medicine to any field requiring extensive literature review, hypothesis generation, and systematic investigation.

Enterprise Reality: The Gap Between Prototype and Production

Arvind Jain, founder and CEO of Glean, provided a sobering perspective on the current state of enterprise AI adoption. While acknowledging that "every enterprise today is thinking about how they're going to transform their business with AI," he highlighted the significant gap between prototype capabilities and production deployment.

The challenges are both technical and organizational. On the technical side, enterprises struggle with fundamental data issues: information is often uncurated, outdated, or exists only in people's minds rather than documented systems. Even when data exists, it's frequently scattered across multiple systems with overlapping and inconsistent information. The challenge of bringing precisely the right information to agents for specific tasks remains a significant bottleneck.

Governance and security present equally complex challenges. Enterprise information is typically governed by complex permission structures – certain departments can access specific information while others cannot. When deploying agents, organizations must ensure these governance restrictions are maintained. As Jain noted, agents should only have access to information that the human they're representing would have access to.

Perhaps most concerning, many large enterprises have admitted they "don't trust their own governance." Years of accumulated data with improperly set permissions create security vulnerabilities that become magnified when AI systems can easily discover and access information. This has led to a new category of security challenges: preparing for agent rollouts by identifying and fixing permission vulnerabilities before deployment.

Search-Powered Agents: The You.com Approach

Richard Socher from You.com presented a compelling case study in building specialized agent capabilities that can compete with much larger organizations. His company has focused specifically on deep research agents, creating systems that produce exhaustive 30-page research reports with over 500 sources and citations.

The key insight from You.com's approach is that smaller organizations can achieve superior results by focusing intensively on specific use cases rather than trying to build general-purpose systems. Their deep research agents spend significantly more computational resources – more tokens, more time, more citations – on each research task, resulting in higher quality outputs than general-purpose systems.

Remarkably, when evaluated by OpenAI's own models, You.com's research reports consistently outperformed OpenAI's deep research mode. This success demonstrates that focused specialization and increased computational investment in specific domains can overcome the advantages of larger, more general systems.

Socher emphasized the importance of open-source benchmarking for validating these claims. Rather than relying on marketing assertions, You.com provides GitHub repositories where anyone can download, run, and verify their benchmark results. This commitment to transparency and reproducibility sets a valuable standard for the industry.

The enterprise session concluded with practical advice for organizations beginning their agent journey. The consensus among speakers was to start simple, focus on specific use cases, ensure robust data governance, and maintain human oversight for critical decisions. The gap between current capabilities and production-ready systems remains significant, but the trajectory toward transformative enterprise applications is clear.

Session 5: Agents Transforming Industries - From Code to Customer Service

Michele Catasta (Replit): The Evolution of Coding Agents

Michele Catasta, President of Replit, provided a fascinating retrospective on the evolution of coding agents, tracing their development from simple reactive systems to sophisticated autonomous developers. His presentation revealed both the rapid progress and the surprising insights about what actually works in practice.

Catasta began by acknowledging that coding agents were among the first successful implementations of agentic AI. The journey started with React-based architectures where agents would observe code execution, create feedback loops, and iterate on solutions. This was "maybe the inception and the first open-source project to show that code engagements were actually possible."

However, the path forward wasn't linear. As the field matured, developers added significant complexity to agent architectures, moving from simple React implementations to sophisticated multi-agent systems with specialized roles: editor agents, manager agents, and verifier agents. These systems showed promise initially but hit a performance plateau in the second half of 2024.

The key insight that emerged was counterintuitive: "imposing more structure in the core React is actually harder." Systems with more imposed structure showed initial improvements but quickly plateaued in performance. Meanwhile, frontier AI labs were taking the opposite approach – removing structure from agent scaffolds and allowing models to become more proficient at deciding their next steps autonomously.

This led to a fundamental shift in coding agent architecture. As Catasta explained, most successful coding agents on the market have embraced a return to simpler architectures centered around the LLM's decision-making capabilities. The model decides when to take actions, which actions to take, and can execute several actions in sequence until it decides to stop or return control to the user.

This user experience pattern has become familiar across platforms like Replit, Cursor, and other coding assistants. The success of this approach validates a broader principle: as frontier models improve, especially with massive reinforcement learning scaling, they become better at coherent long-term reasoning and agent trajectories.

For companies building agent scaffolds, this evolution leaves several critical areas for innovation. Context management remains crucial, with sub-agents providing ways to delegate work and return condensed results to maintain focus. Environment quality is equally important – the environment provides feedback to agents about their progress and defines the action space through available tools and integrations.

Catasta emphasized a significant gap between research benchmarks and real-world coding needs. Most current benchmarks focus on isolated tasks like creating pull requests from existing issues, but real-world development involves maintainable, iterative development starting from scratch. The aesthetics of code matter enormously, and integrating humans into the development loop remains challenging.

His call to action for the research community was clear: "We need more evolves." Current public evaluations like SWE-Bench are limited and focus on single tasks, while major product impact requires evaluating code generation from scratch, functionality development, and especially long-term development trajectories.

Karthik Narasimhan (Sierra): Building Reliable AI Agents

Karthik Narasimhan, Head of Research at Sierra and Associate Professor at Princeton, addressed perhaps the most critical challenge facing agent deployment: reliability. His presentation began with a telling audience poll – when asked how many attendees would trust an AI agent to completely handle their taxes, only about 20 hands went up in a room full of AI enthusiasts.

This low confidence isn't due to capability limitations, Narasimhan explained, but rather reliability concerns. The core challenge isn't that agents can't perform tasks, but that users don't find them consistently trustworthy for autonomous operation.

Narasimhan broke down reliability into two essential components. First, agents must be predictable – consistently acting in ways users can anticipate. Second, they must be aligned not just with safety requirements, but with individual user preferences and decision-making patterns. True autonomy requires agents that understand and mirror their users' tastes and judgment.

The path to reliability begins with measurement. Citing Peter Drucker's famous principle that "you can't improve what you can't measure," Narasimhan emphasized the need for comprehensive evaluation systems that assess entire agent systems, not just individual components like models or tools.

He highlighted two benchmark examples from his work. SWE-Bench, released two years ago, addressed the limitation of coding evaluations that only tested code writing ability. Real software engineering involves much more than typing code – it requires understanding systems, debugging, and iterative problem-solving. SWE-Bench aimed to evaluate these more realistic software engineering tasks.

More recently, Sierra developed Tau Bench, specifically designed for customer support agents. This benchmark introduced a crucial "pass@K" metric that measures consistency across repeated executions of the same task. In customer support, success isn't about average performance on diverse tasks – it's about reliably handling the same types of queries thousands or millions of times.

The results revealed significant reliability gaps. Many models with tool-calling capabilities failed even when attempting the same task multiple times, highlighting the consistency challenges that prevent full autonomous deployment.

Beyond measurement, Narasimhan outlined several approaches for improving reliability. Careful interface design, including thoughtful tool selection and context management, forms the foundation. Self-evaluation capabilities, memory systems, and targeted fine-tuning provide additional reliability improvements.

Looking forward, Narasimhan identified two promising directions for enhancing agent reliability. First, making agents more proactive – systems that can recognize their limitations, ask for help when needed, and communicate uncertainty rather than simply reacting to user inputs. Second, enabling agents to improve over time through experience, mirroring how humans develop expertise and reliability through practice.

Multi-agent networks represent another promising approach, where agents can verify each other's work and provide additional layers of trust and validation. This distributed approach to reliability could address many of the consistency challenges facing individual agent systems.

Adarsh Hiremath (Mercor): The Future of Work in an AI Economy

Adarsh Hiremath, Co-Founder and CTO of Mercor, provided insights into how AI agents are reshaping the fundamental economics of expertise and human-AI collaboration. Mercor operates at the intersection of AI development and human talent, automating recruitment processes while placing expert contractors who drive model improvement through post-training and evaluation work.

Hiremath's perspective revealed a critical shift in AI development bottlenecks. While early AI development was constrained by compute resources, the current limitation is increasingly data – specifically, high-quality human expertise for training and evaluation. As foundational models have become more capable, taking them to the next level requires sophisticated post-training techniques that demand expert human input.

The evolution of training approaches illustrates this shift. Early supervised fine-tuning used simple prompt-completion pairs. Reinforcement learning from human feedback typically involved multiple-choice questions where humans selected the best response. Today's thinking models and reasoning agents require much more granular feedback – experts must evaluate and reward specific reasoning chains and decision-making processes.

This creates massive demand for expert-level human data, not crowdsourced labeling. As Hiremath explained, modern AI improvement isn't about identifying hot dogs or stop signs – it requires the best Rust engineers to teach models programming, Olympiad medalists to improve mathematical reasoning, and domain experts across finance, law, and other specialized fields.

Mercor's platform connects over 700,000 experts with AI companies needing specialized knowledge for model improvement. The company has automated much of the sourcing, vetting, and placement process using their own agents, creating a recursive system where AI agents help improve AI systems through expert human guidance.

A key insight from Mercor's work is that "a model is only as good as its eval, and an agent is only as good as its eval." Evaluation frameworks drive all modeling work, making the design and implementation of robust evaluation systems critical for agent development.

The panel discussion revealed important insights about human-AI collaboration patterns. Rather than the common mental model of giving agents complete tasks to execute from zero to 100%, the reality involves more bidirectional relationships. Agents might complete 70% of a task, with human experts providing the final 30% of refinement and validation.

This collaboration model suggests a future where there's a premium on that final 30% of human expertise – the nuanced judgment, creative problem-solving, and quality assurance that agents cannot yet provide autonomously. For knowledge workers, this represents both an opportunity and a challenge: agents will handle routine work while humans focus on higher-value activities requiring expertise and judgment.

The Panel: Practical Insights on Agent Deployment

The session concluded with a panel discussion that provided practical insights for organizations deploying agent systems. Several key themes emerged from the conversation between industry leaders.

On the timeline for human-agent collaboration, the panelists agreed that we're already seeing humans function as managers of multiple agent systems, orchestrating parallel workflows and tracking progress across multiple automated tasks. However, the path to complete automation remains much longer than many anticipate, particularly for non-technical users.

Security and explainability emerged as critical requirements for mission-critical applications. As one panelist noted, "if you don't, as an overseer of those agents, understand what decisions were made, why they were made, and actually have points of interdiction as appropriate, it's going to be very hard to allow these agents to operate on critical systems."

The discussion highlighted the importance of maintaining human oversight and intervention capabilities, especially during the current phase of agent development where reliability remains inconsistent. The most successful deployments combine agent capabilities with human expertise, creating collaborative systems that leverage the strengths of both.

Looking ahead, the panelists identified agent learning and multi-agent communication as the most promising areas for development. Agents that can improve through experience and coordinate effectively with other agents will unlock new categories of automation and productivity enhancement.

The Fireside Chat: Vinod Khosla's Vision for AI's Future

The summit concluded with a fireside chat featuring Vinod Khosla, founding partner of Khosla Ventures and one of Silicon Valley's most influential investors in AI and technology. Khosla's conversation with Dawn Song provided profound insights into the investment landscape, innovation dynamics, and his bold predictions for AI's transformative impact.

The Power of University Research and Distributed Innovation

Khosla began by emphasizing the critical role of university research and distributed innovation in driving AI breakthroughs. His investment philosophy centers on a key observation: "One of the ways I look at what areas will have large breakthroughs is to look at universities and say, where is the best talent going?"

He traced his AI investment journey back to 2011-2012, when Khosla Ventures began seriously discussing AI, leading to their first investments around 2014. While those early investments didn't succeed – it was the era of ImageNet and more limited capabilities – Khosla recognized the importance of talent flow and idea diversification.

"Our progress doesn't happen if most of the talent is at the big companies and the big labs," Khosla explained. "They tend to do narrow exploration in a narrow domain, as opposed to looking more blindly and widely." This insight drives his conviction that the more university students, individual researchers, and independent hackers work on a problem, the faster the rate of progress.

This philosophy directly challenges the conventional wisdom that only well-resourced big tech companies can drive AI innovation. Khosla argued that resource constraints actually drive innovation rather than hinder it.

The Advantage of Resource Constraints

Perhaps Khosla's most counterintuitive insight was his strong belief that lack of resources is an advantage, not a disadvantage, for innovation. "If you had ten or 100 times the money, you would be less likely to innovate than if you had less money. I'm absolutely convinced," he stated emphatically.

He illustrated this principle with a concrete example from robotics research. When Google researchers work on robotic systems and need more training data, their obvious solution is to deploy hundreds or thousands of robots because they can afford it. "Because they can afford it, they get lazy about finding other ways to get much better or more efficient data," Khosla observed.

This resource abundance makes researchers "lazy and less creative, looking for new solutions." In contrast, researchers with limited resources are forced to find more innovative, efficient approaches. "If you have less resources within bounds, you're more likely to come up with more invention and innovation than if you have lots of resources."

This principle – that necessity is the mother of invention – provides hope for university researchers, startups, and smaller organizations competing against well-funded tech giants. The constraint forces creativity that abundance can actually inhibit.

The OpenAI Investment Decision

Khosla's decision to invest in OpenAI in 2018 exemplified his strategic thinking about market dynamics and innovation vectors. His analysis was remarkably straightforward: "I looked at the world then in 2018. There were two research labs in AI that mattered. There was university research, but there was Google, and there was Baidu in China. I thought the world needed more than those two."

Google, despite having "probably still the best repertoire of researchers" and "very good talent," was moving at their own pace without real competitive pressure. Baidu was essentially copying Google's approach, setting up offices nearby and trying to hire their people. Neither represented the kind of focused, fast-moving innovation vector that Khosla believed was necessary.

OpenAI offered something different: "What was needed was a small, really good team. And it was a small, really great team that wanted to move fast. Those were the only characteristics needed in 2016."

Following Data, Not Expert Opinion

Khosla's investment approach relies heavily on data trends rather than expert predictions. In 2016, he gave a talk at the National Bureau of Economic Research where he presented about 20 curves showing AI capability progress across different dimensions compared to human performance. While the absolute performance looked "silly compared to human performance," the rate of growth was exponential.

"Instead of saying what people believed, what other people believed, and saying these robots are silly, they tell and 80 ask an 83-year-old man if they're pregnant," Khosla noted, referencing common AI failures of the time. "I said, 'What's the rate of change?' When you followed that curve, it was obvious it was only a matter of time before AI exceeded human capability in any of these dimensions."

This data-driven approach led him to ignore expert opinion entirely. His presentation included projections from about 50 experts on AGI timelines, which "varied from 5 years to 500 years or never." His conclusion: "Expert opinion doesn't matter. What matters is just keep innovating step by step and see where it gets you."

The rate of progress was accelerating because both talent input and creativity were increasing. While Khosla couldn't predict whether the breakthrough would come in three years (it happened with ChatGPT in 2022) or five to seven years later, he knew it would happen and would be significant enough that being early wouldn't be a problem.

Bold Predictions for the Future

The fireside chat concluded with Khosla's characteristically bold predictions about AI's near-term impact. He referenced a tweet he had posted that morning, making a prediction that "most of you won't believe today": "Before 2030, we will stop using keyboards and speech for [input]."

Implications for Entrepreneurs and Researchers

Khosla's insights provide a roadmap for entrepreneurs and researchers working in AI and agentic systems. His key messages include:

Embrace Resource Constraints: Rather than viewing limited resources as a disadvantage, recognize them as a driver of innovation and creativity. Constraints force more efficient and novel solutions.

Focus on Talent and Ideas: The concentration of talent and diversity of approaches matter more than absolute resource levels. University research and distributed innovation drive breakthrough progress.

Follow Data, Not Experts: Exponential curves and measurable progress indicators provide better guidance than expert opinion, which tends to be highly variable and often conservative.

Move Fast with Small Teams: The OpenAI model of small, excellent teams moving quickly can compete effectively against much larger, well-resourced organizations.

Think in Terms of Vectors: Look for different approaches and vectors of innovation rather than incremental improvements within existing paradigms.

Khosla's perspective reinforces many of the themes that emerged throughout the summit: the importance of specialized approaches, the power of focused innovation, and the potential for smaller organizations to achieve breakthrough results through creativity and focus rather than brute-force resource application.

His confidence in continued exponential progress, combined with his track record of successful early-stage AI investments, provides an optimistic framework for thinking about the future of agentic AI and the opportunities available to innovative researchers and entrepreneurs.

Key Challenges and Technical Bottlenecks

Throughout the summit, several critical challenges emerged that currently limit the deployment of production-ready agent systems:

Function Calling Accuracy

One of the most significant technical bottlenecks is the accuracy of function calling. When agents must choose from 100 available functions, current models achieve only 50-70% accuracy. This limitation forces developers to start with simpler agent designs and gradually increase complexity as the underlying models improve.

Data Governance and Security

Enterprise deployment faces substantial challenges around data governance, permission management, and security. Many organizations lack confidence in their own data governance systems, creating vulnerabilities when AI agents can easily discover and access information. The cost of finding security vulnerabilities is decreasing rapidly with AI, creating both risks and opportunities for system hardening.

Reliability and Consistency

The reliability of agent outputs remains inconsistent, particularly in multi-agent systems where coordination and communication between agents can introduce additional failure modes. As one panelist noted, "If we can't solve reliability with LLMs, can we really solve it with agents?"

Enterprise Integration

The gap between prototype capabilities and production deployment remains significant. While agents can be built quickly, integrating them into existing enterprise systems with proper governance, security, and reliability requires substantial additional work.

Technical Breakthroughs and Innovations

Despite these challenges, the summit showcased several breakthrough applications that demonstrate the transformative potential of agent systems:

Scientific Discovery Acceleration

The Core Scientists system represents a paradigm shift in research methodology, compressing decades of scientific work into days or weeks. This capability could fundamentally accelerate progress in medicine, materials science, and other research-intensive fields.

Specialized Agent Performance

You.com's success in building research agents that outperform much larger systems demonstrates that focused specialization can overcome resource disadvantages. This suggests opportunities for smaller organizations to build world-class capabilities in specific domains.

Infrastructure Optimization

NVIDIA's hardware innovations show how specialized infrastructure can enable new categories of agent applications. The 4,000x performance improvement over the past decade has been driven primarily by algorithmic innovations in number representation and instruction design rather than traditional process improvements.

Future Outlook: The Path Forward

The Berkeley Agentic AI Summit painted a picture of an industry at an inflection point. While significant technical and organizational challenges remain, the trajectory toward transformative agent capabilities is clear. Several key themes emerged for the path forward:

Platform-Centric Development

The shift from model selection to platform capabilities will continue, with successful organizations building comprehensive toolsets for agent development, deployment, and management rather than betting on individual models.

Gradual Enterprise Adoption

Enterprise adoption will likely follow a gradual path, starting with simple, well-defined use cases and expanding as reliability and governance challenges are addressed. Human-in-the-loop approaches will remain critical during this transition period.

Specialized vs. General Agents

The success of specialized agents like Core Scientists and You.com's research agents suggests that focused, domain-specific systems may achieve breakthrough performance before general-purpose agents reach similar capabilities.

Infrastructure Evolution

Continued hardware and software infrastructure development will be critical for supporting the computational demands of sophisticated agent systems. The emphasis on open-source development and standardization will help ensure broad accessibility.

Regulatory and Ethical Considerations

As agents become more capable and autonomous, questions of safety, security, and ethical deployment will become increasingly important. The involvement of academic institutions like Berkeley RDI in hosting these discussions reflects the need for responsible development practices.

Conclusion: Agents as the Next Computing Platform

The Berkeley Agentic AI Summit 2025 demonstrated that we are witnessing the emergence of agents as a new computing platform. Like previous platform shifts from mainframes to PCs to mobile to cloud, the transition to agentic AI will create new categories of applications, business models, and user experiences.

The technical foundations are rapidly solidifying, with breakthrough hardware capabilities, sophisticated software frameworks, and increasingly capable models. The enterprise adoption challenges, while significant, are being actively addressed by organizations that recognize the transformative potential of agent technologies.

Perhaps most importantly, the summit showcased applications like Core Scientists that suggest we are approaching capabilities that could fundamentally accelerate human progress in science, medicine, and other critical domains. The prediction that "all diseases, including cancer, will be cured within roughly ten years" may seem optimistic, but the demonstrated ability of agent systems to compress decades of research into days suggests that such acceleration may indeed be possible.

As we move forward into what has been declared "the year of agents," the insights from Berkeley's summit provide both a roadmap for technical development and a framework for thinking about the broader implications of this technological transformation. The future of agentic AI is not just about building better tools – it's about fundamentally amplifying human capability and accelerating progress toward solving our most important challenges.

The conversations and demonstrations at Berkeley made clear that this future is not a distant possibility but an emerging reality. Organizations, researchers, and policymakers who understand and prepare for this transition will be best positioned to harness the transformative potential of agentic artificial intelligence.

This analysis is based on transcripts and materials from the Berkeley Agentic AI Summit 2025, hosted by UC Berkeley's Center for Responsible Decentralized Intelligence. The summit brought together over 2,000 in-person attendees and 10,000 online participants to explore the future of agentic AI systems.

URL: https://rdi.berkeley.edu/events/agentic-ai-summit

Written by Bogdan Cristei and Manus AI