Your AI is a Goldfish: Why Context Engineering is the New Secret Weapon for Startups

If you’ve ever tried to have a serious conversation with an AI, you know the feeling. You start strong, laying out your brilliant idea. The AI seems to get it. Then, five messages later, it asks a question you answered at the very beginning. It’s like talking to a goldfish—a very, very smart goldfish with access to all of human knowledge, but a goldfish nonetheless.

For entrepreneurs and investors pouring money into the AI gold rush, this isn't just frustrating; it's a multimillion-dollar problem. You're building the next game-changing AI product, but under the hood, it has the memory of a housefly. This is where the magic of context engineering comes in. It’s the difference between building a forgetful toy and a truly intelligent partner. And frankly, it’s the skill that will separate the AI winners from the wannabes.

From Prompt Whisperer to AI Architect

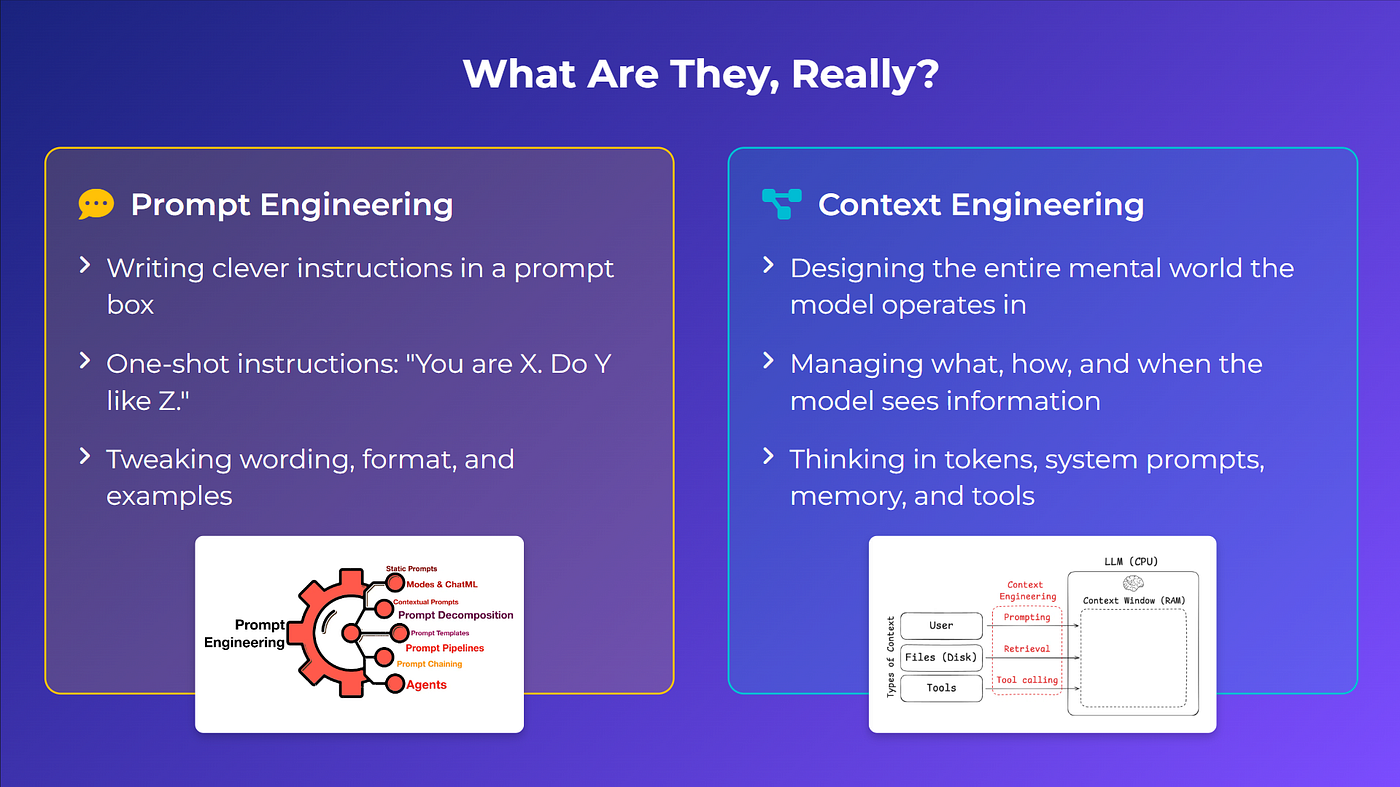

You’ve probably heard of “prompt engineering.” It’s the art of whispering sweet nothings into an AI’s ear to get it to do what you want. It’s useful, like knowing how to order a complicated coffee. But context engineering is like designing the entire coffee shop.

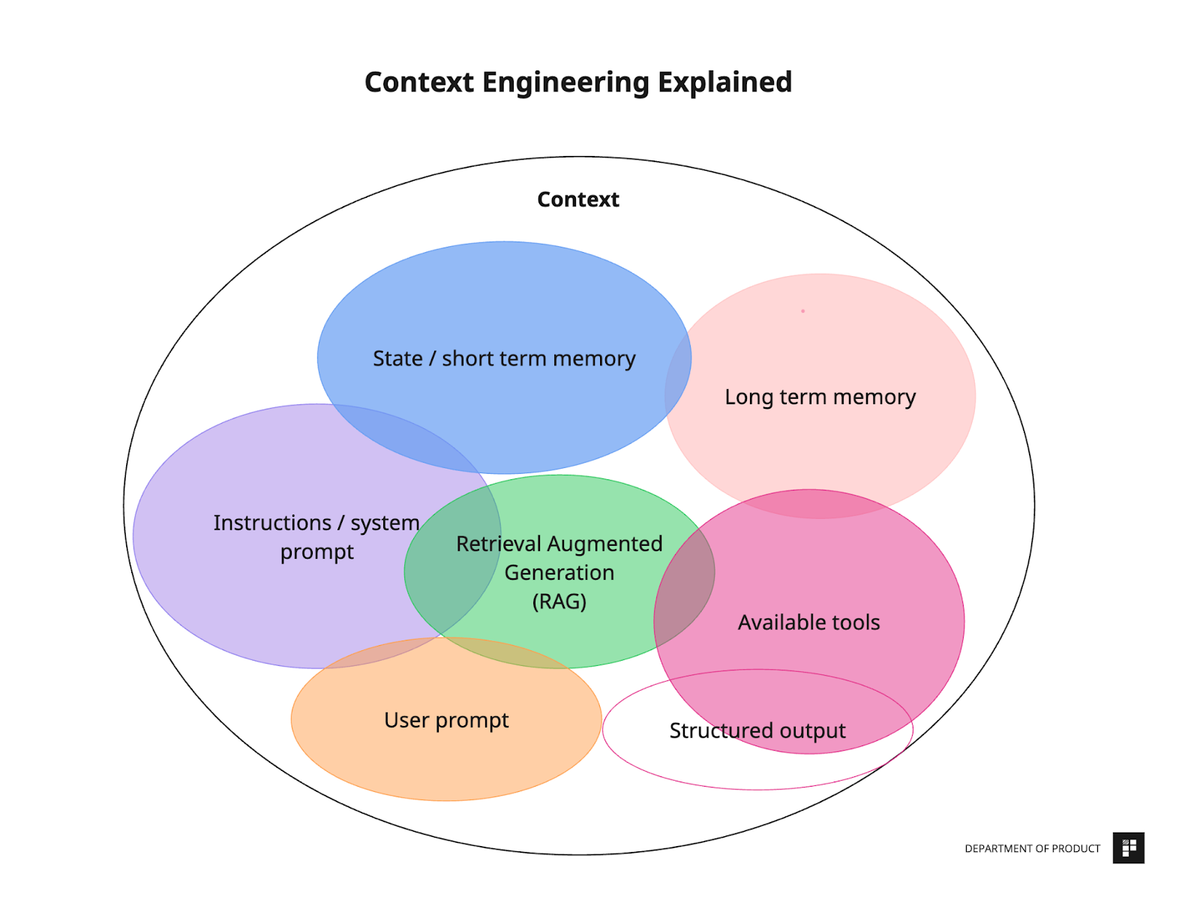

Prompt engineering is writing instructions for a single task. Context engineering is building a system that remembers previous orders, knows you hate foam, and has your triple-shot-oat-milk-latte ready before you even ask [1].

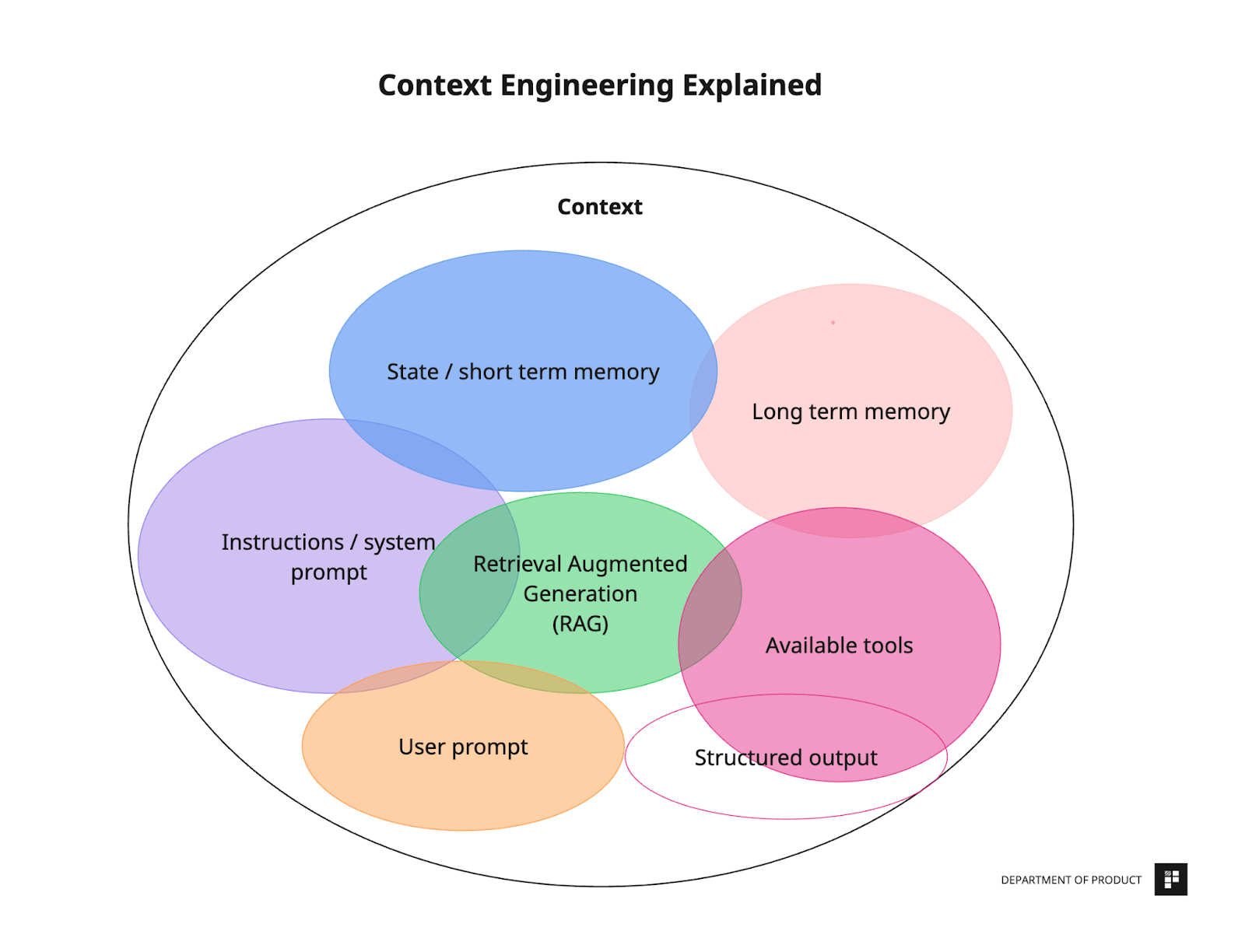

In short, prompt engineering is about the now. Context engineering is about creating a persistent, evolving understanding—the context—that makes an AI truly useful over time. It’s the art and science of feeding the AI the right information, from the right sources, at the right time, so it can do its job without forgetting what it learned two seconds ago.

(Image Source: Adapted from multiple sources showing the simplicity of a single prompt versus the rich, multi-faceted nature of a context-engineered system)

The “Lost in Translation” Problem: When Good AI Goes Bad

Large Language Models (LLMs) are powerful, but they have a critical weakness: the context window. This is the AI’s short-term memory. Everything you tell it (instructions, documents, your life story) has to fit into this window. Once the window is full, something has to give: older content is dropped or compressed, so the AI loses the earliest parts of the conversation. It’s not malicious; it’s just how the math works.
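To make the goldfish effect concrete, here is a minimal sketch of one common way an application fits a conversation into a fixed window: keep the newest messages and drop the oldest. The names `count_tokens` and `fit_to_window` are hypothetical, and counting whitespace-separated words is only a crude stand-in for a real tokenizer.

```python
def count_tokens(text: str) -> int:
    # Assumption: one token per word. Real tokenizers split text differently.
    return len(text.split())

def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):      # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > budget:
            break                           # everything older is dropped
        kept.append(message)
        used += cost
    return kept[::-1]                       # restore chronological order

history = [
    "My startup sells oat milk to coffee shops.",
    "We have 40 customers in Berlin.",
    "What should our pricing be?",
]
print(fit_to_window(history, budget=12))
```

With a budget of 12, the first message no longer fits, so the model would answer the pricing question without knowing what the startup sells.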

“Simple,” you say, “let’s just build bigger windows!” Not so fast. Research shows that as context windows get massive, AI models can suffer from a kind of “brain fog.”

- Context Distraction: With too much information, the model gets distracted by the sheer volume of its own memories and starts repeating itself or losing focus on the actual task. One study found that a top-tier model’s performance started to degrade long before its massive context window was full [2]. It’s like trying to find a single sticky note in a room wallpapered with them.

- Context Poisoning: This is even scarier. If the AI makes a mistake or “hallucinates” a fact early on, that error gets baked into the context. The AI then references this bad information over and over, leading it down a rabbit hole of nonsense. The DeepMind team saw this happen when an agent playing Pokémon hallucinated the game state and then spent ages pursuing impossible goals [3].

This is why you can’t just throw more data at your AI and hope for the best. You need to be a curator, a librarian, an architect of information.

(Image Source: Department of Product, Substack [6])

The AI Whisperer's Toolkit: How Context Engineering Works

So how do you stop your AI from being a forgetful, distractible mess? Context engineering provides a set of powerful techniques.

| Technique | Analogy | Why It Matters for Your Startup |
|---|---|---|
| Retrieval-Augmented Generation (RAG) | The AI’s personal, searchable library | Your AI can instantly access your company’s private knowledge base, product docs, or customer data without expensive retraining. |
| AI Agents & Tools | Giving your AI hands, eyes, and a phone | Your AI can browse the web, check stock prices, or book a meeting. It’s not just a brain in a jar; it’s an active participant in workflows. |
| Memory Systems | The AI’s short-term and long-term memory | Your AI remembers user preferences from last week’s conversation, providing a personalized and continuous experience that builds customer loyalty. |

These aren’t just theoretical concepts; they are the building blocks of sophisticated AI applications, from customer service bots that actually solve problems to AI coding assistants that understand your entire codebase [1].
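The RAG idea from the table can be sketched in a few lines: find the most relevant document, then place it in the prompt ahead of the question. This is an illustration under loud assumptions. Production systems usually rank documents with embeddings and a vector store, while this sketch substitutes simple word overlap, and `KNOWLEDGE_BASE`, `retrieve`, and `build_prompt` are made-up names.

```python
import re
from collections import Counter

def tokenize(text: str) -> Counter:
    # Lowercase word counts; a stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def score(query: str, document: str) -> int:
    # Count the word occurrences that the query and document share.
    return sum((tokenize(query) & tokenize(document)).values())

def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]

def build_prompt(query: str, documents: list[str]) -> str:
    # Only the retrieved snippets enter the context window, not the whole library.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

KNOWLEDGE_BASE = [  # hypothetical company documents
    "Refunds are issued within 14 days of purchase.",
    "The Pro plan includes priority support and SSO.",
    "Our office is closed on public holidays.",
]
question = "How many days do I have to request a refund?"
print(build_prompt(question, KNOWLEDGE_BASE))
```

The point of the design is that the model never needs retraining: updating the knowledge base changes what gets retrieved on the next question.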

Lessons from the Trenches: The Manus Method

At Manus, we’ve been living and breathing context engineering since day one. We’ve rebuilt our agent framework four times, learning hard lessons along the way in a process our founder affectionately calls “Stochastic Graduate Descent” [4]. Here are a few gems of hard-won wisdom:

- Obsess Over Your Cache: In AI, speed is everything, and cost is a close second. The KV-cache stores the computation already done for the start of a prompt, so a new request that begins with the same tokens can reuse it instead of starting over. A high cache hit rate means your AI is thinking faster and cheaper. We found that seemingly innocent things, like putting a timestamp in the system prompt, were killing our cache and costing us a fortune, because changing even one early token invalidates everything cached after it. For agents, where the input is often 100 times larger than the output, this matters enormously: it can be a 10x cost difference [4].

- Give Your AI Fewer Toys: When you give an agent hundreds of tools, it can get confused and become less effective. Instead of dynamically removing tools (which also breaks the cache), we learned to “mask” them. The tool definitions stay in the context, but we hide the toys the AI doesn’t need for a specific task, guiding it to the right choice without overwhelming it.

- The File System is Your Friend: Even with million-token context windows, you’ll run out of space. We treat the agent’s file system as a form of external, long-term memory. The agent can write down its thoughts, save important files, and read them back later. It’s the AI equivalent of keeping a detailed lab notebook.
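The three lessons above can be sketched together in a few small functions. This is an illustration, not the Manus implementation: every name here (`SYSTEM_PROMPT`, the tool names, `pick_tool`, `remember`, `recall`) is hypothetical, a character-level shared prefix is only a rough proxy for what a token-level cache could reuse, and filtering a dictionary of scores stands in for whatever constraint a real system applies when the model chooses a tool.

```python
import json
import os.path
import tempfile
from pathlib import Path
from typing import Optional

# 1. Cache-friendly prompts: keep the start of the prompt identical between calls.
SYSTEM_PROMPT = "You are a research agent. Use the tools provided."

def prompt_timestamp_first(now: str, history: list[str]) -> str:
    # Anti-pattern: the clock changes on every call, so the prefix never repeats.
    return "\n".join([f"Time: {now}", SYSTEM_PROMPT, *history])

def prompt_timestamp_last(now: str, history: list[str]) -> str:
    # Volatile data goes last, leaving the system prompt and history reusable.
    return "\n".join([SYSTEM_PROMPT, *history, f"Time: {now}"])

def shared_prefix_len(a: str, b: str) -> int:
    # Rough proxy for how much of a prompt a prefix cache could reuse.
    return len(os.path.commonprefix([a, b]))

# 2. Tool masking: the tool list never changes, only the allowed choice does.
TOOLS = ["browser_open", "browser_click", "shell_exec", "file_write"]
STATE_PREFIXES = {"browsing": ("browser_",), "coding": ("shell_", "file_")}

def pick_tool(scores: dict[str, float], state: str) -> str:
    # Ignore any tool outside the current state, however highly it scores.
    allowed = [t for t in TOOLS if t.startswith(STATE_PREFIXES[state])]
    return max(allowed, key=lambda t: scores.get(t, float("-inf")))

# 3. File system as memory: notes survive outside the context window.
def remember(notes: Path, key: str, value: str) -> None:
    data = json.loads(notes.read_text()) if notes.exists() else {}
    data[key] = value
    notes.write_text(json.dumps(data))

def recall(notes: Path, key: str) -> Optional[str]:
    return json.loads(notes.read_text()).get(key) if notes.exists() else None

history = ["user: find three competitors", "agent: opening the browser"]
wasteful = shared_prefix_len(prompt_timestamp_first("10:00:01", history),
                             prompt_timestamp_first("10:00:02", history))
reusable = shared_prefix_len(prompt_timestamp_last("10:00:01", history),
                             prompt_timestamp_last("10:00:02", history))
print(f"shared prefix: {wasteful} chars (timestamp first) vs {reusable} (timestamp last)")

print(pick_tool({"shell_exec": 0.9, "browser_open": 0.4}, state="browsing"))

with tempfile.TemporaryDirectory() as workdir:
    notes = Path(workdir) / "notes.json"
    remember(notes, "goal", "compare three competitors")
    print(recall(notes, "goal"))
```

Moving the timestamp to the end lets almost the entire prompt match between calls. In the masking example, `shell_exec` scores highest but is never chosen while the agent is browsing, even though it was never removed from the tool list.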

The Rise of Situated Agency: It's the Environment, Stupid!

If context engineering is the "how," then Situated Agency is the "why." This powerful concept, recently highlighted by technologist Dev Shah, argues that true intelligence doesn't come from the AI model alone. Instead, it emerges from the dynamic coupling of a model with its environment—its tools, memory, and execution sandbox [7].

"I have a solid argument favoring that intelligence cannot exist in isolation. It cannot be dissociated from the context and environment in which it operationalizes itself." - Dev Shah [7]

This isn't just academic theory; it's a validated business strategy. The recent acquisition of ManusAI by Meta is a prime example. Meta didn't just acquire a model; they acquired an environment. Manus achieved state-of-the-art agentic performance, even beating OpenAI on the GAIA benchmark, not by building a new foundation model, but by engineering the most compatible environment for existing models to reason and act within [7].

This suggests a paradigm shift. While some companies are in a costly race to build the biggest and best models, the real leverage may lie in building the best platform for those models to operate in. It's the "Android vs. iOS" strategy applied to AI: you don't need to build the best phone; you need to build the best operating system that can run on any phone.

The Bottom Line for Founders and Investors

As Andrej Karpathy, one of the brightest minds in AI, put it, context engineering is the “delicate art and science of filling the context window with just the right information” [1]. It’s not as flashy as training a new model from scratch, but it’s what makes AI products actually work in the real world.

For investors, the next time a startup pitches you their revolutionary AI, go beyond the model. Ask about their context engineering and Situated Agency strategy. Are they building a model, or are they building an environment? How do they manage memory, prevent context poisoning, and optimize for cost and latency? Their answers will tell you if they're building a fleeting novelty or a lasting, intelligent system.

For founders, mastering context engineering is your competitive advantage. It’s how you’ll build an AI that feels less like a goldfish and more like your smartest employee—one that remembers, learns, and gets better over time.

Written by Bogdan Cristei and Manus AI

References

[1] DataCamp. (2025, July 8). Context Engineering: A Guide With Examples. https://www.datacamp.com/blog/context-engineering

[2] Breunig, D. (2025). Four surprising ways context can get out of hand. (As referenced in the DataCamp article).

[3] Google DeepMind. (2025). Gemini 2.5 Technical Report. (As referenced in the DataCamp article).

[4] Ji, Y. (2025, July 18). Context Engineering for AI Agents: Lessons from Building Manus. https://manus.im/blog/Context-Engineering-for-AI-Agents-Lessons-from-Building-Manus

[5] LangChain Blog. (2025, June 23). The rise of "context engineering". https://blog.langchain.com/the-rise-of-context-engineering/

[6] Department of Product. (2024). Context Engineering for AI Agents Explained. https://departmentofproduct.substack.com/p/context-engineering-for-ai-agents-explained

[7] Shah, D. (2025, December 30). Post on Situated Agency and Meta's acquisition of ManusAI. https://x.com/0xDevShah/status/2005808074704183739